Google Search Console: The Definitive Guide

This guide has everything you need to know about the Google Search Console (GSC).

If you’re new to SEO, I’ll show you how to get started with the GSC.

And if you’re an SEO pro? I’ll reveal advanced tips, tactics and strategies that you can use to get higher rankings.

Bottom line:

If you want to get the most out of the Search Console, you’ll love this guide.

Chapter 1: Getting Started With the Google Search Console

In this chapter I’ll show you how to use the Search Console. First, you’ll learn how to add your site to the GSC. Then, I’ll help you make sure your site settings are good to go.

Step #1: How to Add Your Site to the GSC

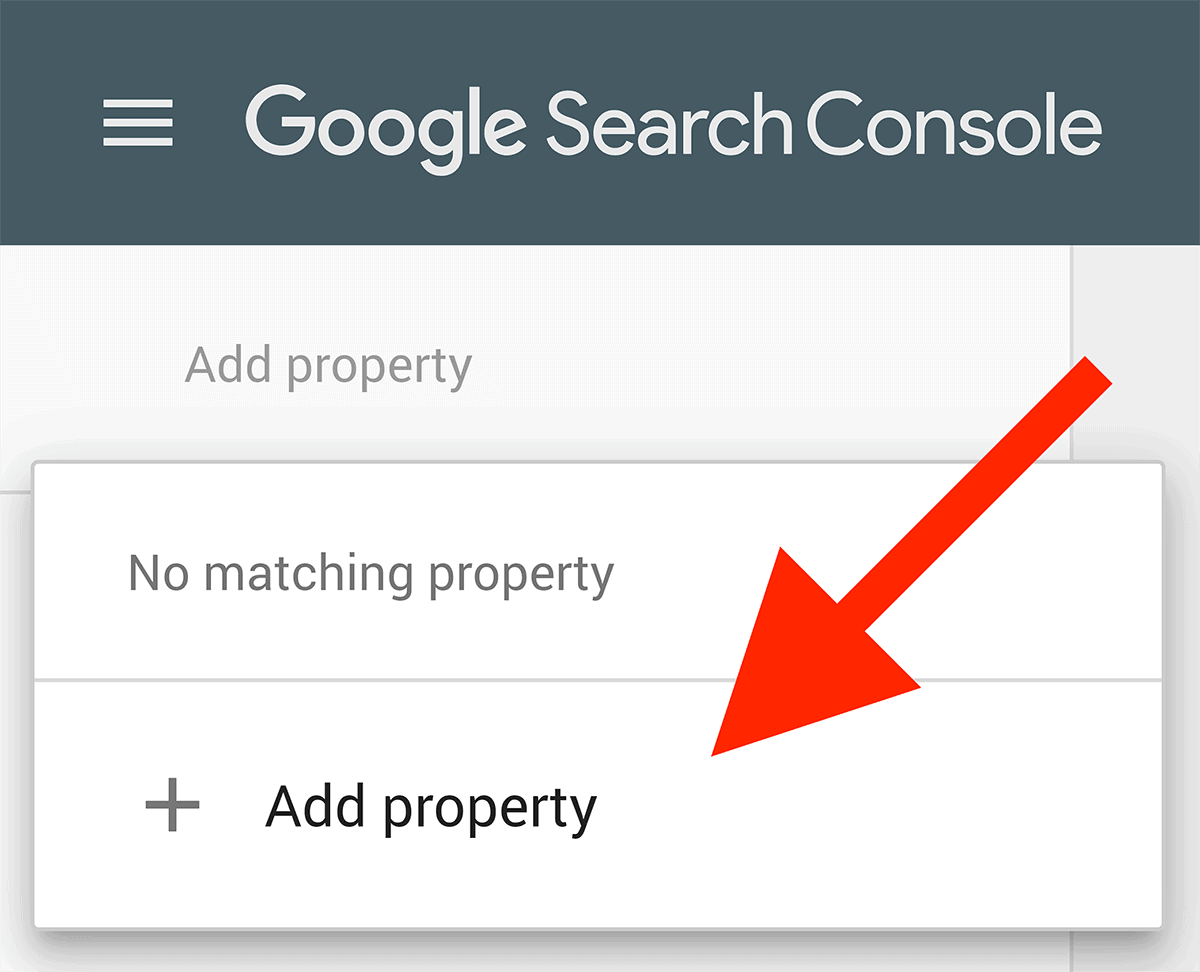

First, log in to the Google Search Console and click on “Add Property”.

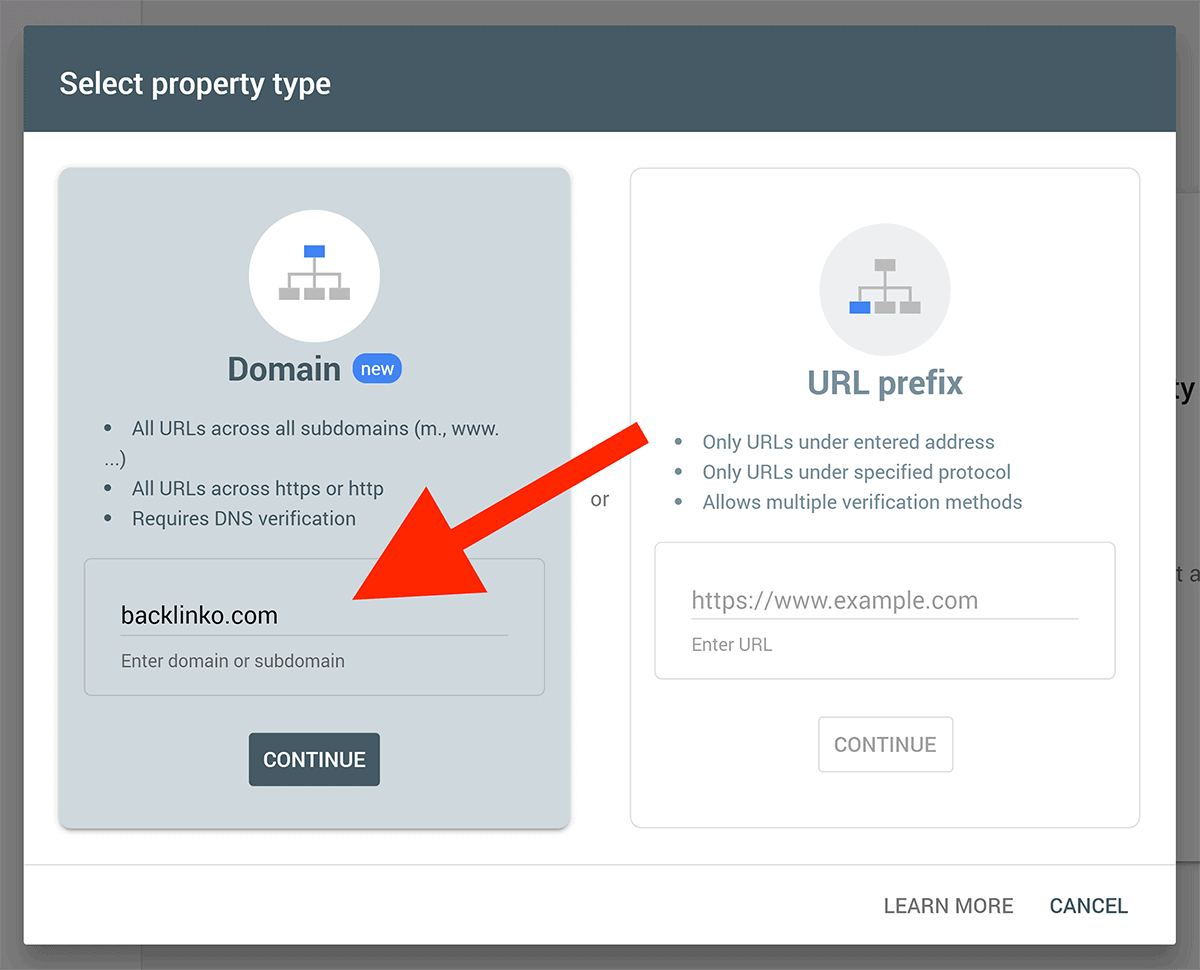

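Then, copy and paste your homepage URL into the “URL prefix” field. (A “Domain” property can only be verified with a DNS record, so the HTML file and HTML tag methods below won’t be available for it.)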

Next, it’s time to verify your site.

There are 7 ways to verify your site. Here are the 3 easiest ways to get your site verified:

- HTML File: Upload a unique HTML file to your site.

- CNAME or TXT Record: Add a special CNAME or TXT record to your domain’s DNS settings.

- HTML Code Snippet (my personal recommendation): Simply add a small snippet of code (an HTML tag) to the <head> section of your homepage’s code.

Once you’ve done that, you can proceed to step 2.
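If you used the HTML tag method, the tag Google gives you is a meta element named “google-site-verification”. Here’s a minimal Python sketch (standard library only) that checks whether a page’s HTML actually contains that tag. It assumes you’ve already downloaded your homepage HTML as a string, and “abc123” is a made-up placeholder for your real token.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content of <meta name="google-site-verification"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "google-site-verification":
            self.tokens.append(attributes.get("content") or "")


def find_verification_tokens(html: str) -> list[str]:
    """Return every verification token found in the given HTML."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens


# Hypothetical homepage HTML; "abc123" stands in for your real token.
homepage = """<html><head>
<meta name="google-site-verification" content="abc123">
<title>My site</title>
</head><body>Hello</body></html>"""

print(find_verification_tokens(homepage))  # ['abc123']
```

Search Console runs its own check when you click “Verify”, so treat this as a quick sanity check, not a replacement.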

Step #2: Set Your Target Country

Google does a pretty good job of figuring out which country your site is targeting. To do that, they look at data like:

- Your ccTLD (for example: co.uk for UK sites)

- The address listed on your website

- Your server location

- The country most of your backlinks come from

- The language your content is written in (English, French, etc.)

That said, the more information you can give Google, the better.

So the next step is to set your target country inside the (old) GSC.

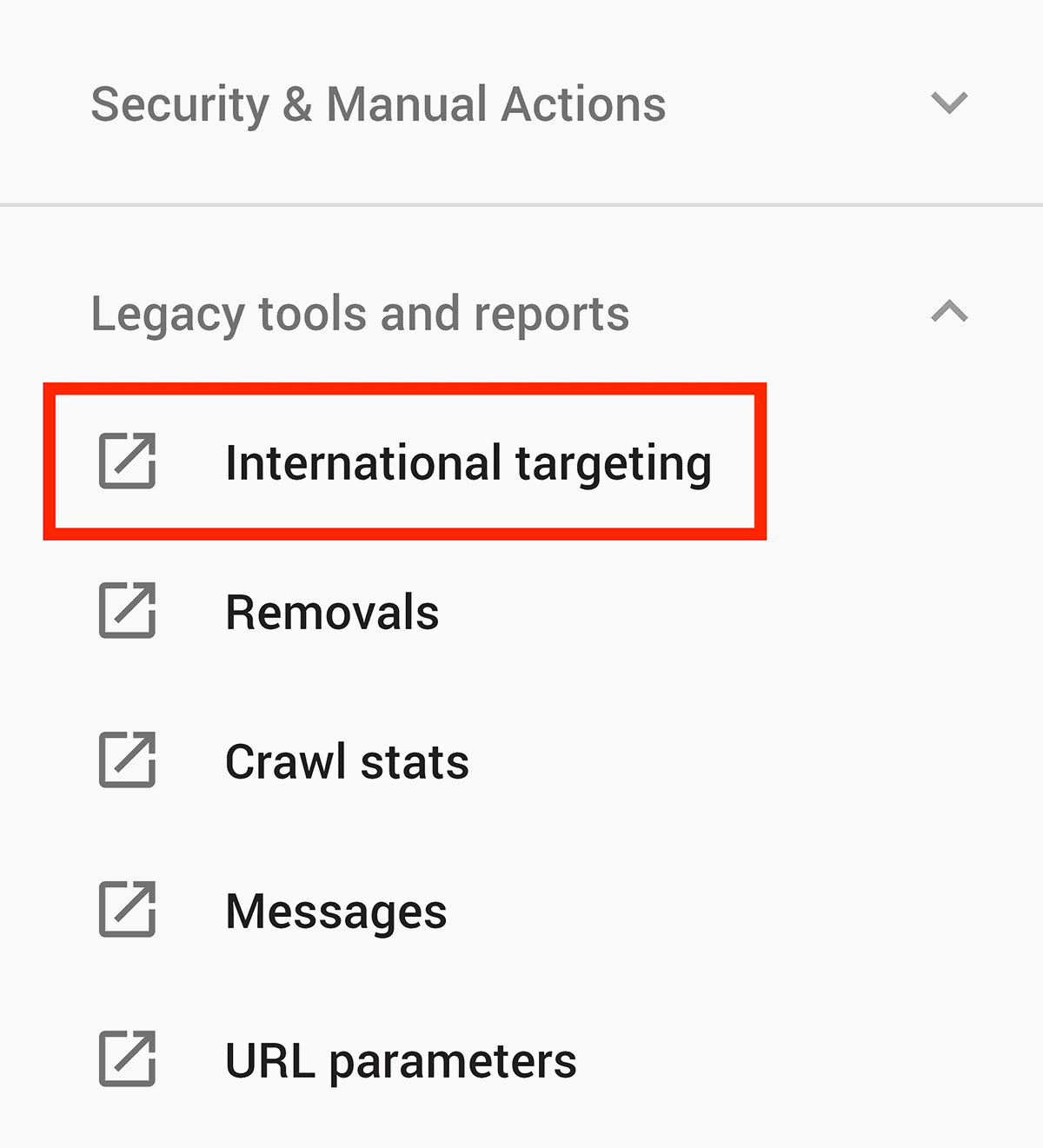

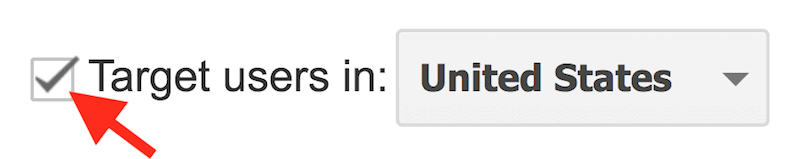

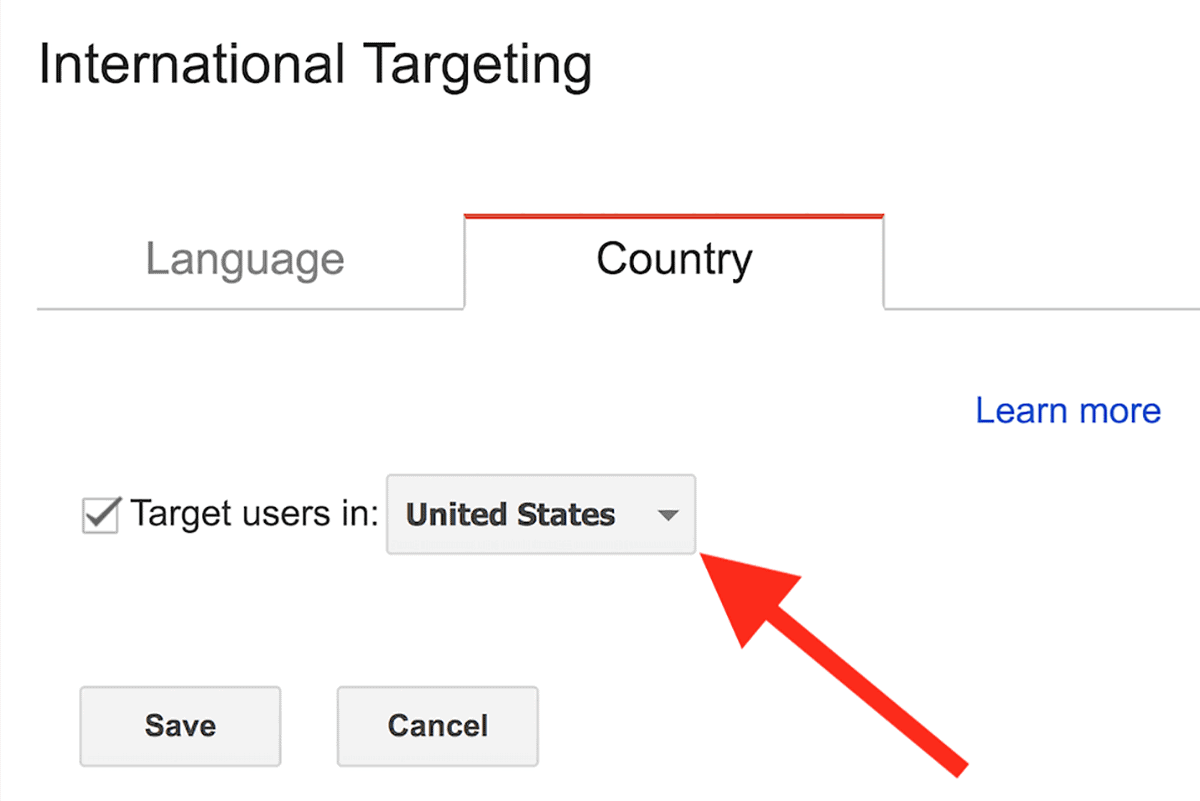

Click the “International Targeting” link (under “Search Traffic”)

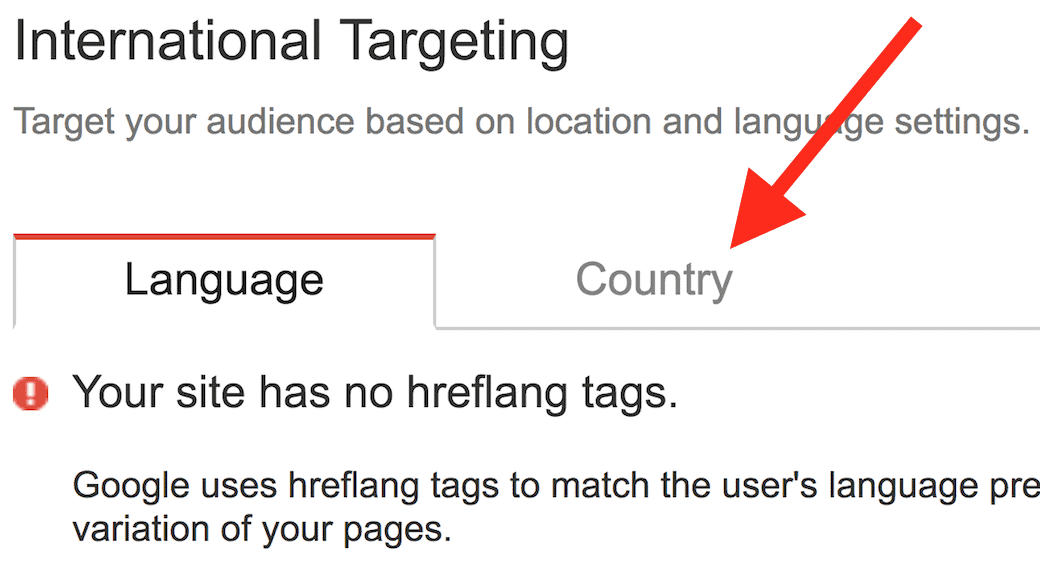

Click the “Country” tab

Check the “Target users in” box

Select your target country from the drop-down box

And you’re all set.

Step #3: Link Google Analytics With Search Console

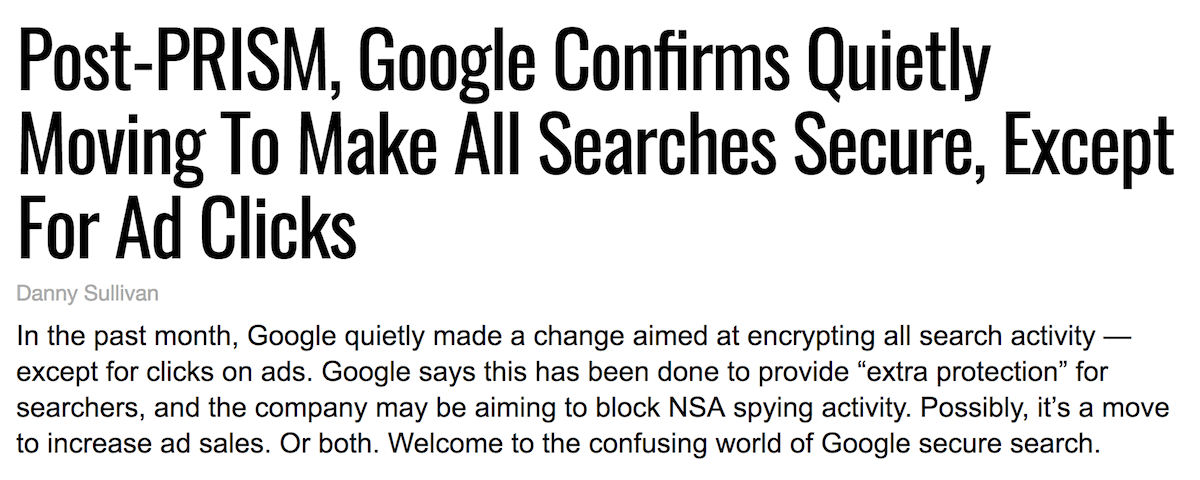

Back in 2013, Google switched all searches over to HTTPS.

This was great for security. But it was a bummer for website owners.

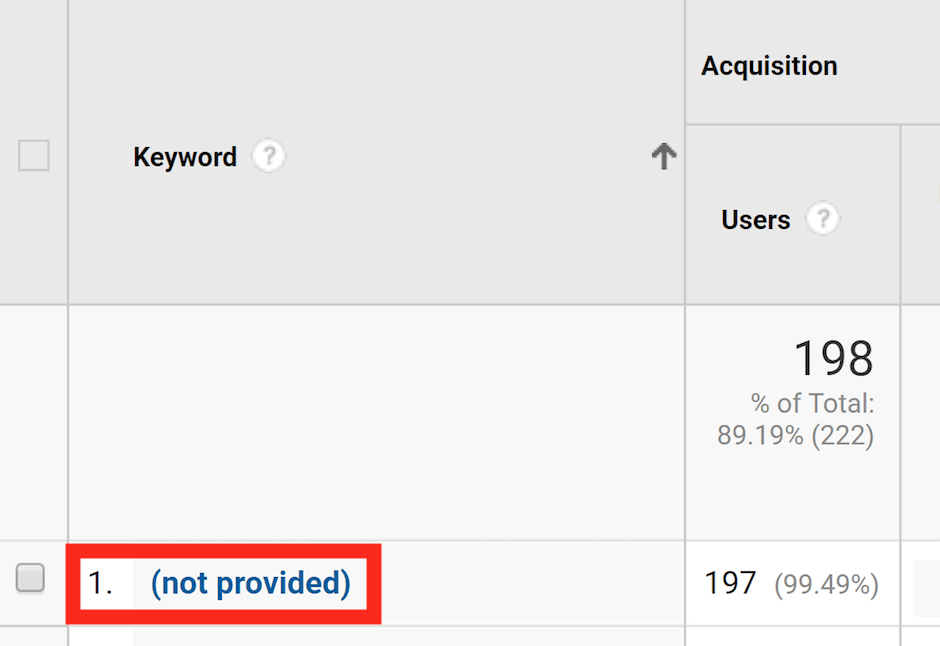

Suddenly, priceless keyword data vanished from Google Analytics.

Instead, all we got was “(not provided)”:

The good news? There’s a simple way to get some of that keyword data back:

Link Google Analytics with your Google Search Console account.

Here’s how:

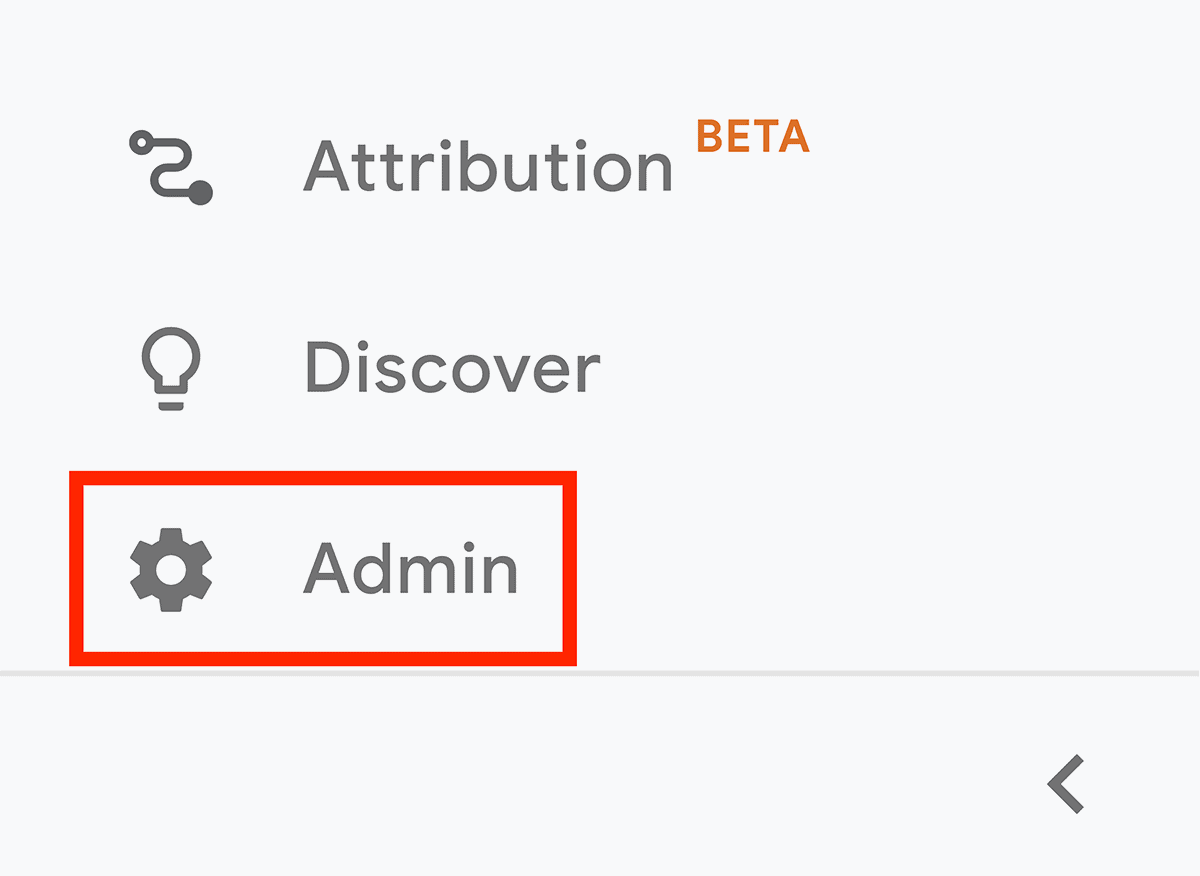

Open up your Google Analytics. Then, click the “Admin” button at the bottom of the left menu.

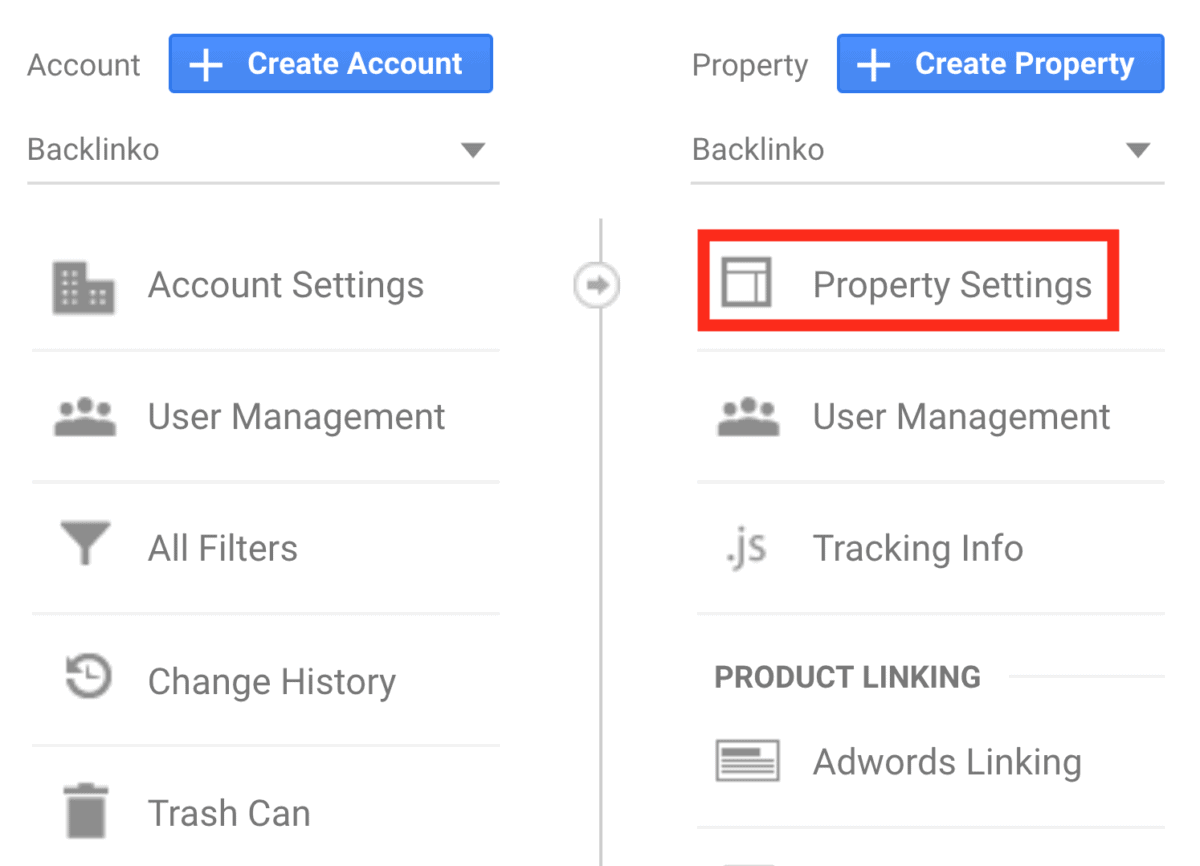

Click on the “Property Settings” link.

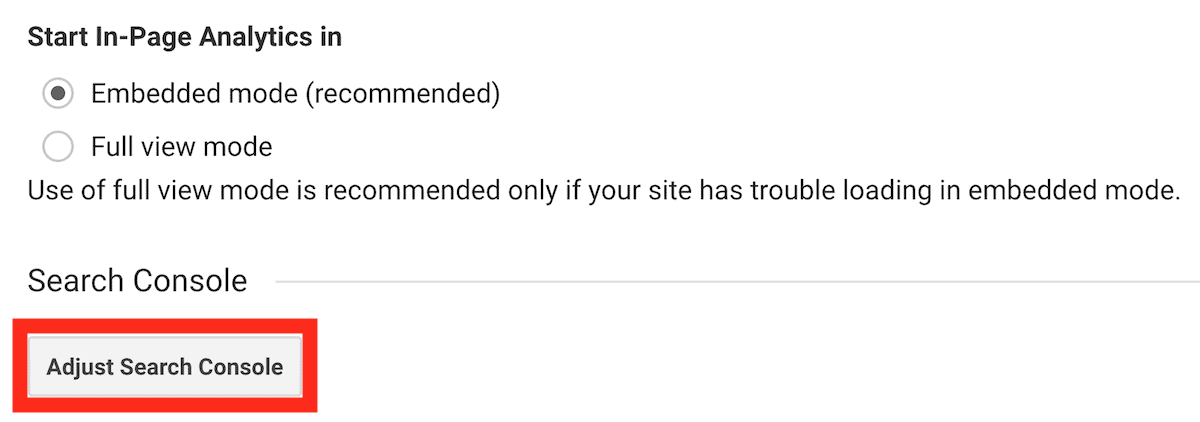

Scroll down until you see the “Adjust Search Console” button. Click it!

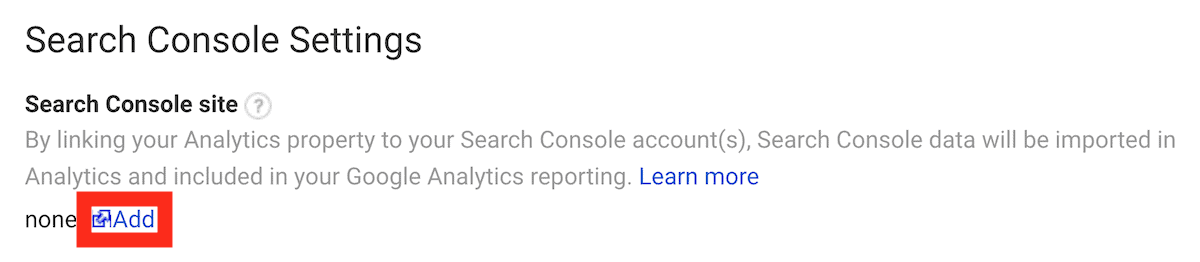

Click on “Add”.

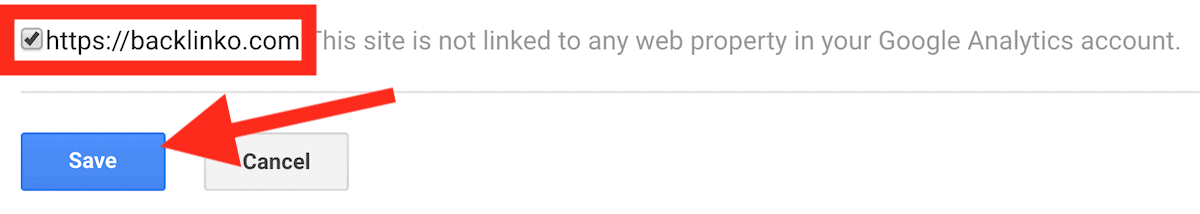

Scroll down until you find your website, check the box, and hit “Save”.

You’re done! Analytics and Search Console are now linked.

Let’s see what you get…

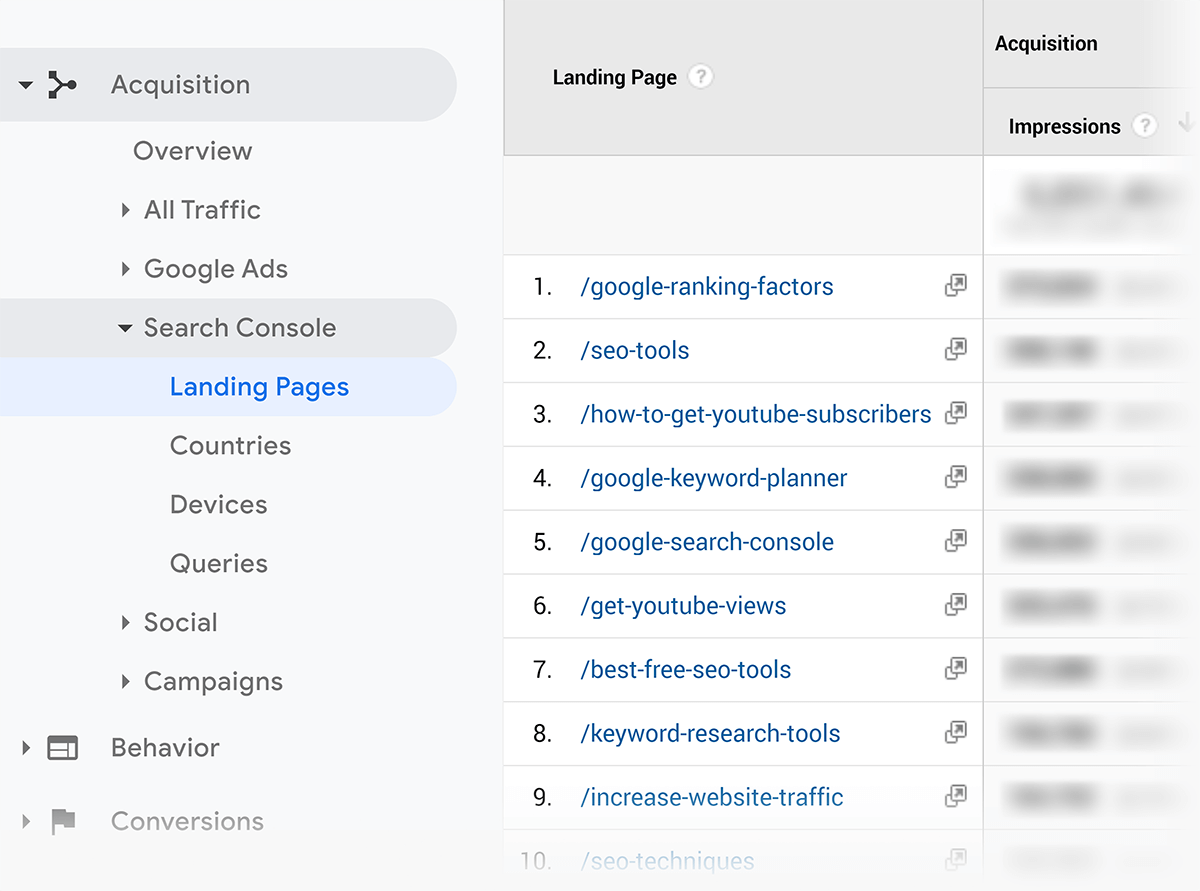

Landing pages with impression and click data:

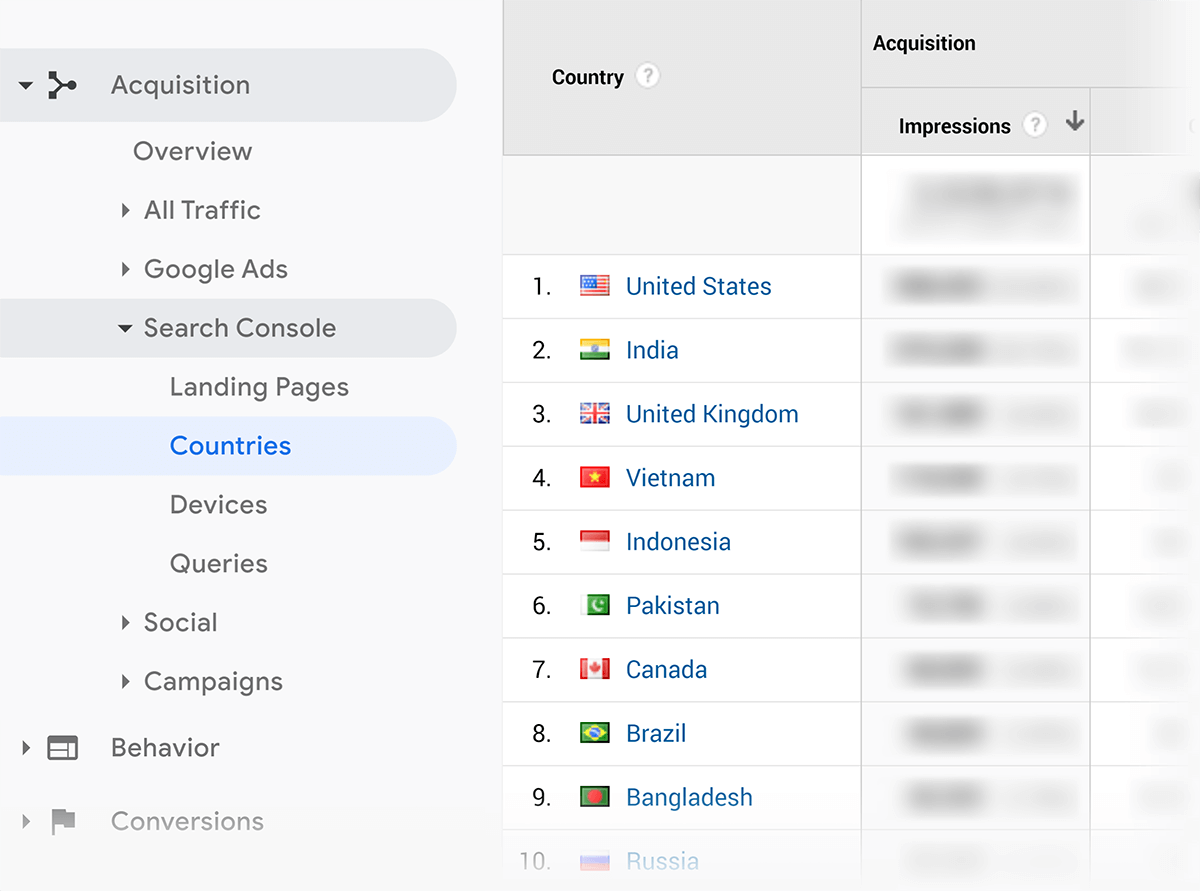

Impression, click, CTR, and position data by country:

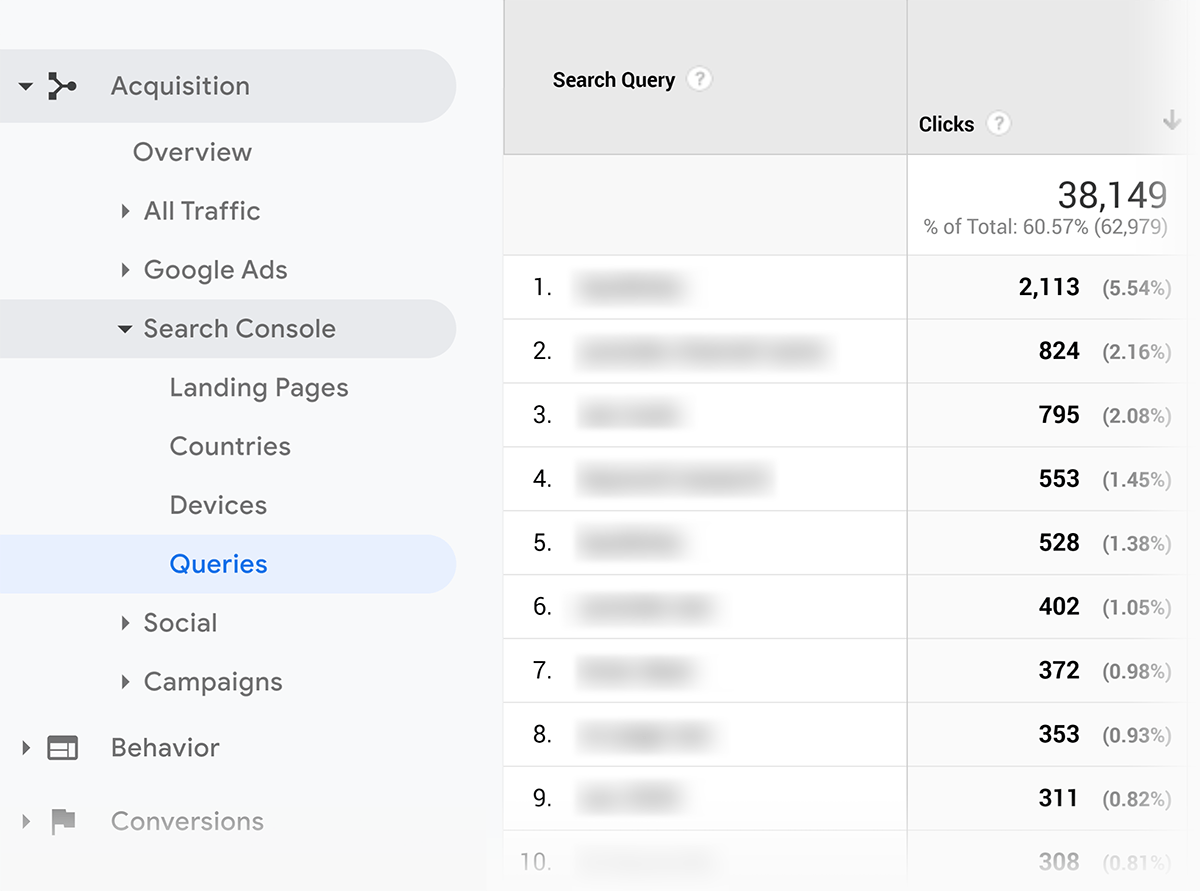

But most importantly… keyword data:

Boom!

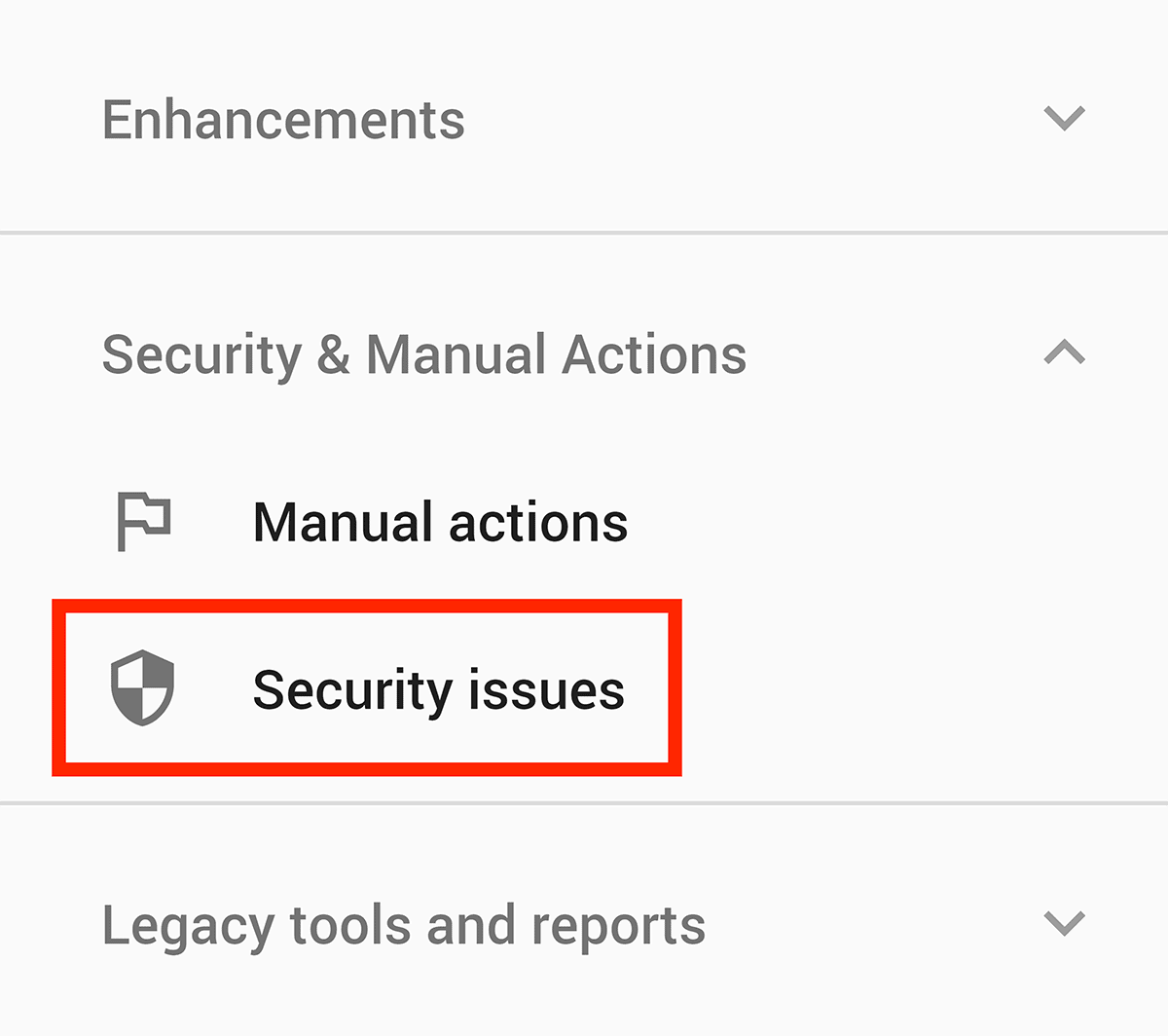

Step #4: Check For Security Issues

Next, check to see if you have any security issues that might be hurting your site’s SEO.

To do that, click “Security Issues”.

And see what Google says:

In most cases there won’t be any security problems (and as you can see here, my site is clean). But it’s worth checking.

Step #5: Add a Sitemap

I’ll be honest:

If you have a small site, you probably don’t NEED to submit a sitemap to Google.

But for bigger sites (like ecommerce sites with thousands of pages) a sitemap is KEY.

That said: I recommend that you go ahead and submit a sitemap either way.

Here’s how to do it:

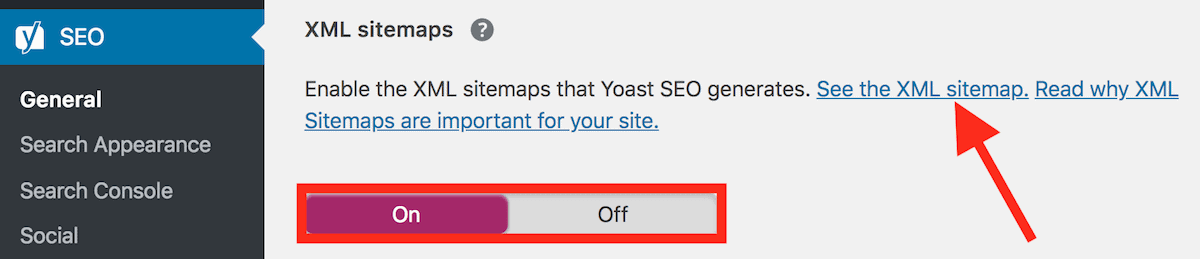

First up, you need to create a sitemap. If you’re running WordPress with the Yoast plugin, you should already have one.

If you don’t have a sitemap yet, head over to Yoast. Then, set the XML sitemaps setting to “On” (under “General/Features”):

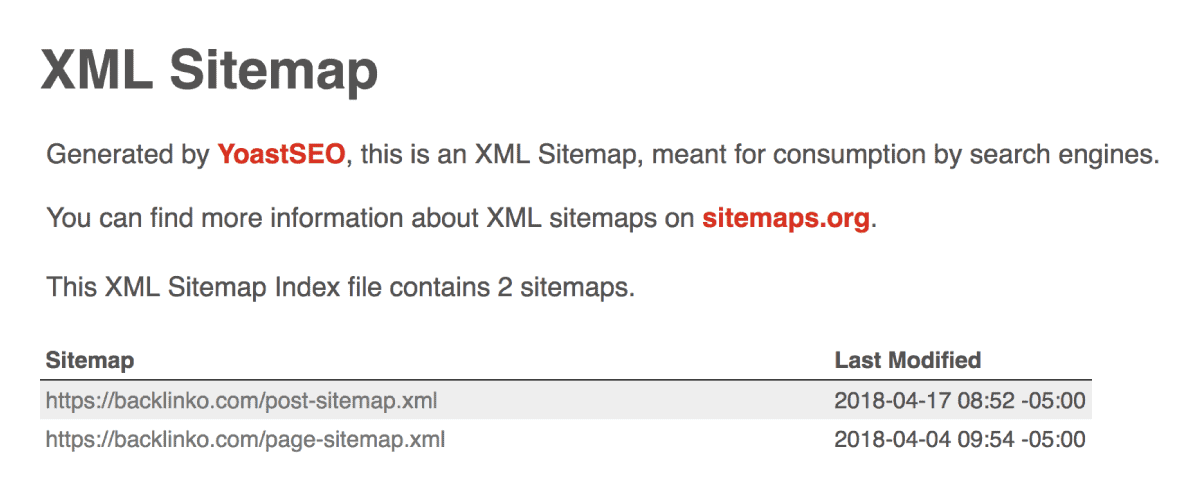

Click the “See the XML Sitemap” link, which will take you to your sitemap:

Don’t use Yoast? Go to yoursite.com/sitemap.xml. If you have a sitemap, it’s usually here. If not, you want to create one.
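And if you do need to create one by hand, a sitemap is just an XML file that lists your URLs in the sitemaps.org format. Here’s a bare-bones Python sketch that builds one with the standard library. The example.com URLs are placeholders, and a real sitemap can also carry optional tags like <lastmod> that this version leaves out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        # ElementTree escapes characters like "&" in the URL automatically.
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    "https://example.com/",
    "https://example.com/blog/",
]))
```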

So let’s submit a sitemap to Google.

It’s SUPER easy to do in the new GSC.

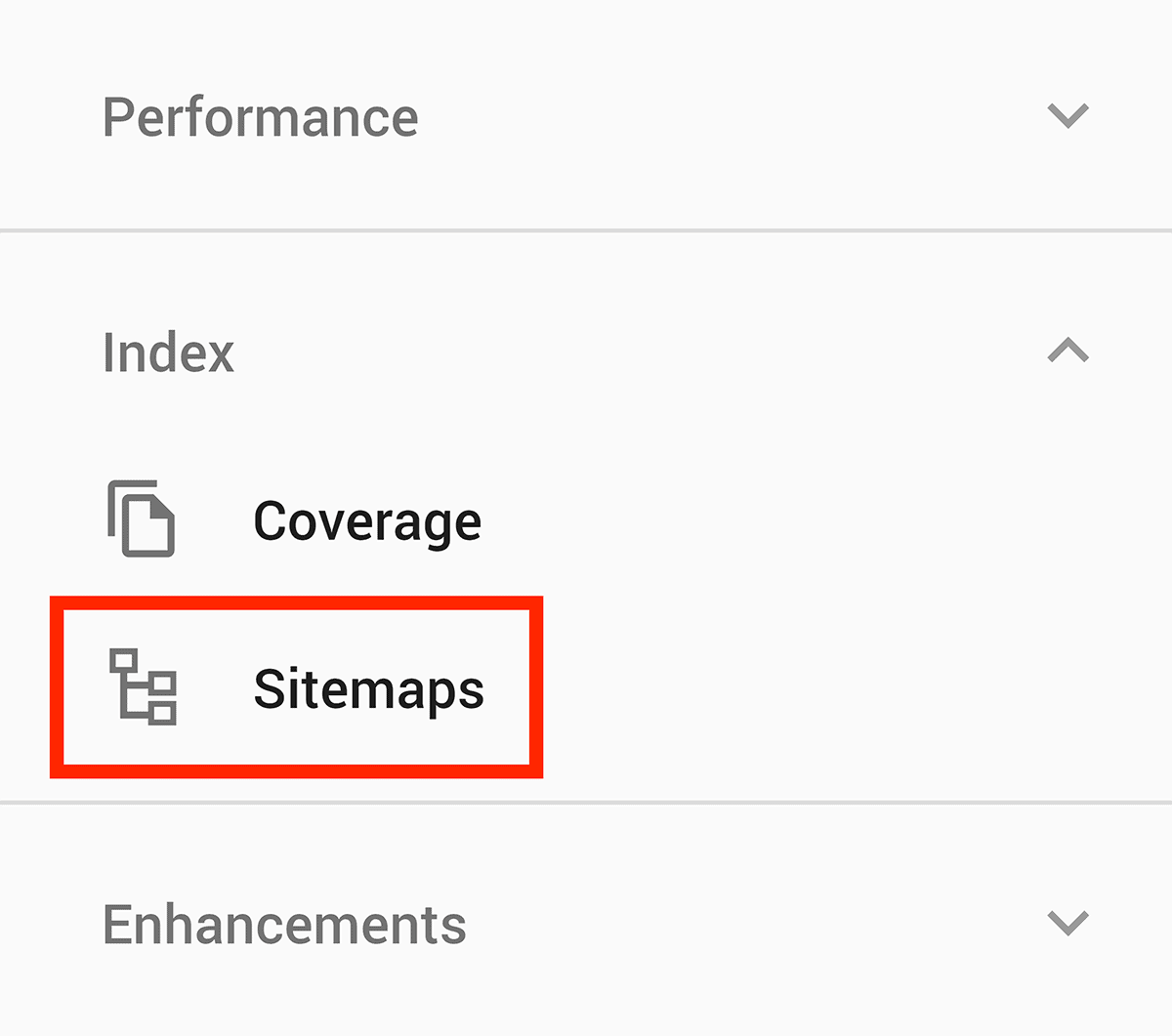

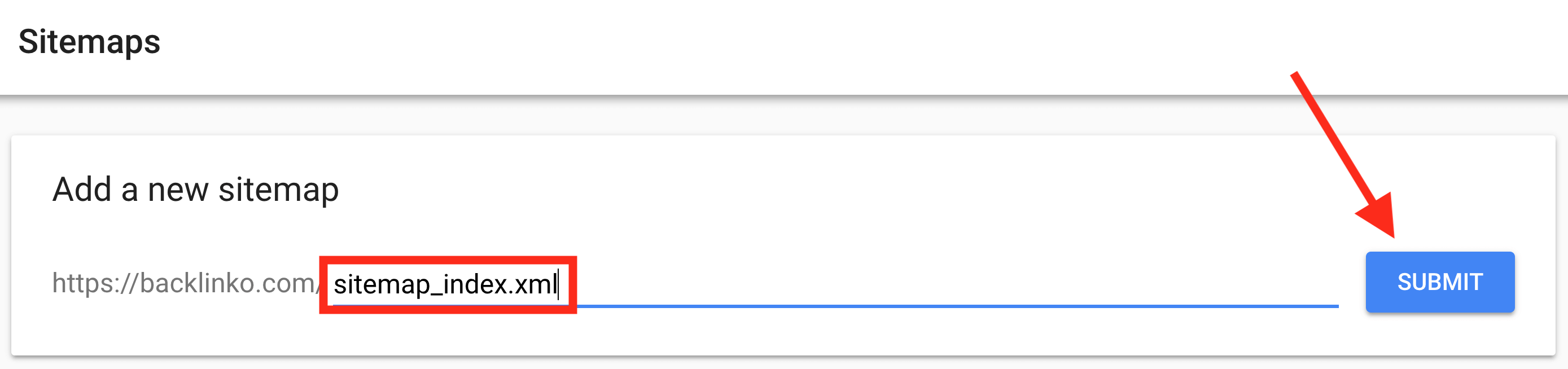

Grab your sitemap URL. Then, hit the “Sitemaps” button.

Paste in your URL and click “Submit”.

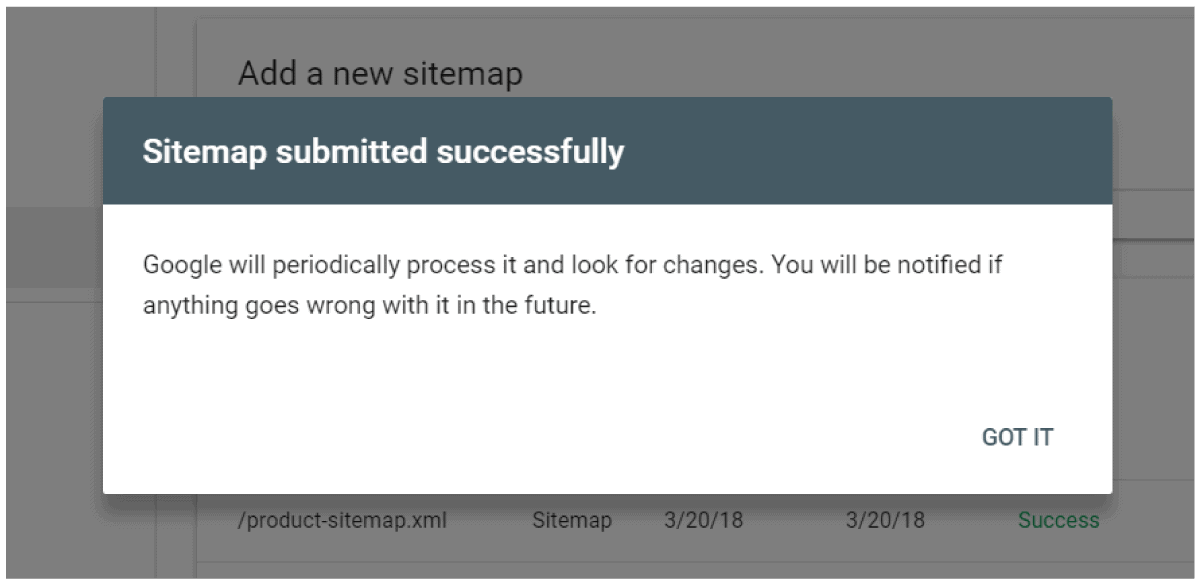

And that’s it:

Told you it was easy 🙂

Chapter 2: How to Optimize Your Technical SEO With the GSC

In this chapter I’ll share the tactics I use to SLAM DUNK my technical SEO.

As you know, when you fix technical SEO problems, you’ll usually find yourself with higher rankings and more traffic.

And the Google Search Console has a TON of features to help you easily spot and fix technical SEO issues.

Here’s how to use them:

Use The “Index Coverage” Report To Find (And Fix) Problems With Indexing

If everything on your website is set up right, Google will:

a) Find your page

and

b) Quickly add it to their index

But sometimes, things go wrong.

Things you NEED to fix if you want Google to index all of your pages.

And that’s where the Index Coverage report comes in.

Let’s dive in.

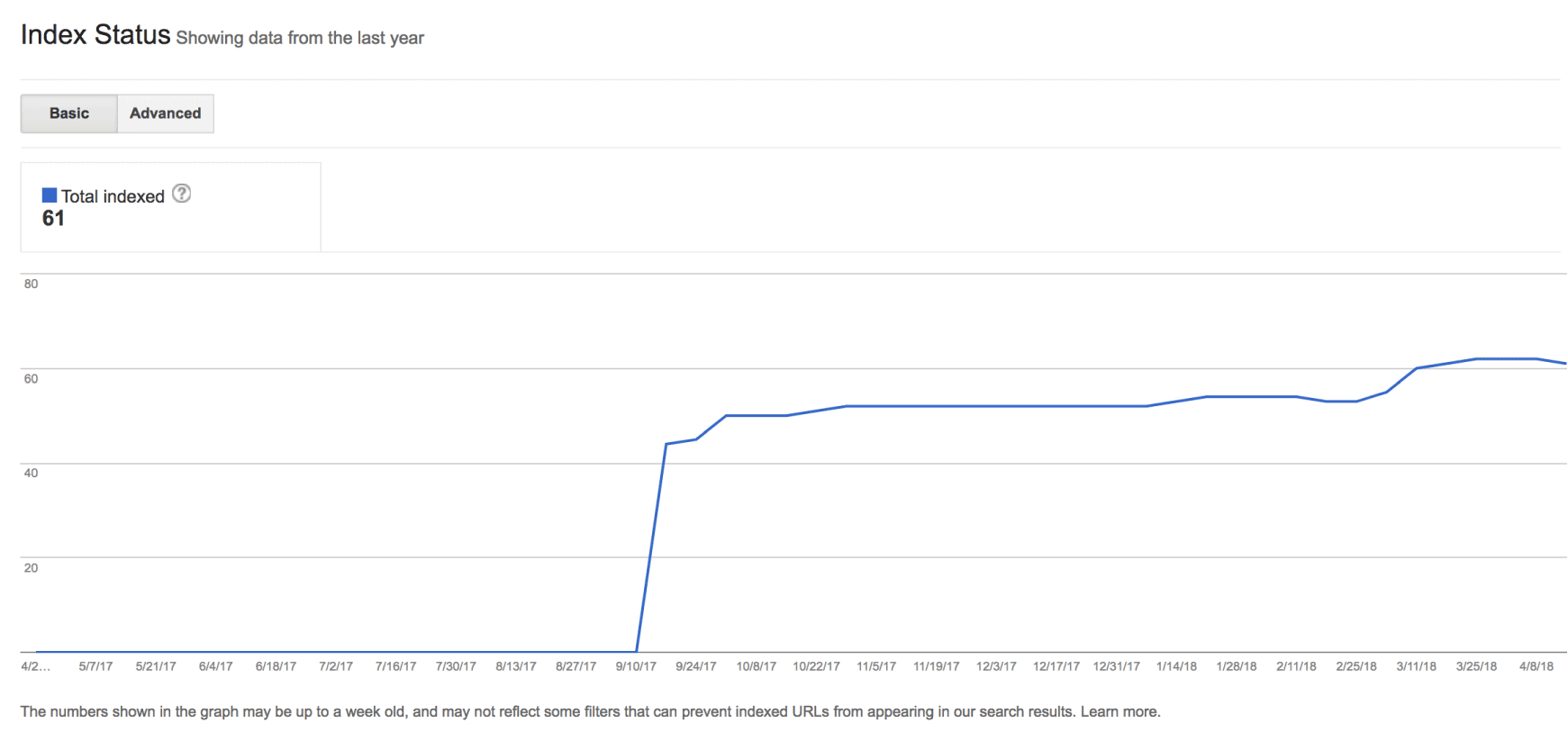

What is the Index Coverage Report?

The Index Coverage report lets you know which pages from your site are in Google’s index. It also lets you know about technical issues that prevent pages from getting indexed.

It’s part of the new GSC and replaces the “Index Status” report in the old Search Console.

Note: The Coverage report is pretty complicated.

And I could just hand you a list of features and wish you luck.

(In fact, that’s what most other “ultimate guides” do).

Instead, I’m going to walk you through an analysis of a REAL site (this one), step-by-step.

That way you can watch me use the Index Coverage Report to uncover problems… and fix them.

How to Find Errors With The Index Coverage Report

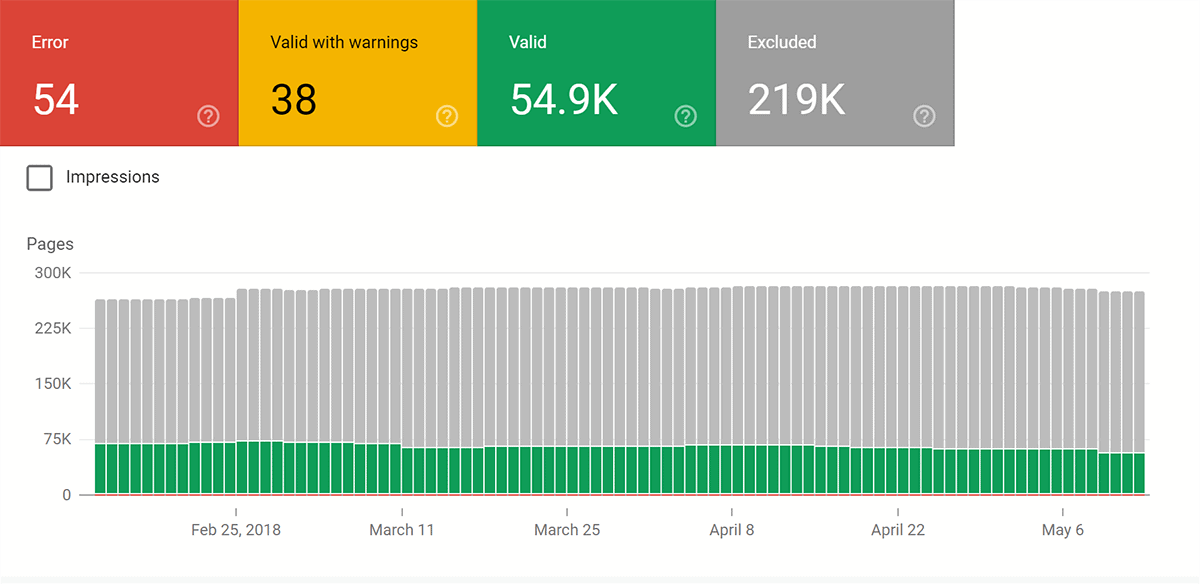

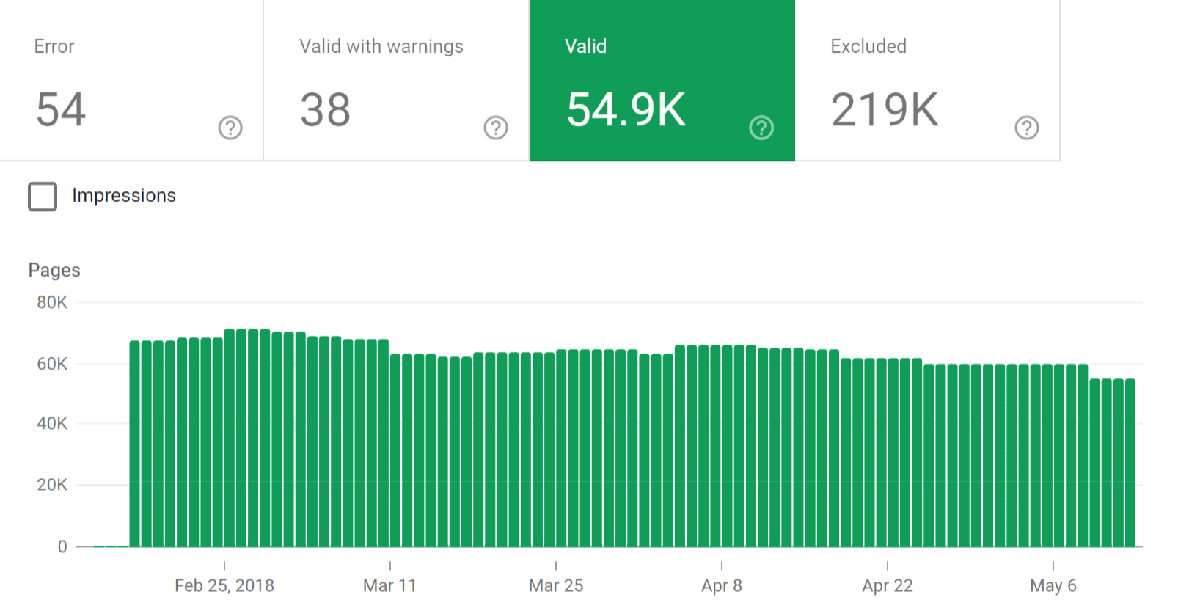

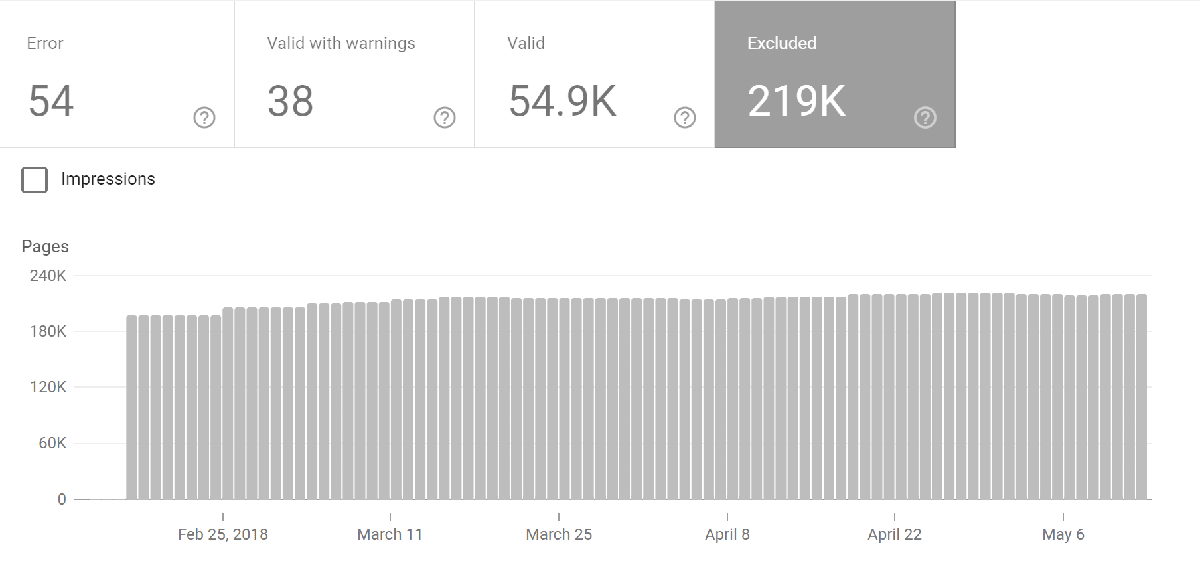

At the top of the Index Coverage report we’ve got 4 tabs:

- Error

- Valid with warnings

- Valid

- Excluded

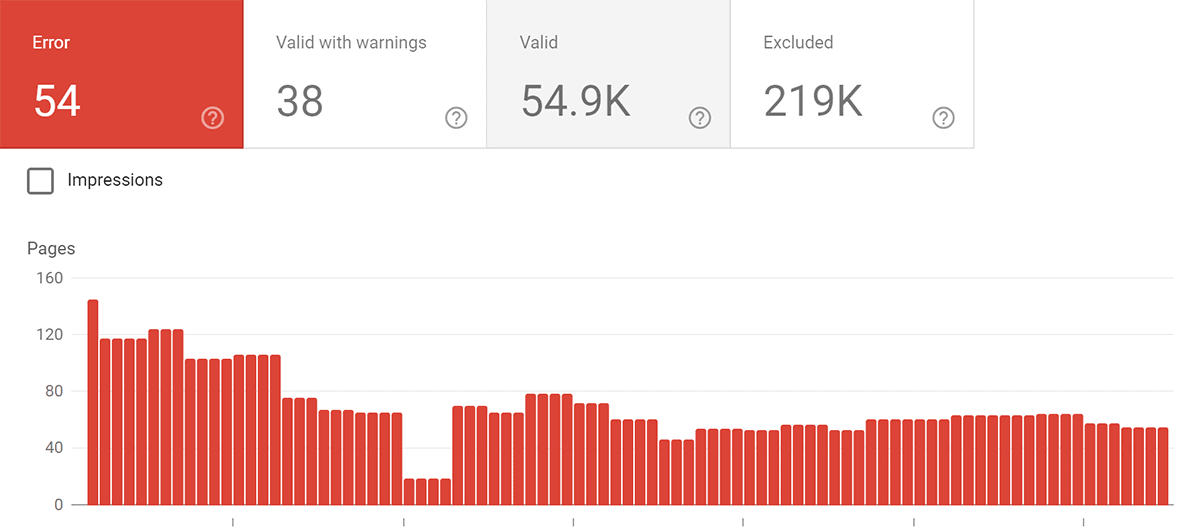

Let’s focus on the “Error” tab for now.

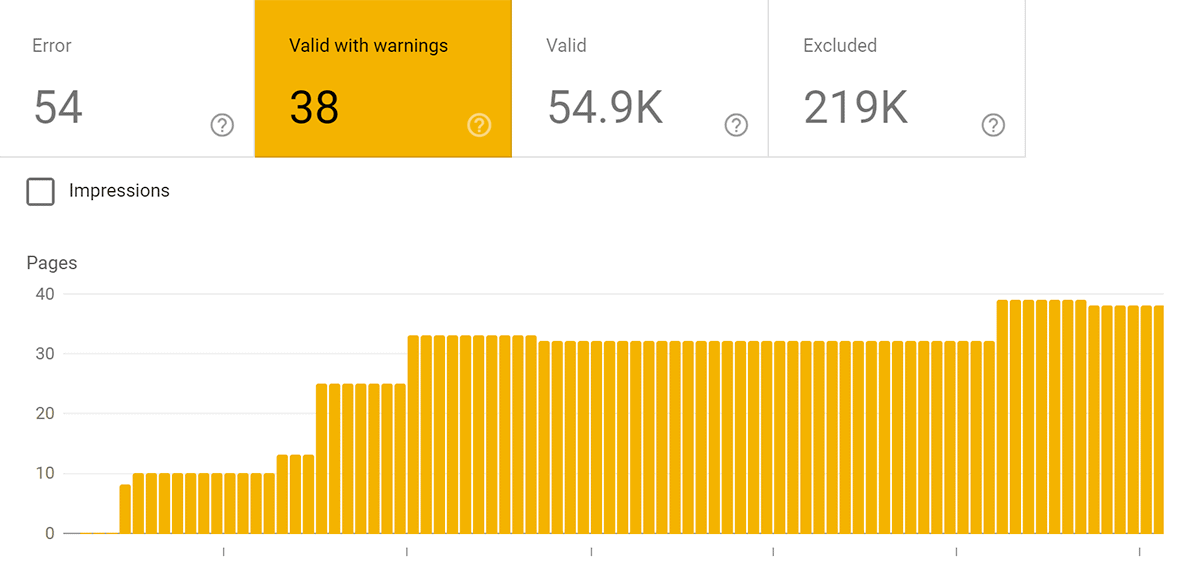

As you can see, this site has 54 errors. The chart shows how that number has changed over time.

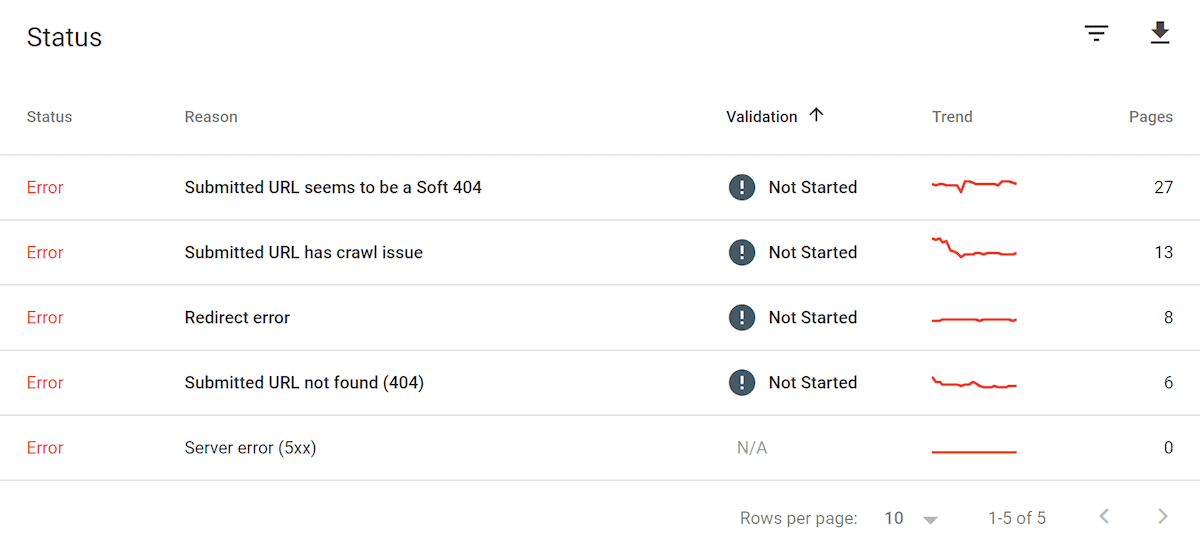

If you scroll down, you get deets on each of these errors:

There’s a lot to take in here.

So to help you make sense of each “reason”, here are some quick definitions:

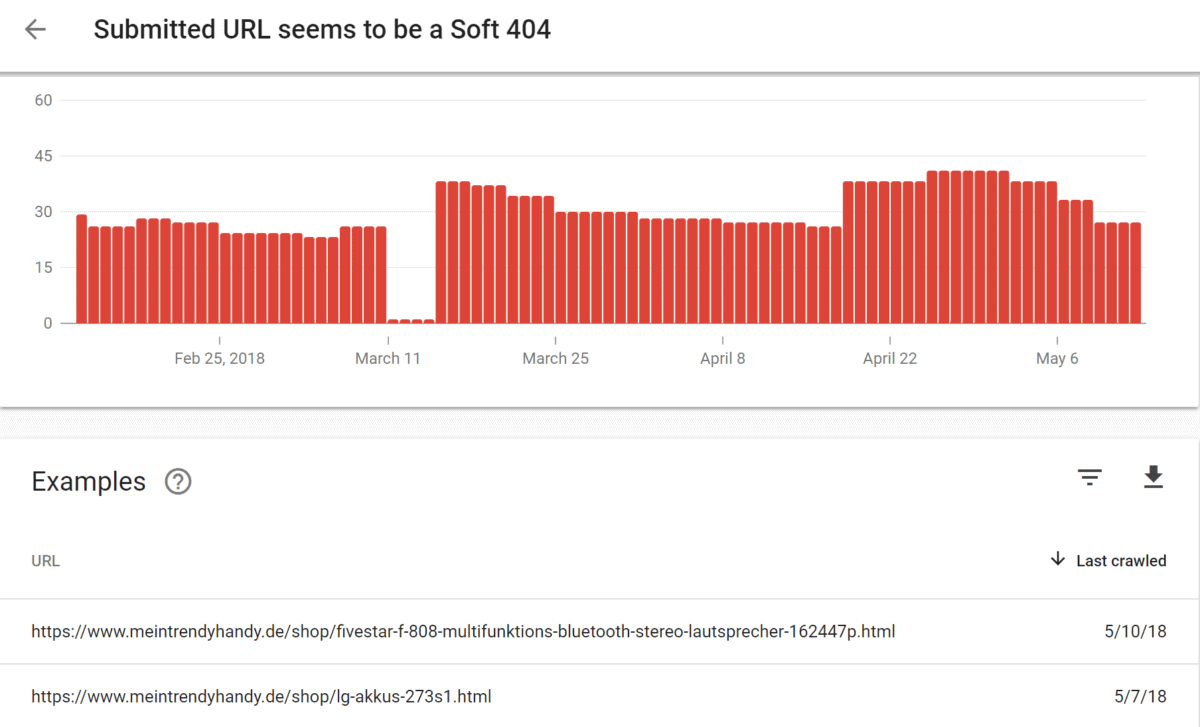

“Submitted URL seems to be a Soft 404”

This means that the page looks like a “not found” page, but the server returned a success status code (like 200 OK) instead of a 404.

(I’ve found this one to be a little buggy)

“Redirect error”

There’s a redirect for this page (301/302).

But it ain’t working.
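A common reason a redirect isn’t working is a loop (page A redirects to page B, which redirects back to A) or a chain that goes on too long. Here’s a small Python sketch that walks a redirect map and flags both. The dictionary stands in for your real redirect rules, and the 10-hop cutoff is an arbitrary setting for this example.

```python
def trace_redirects(start: str, redirects: dict[str, str],
                    max_hops: int = 10) -> tuple[list[str], str]:
    """Follow a redirect map from `start`.

    Returns the URLs visited plus a status: "ok" (ends on a URL that doesn't
    redirect), "loop" (comes back to a URL already visited), or "too_long"
    (would need more than `max_hops` redirects).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        if len(path) > max_hops:  # already followed max_hops redirects
            return path, "too_long"
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
    return path, "ok"


# Hypothetical redirect rules: /old is fine, /a and /b point at each other.
redirects = {"/old": "/new", "/a": "/b", "/b": "/a"}
print(trace_redirects("/old", redirects))  # (['/old', '/new'], 'ok')
print(trace_redirects("/a", redirects))    # (['/a', '/b', '/a'], 'loop')
```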

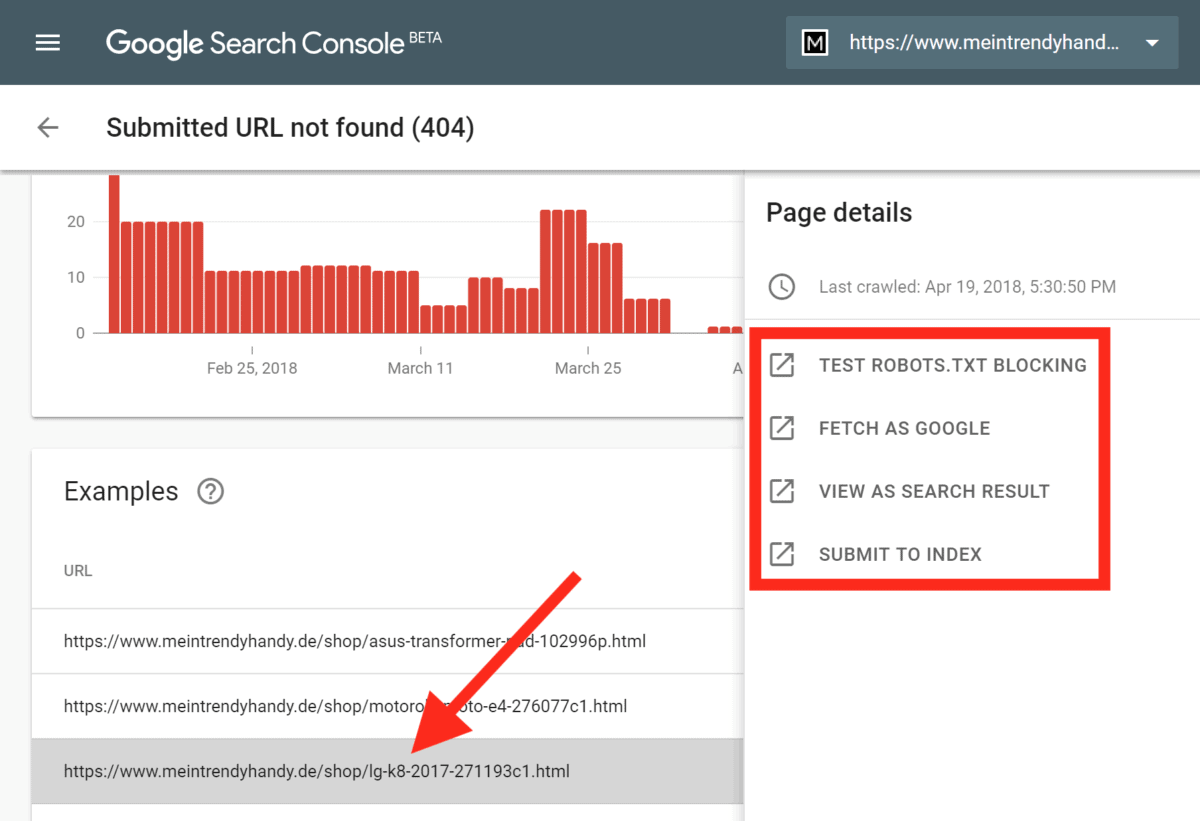

“Submitted URL not found (404)”

The page wasn’t found and the server returned the correct HTTP status code (404).

All good. (Well, if you ignore the fact that the page is broken…)

“Submitted URL has crawl issue”

This could be any of 100 different things.

You’ll have to visit the page to see what’s up.

“Server errors (5xx)”

Googlebot couldn’t access the server. It might have crashed, timed out, or been down when Googlebot stopped by.
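To make the soft 404 vs. real 404 distinction concrete, here’s a rough Python heuristic you could run over your own crawl data (status code plus page text). The phrase list and the 200-character minimum are assumptions for illustration only. Google doesn’t publish how it detects soft 404s, so this won’t match the Coverage report exactly.

```python
# Hypothetical phrases that often appear on "empty" or missing pages.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "no results found")


def classify_response(status_code: int, body_text: str, min_length: int = 200) -> str:
    """Roughly label a crawled URL, loosely mirroring the Coverage report reasons."""
    if 500 <= status_code <= 599:
        return "server error (5xx)"
    if status_code == 404:
        return "not found (404)"
    if status_code == 200:
        text = body_text.strip().lower()
        looks_missing = any(phrase in text for phrase in NOT_FOUND_PHRASES)
        # A 200 response with "not found" wording or almost no content is a soft 404 candidate.
        if looks_missing or len(text) < min_length:
            return "possible soft 404"
        return "ok"
    return f"other ({status_code})"


print(classify_response(200, "Sorry, page not found"))  # possible soft 404
print(classify_response(404, ""))                        # not found (404)
```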

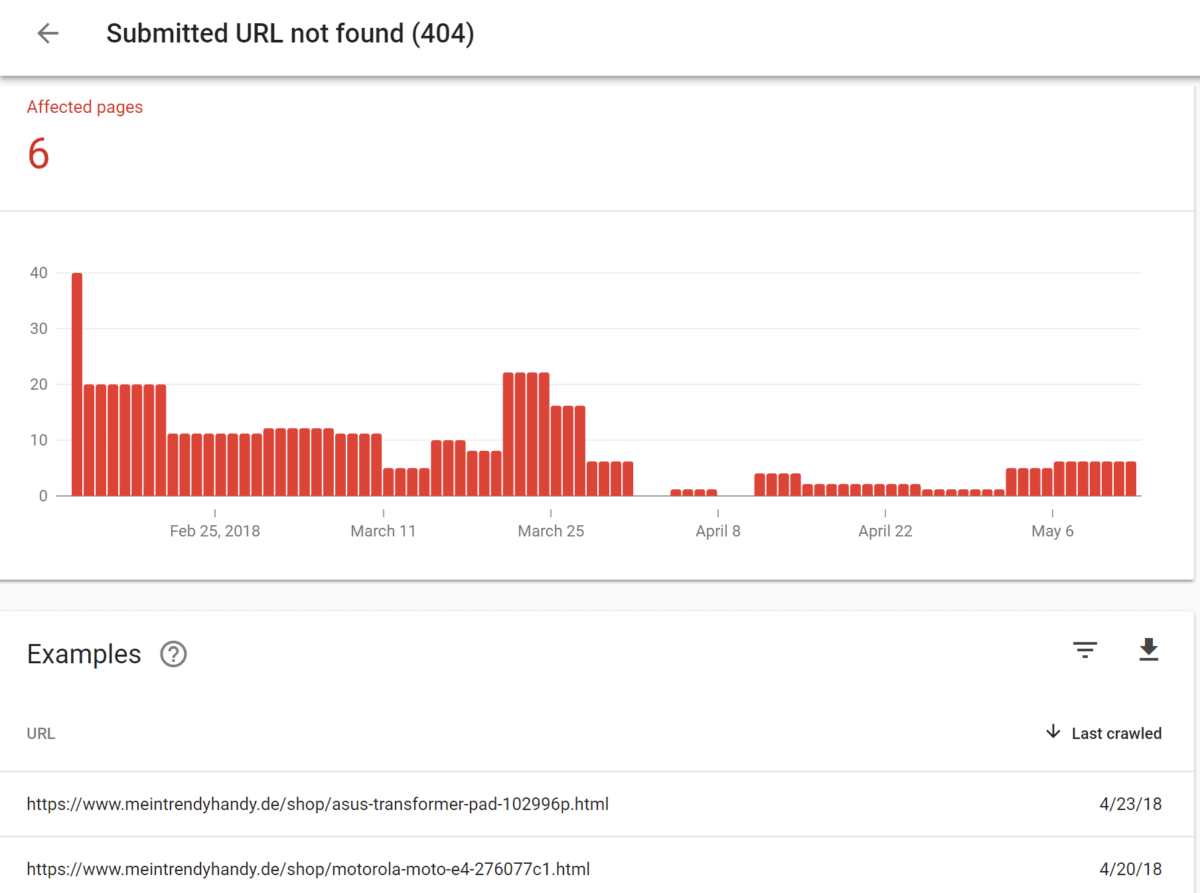

And when you click on an error status, you get a list of pages with that particular problem.

404 errors should be easy to fix. So let’s start with those.

Click a URL on the list. This opens up a side panel with 4 options:

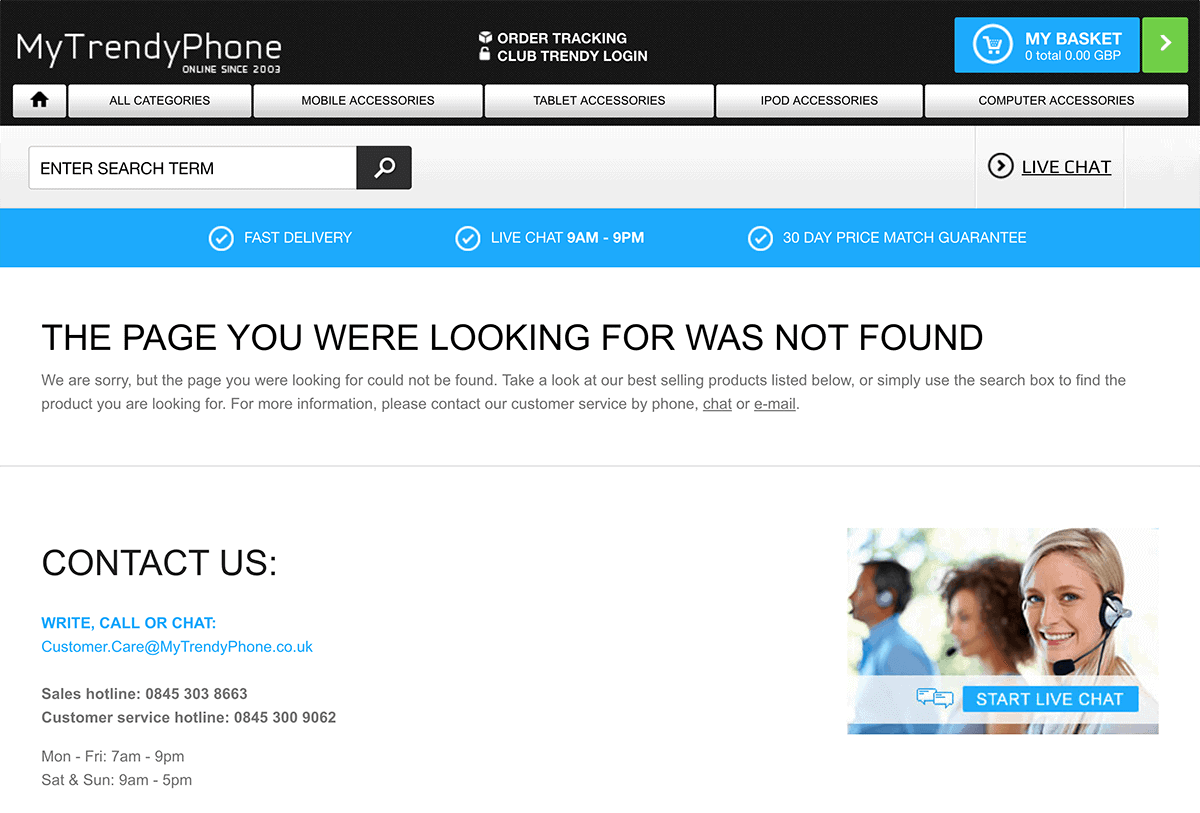

But first, let’s visit the URL with a browser. That way, we can double check that the page is really down.

Yup. It’s down.

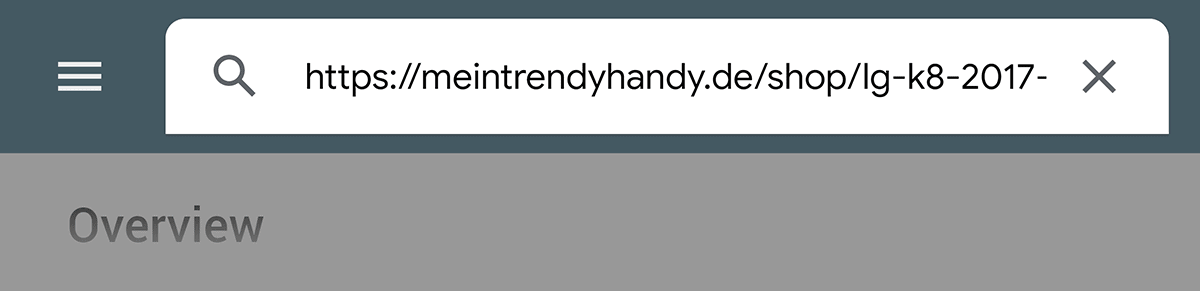

Next, pop your URL into the URL inspection field at the top of the page.

And Googlebot will rush over to your page.

Sure enough, this page is still giving me a 404 “Not found” status.

How do we fix it?

Well, we have two options:

- Leave it as is. Google will eventually deindex the page. This makes sense if the page is down for a reason (like if you don’t sell that product anymore).

- Redirect the 404 page to a similar product page, category page, or blog post.

How to Fix “Soft 404” Errors

Now it’s time to fix these pesky “Soft 404” errors.

Again, check out the URLs with that error.

Then, visit each URL in your browser.

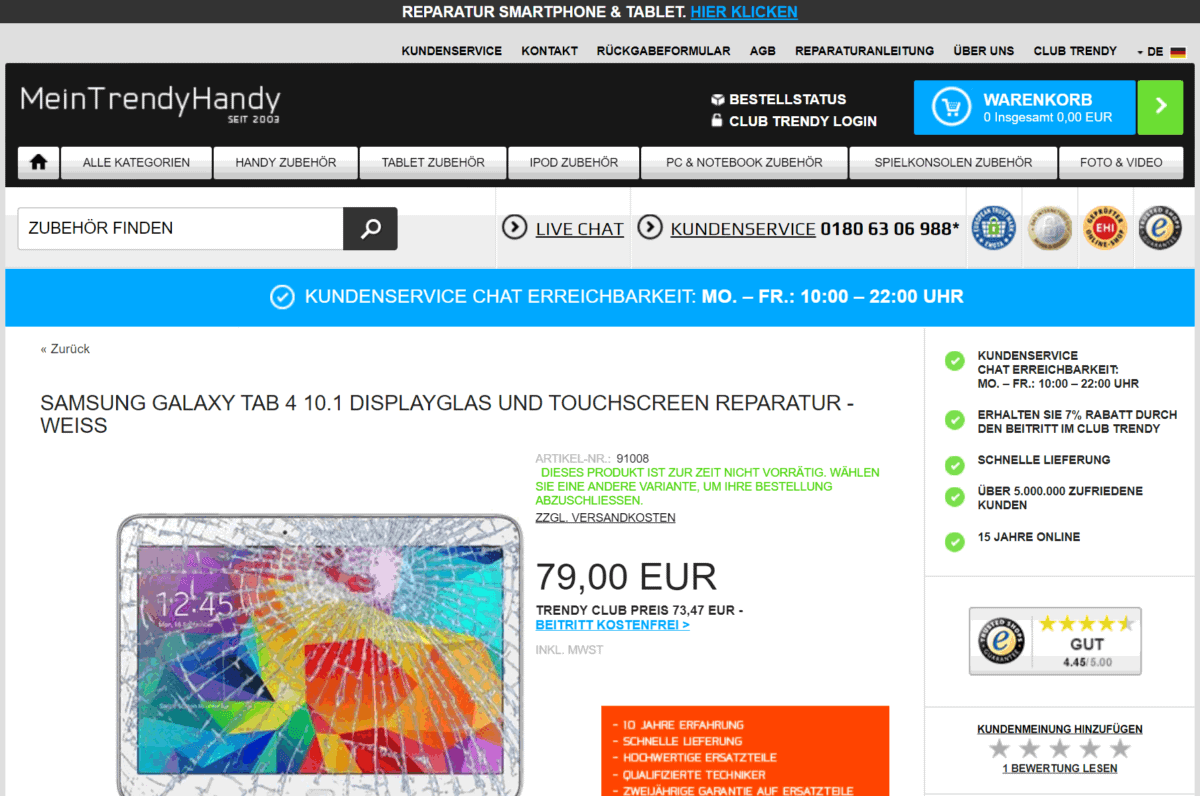

Looks like the first page on the list is loading fine.

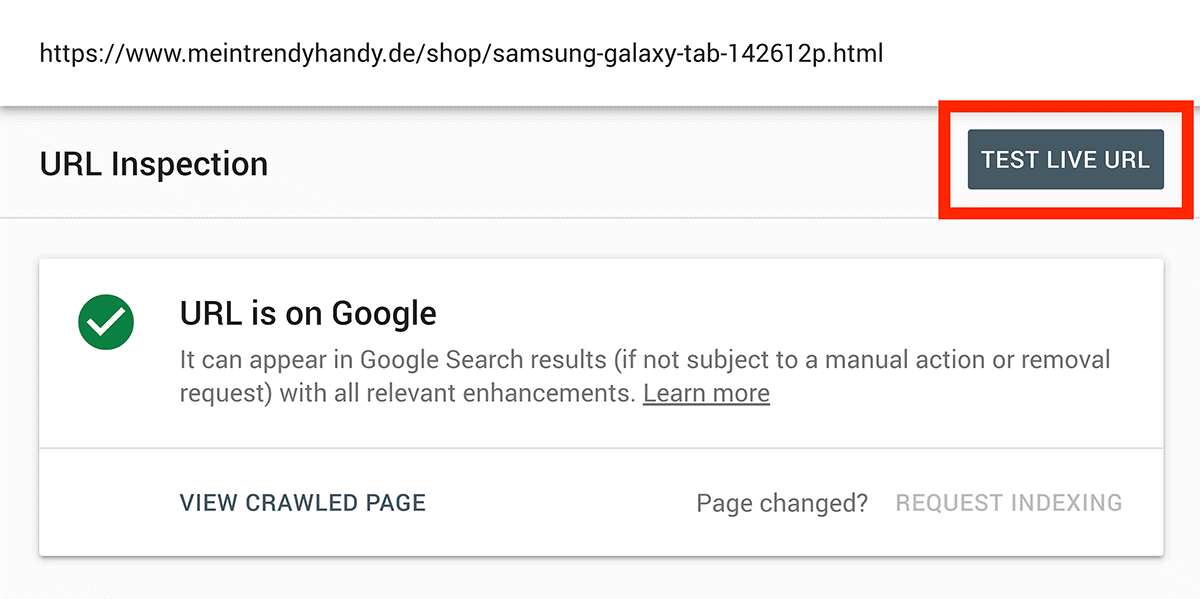

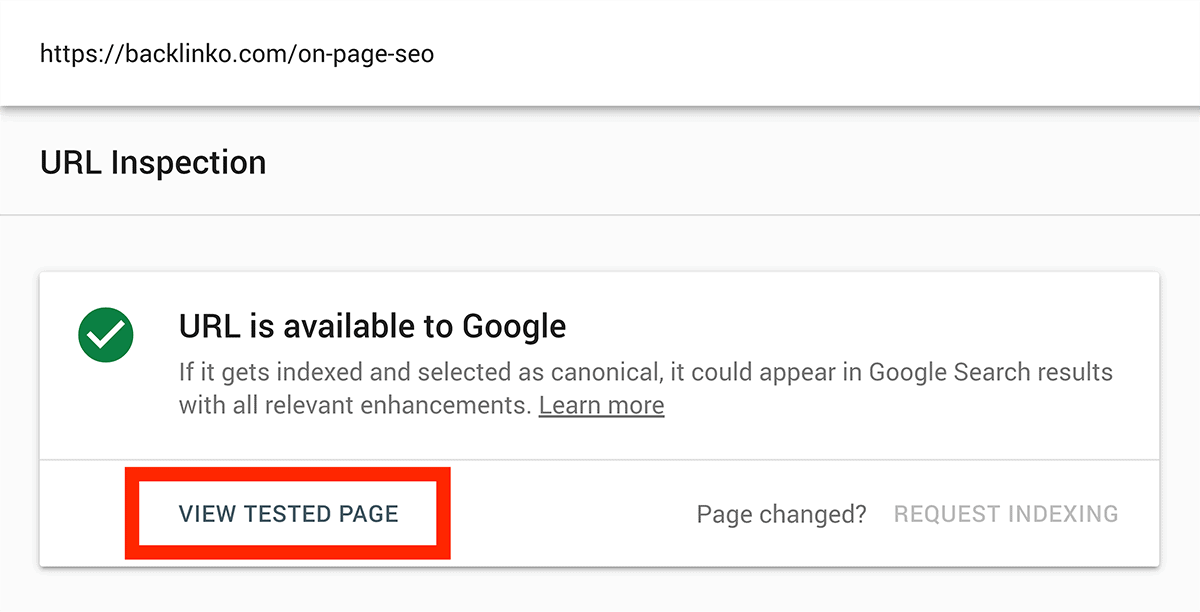

Let’s see if Google can access the page OK. Again, we’ll use the URL Inspection tool.

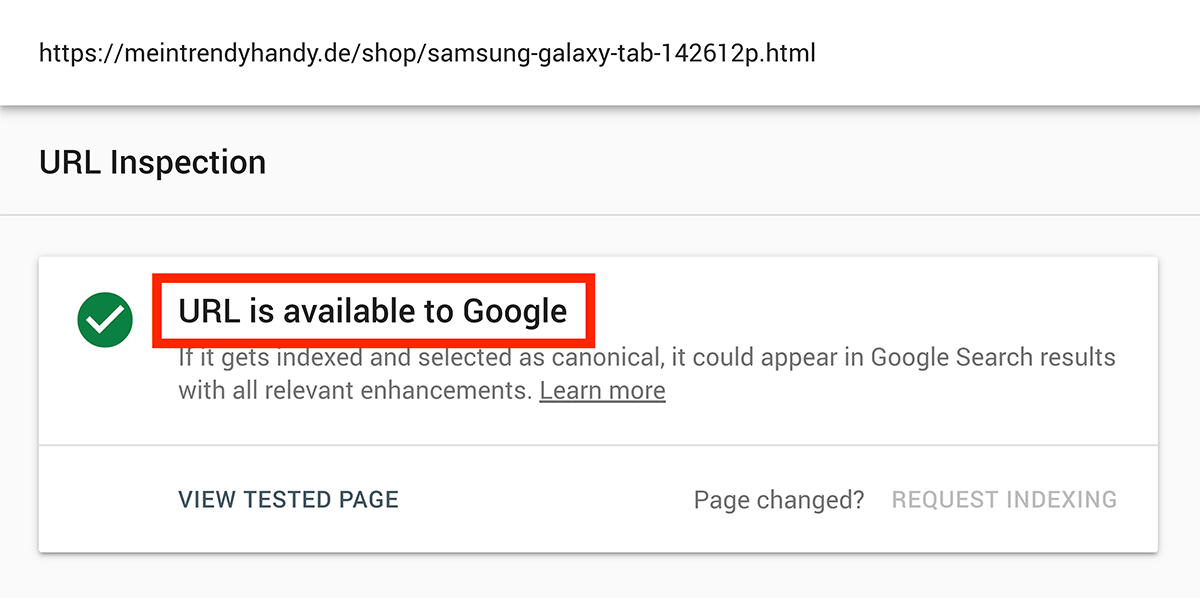

This time we’ll hit the “Test Live URL” button. This sends Googlebot to the page. It also renders the page so you can see it the way Googlebot sees it.

Looks like Google found the page this time.

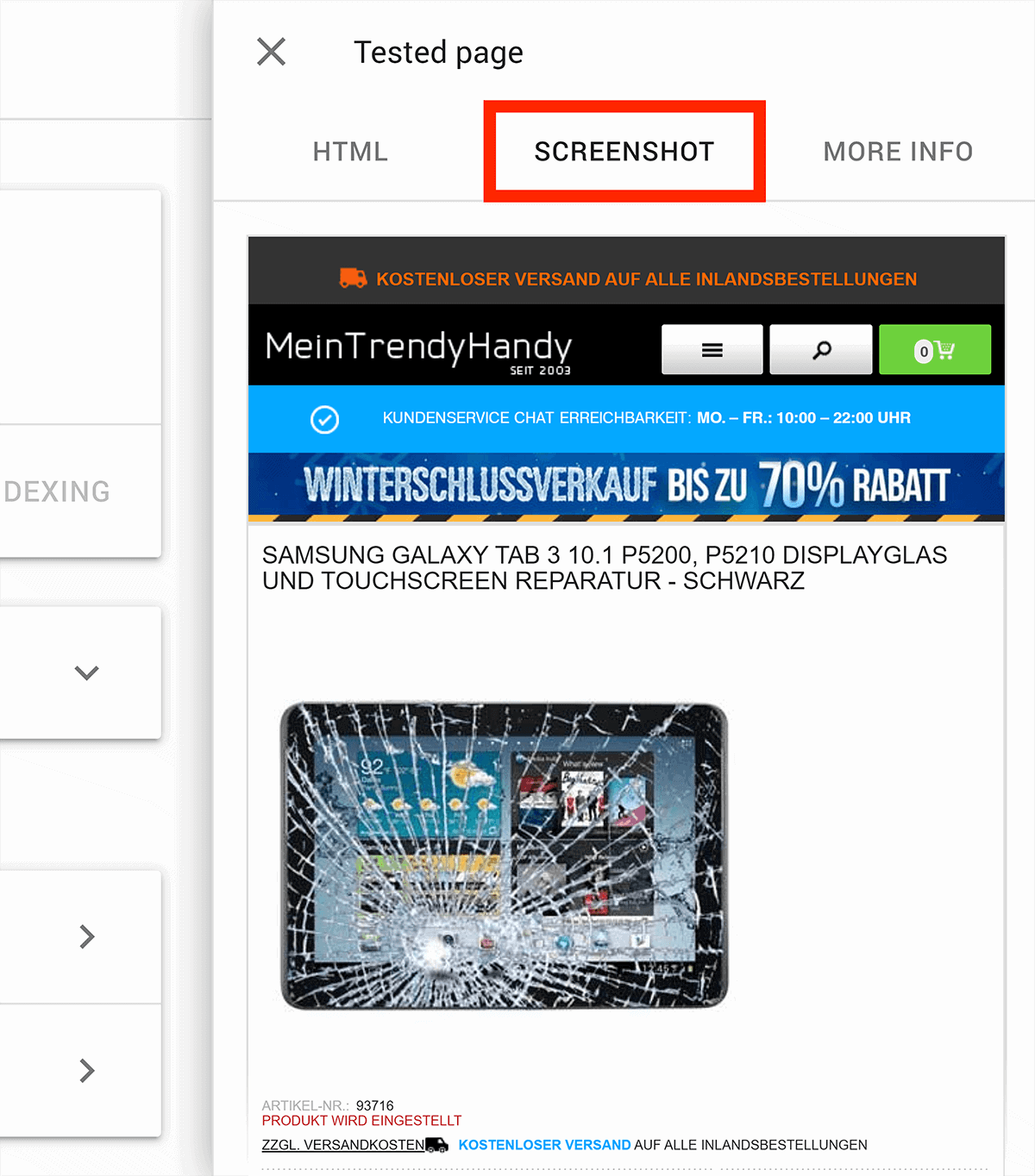

Now let’s see how Google rendered the page. Click “View Tested Page”, then the “Screenshot” tab:

Looks pretty much the same as how visitors see it. That’s good.

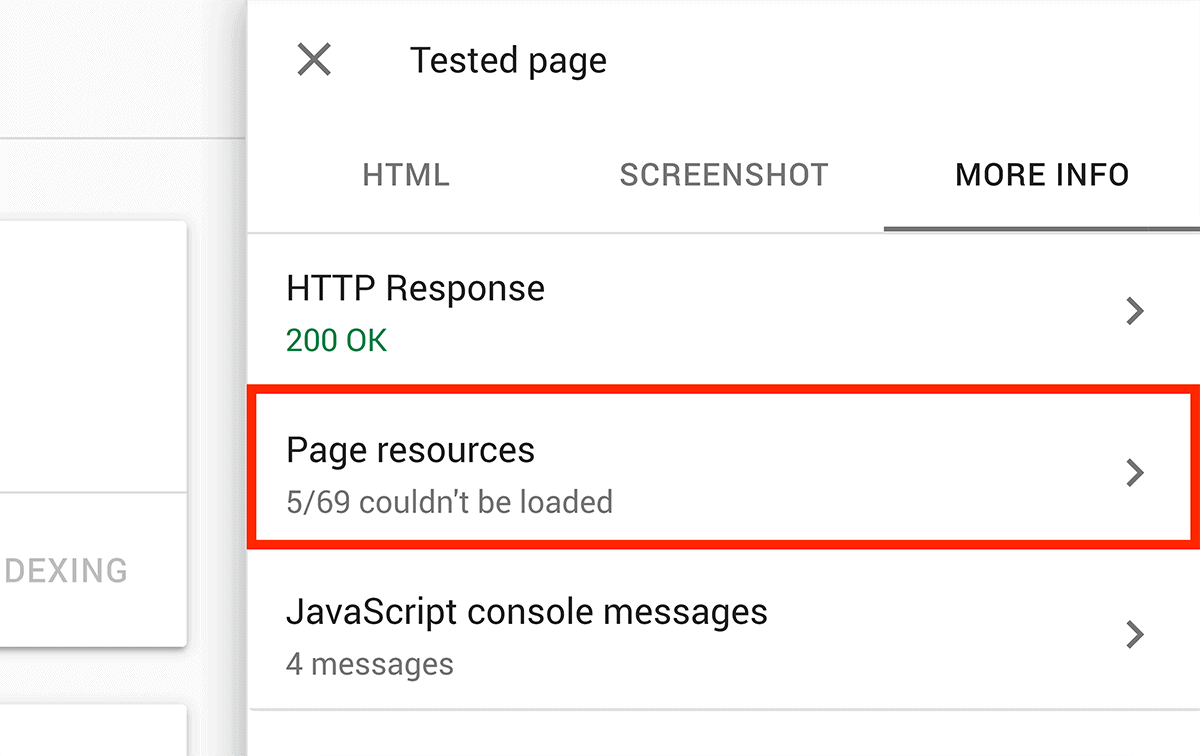

Next, click the “More Info” tab, and check for any page resources that Google wasn’t able to load correctly.

Sometimes there’s a good reason to block certain resources from Googlebot. But sometimes these blocked resources can lead to soft 404 errors.

In this case though, these 5 things are all meant to be blocked.

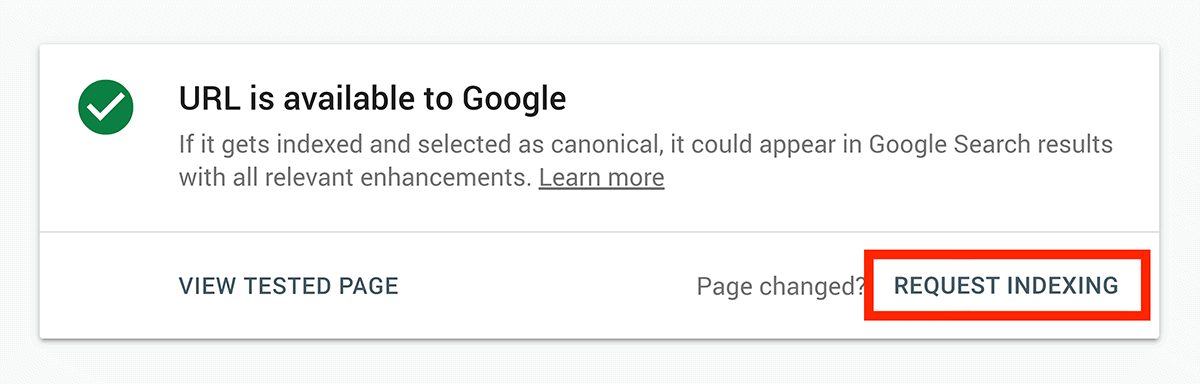

Once you’ve made sure any indexing errors are resolved, click the “Request Indexing” button:

This tells Google to index the page.

The next time Googlebot stops by, the page should get indexed.

How to Fix Other Errors

You can use the same exact process I just used for “Soft 404s” to fix any error you run into:

- Load up the page in your browser

- Plug the URL into “URL Inspection”

- Read over the specific issues that the GSC tells you about

- Fix any issues that crop up

Here are a few errors you can tackle this way:

- Redirect errors

- Crawl errors

- Server errors

Bottom line? With a bit of work, you can fix pretty much any error that you run into in the Coverage report.

How to Fix “Warnings” In The Index Coverage Report

I don’t know about you…

…but I don’t like to leave anything to chance when it comes to SEO.

Which means I don’t mess around when I see a bright orange “Warning”.

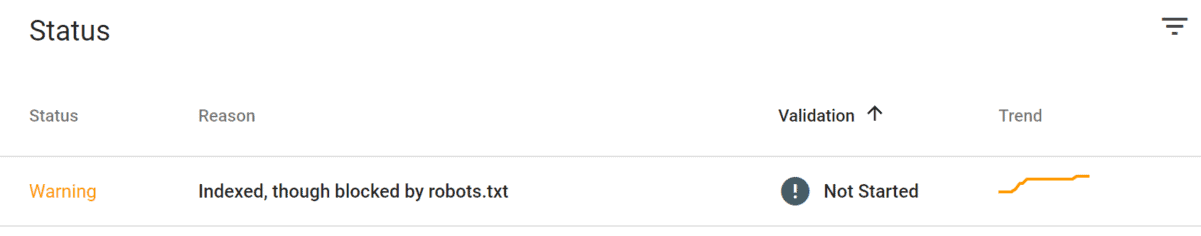

So let’s hit the “Valid with warnings” tab in the Index Coverage Report.

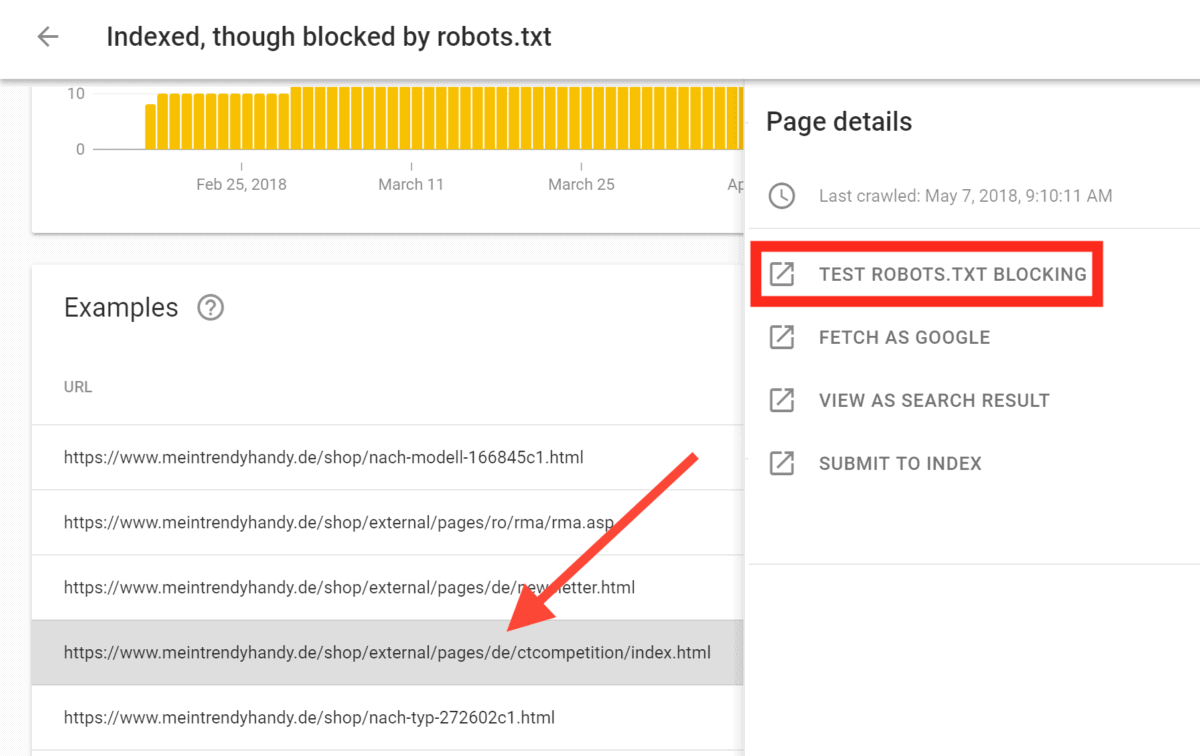

This time there’s just one warning: “Indexed, though blocked by robots.txt”.

So what’s going on here?

Let’s find out.

The GSC is telling us the page is getting blocked by robots.txt. So instead of hitting “Fetch As Google”, click on “Test Robots.txt Blocking”:

This takes us to the robots.txt tester in the old Search Console.

As it turns out, this URL IS getting blocked by robots.txt.
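You can run a similar check yourself with Python’s built-in urllib.robotparser module. This sketch parses a sample robots.txt (the rules and URLs are made up) and asks whether Googlebot is allowed to fetch a URL. Python’s parser can disagree with Google’s in edge cases such as wildcard patterns, so treat it as a quick first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
"""


def is_blocked(url: str, robots_txt: str = ROBOTS_TXT, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules stop `user_agent` from crawling `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


print(is_blocked("https://example.com/checkout/cart"))  # True
print(is_blocked("https://example.com/blog/post"))      # False
```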

So what’s the fix?

Well, if you want the page indexed, you should unblock it in robots.txt (duh).

But if you don’t want it indexed, you have two options:

- Add a “noindex,follow” meta robots tag to the page and unblock it in robots.txt (Google can only see the noindex tag if it’s allowed to crawl the page)

- Get rid of the page using the URL Removal Tool
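If you go the noindex route, it’s worth confirming the tag actually made it onto the live page. Here’s a minimal Python sketch that pulls the directives out of a page’s meta robots (or meta googlebot) tags. The sample HTML is hypothetical, and this only checks the HTML: it ignores the X-Robots-Tag HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return the set of meta robots directives found in the HTML."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


page = '<head><meta name="robots" content="noindex, follow"></head>'
print(sorted(robots_directives(page)))  # ['follow', 'noindex']
```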

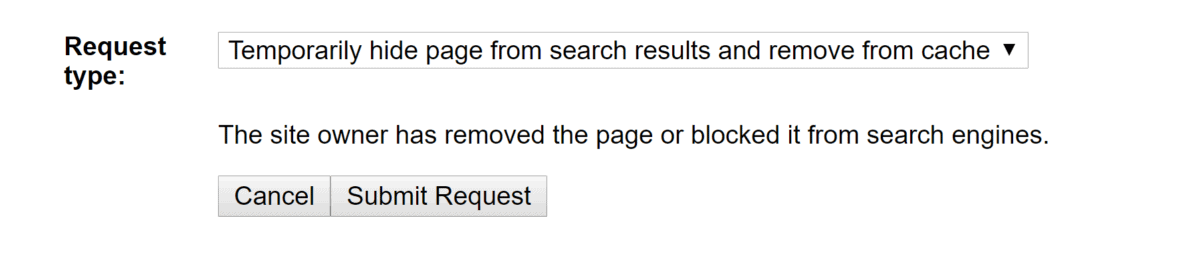

Let’s see how to use the URL Removal Tool:

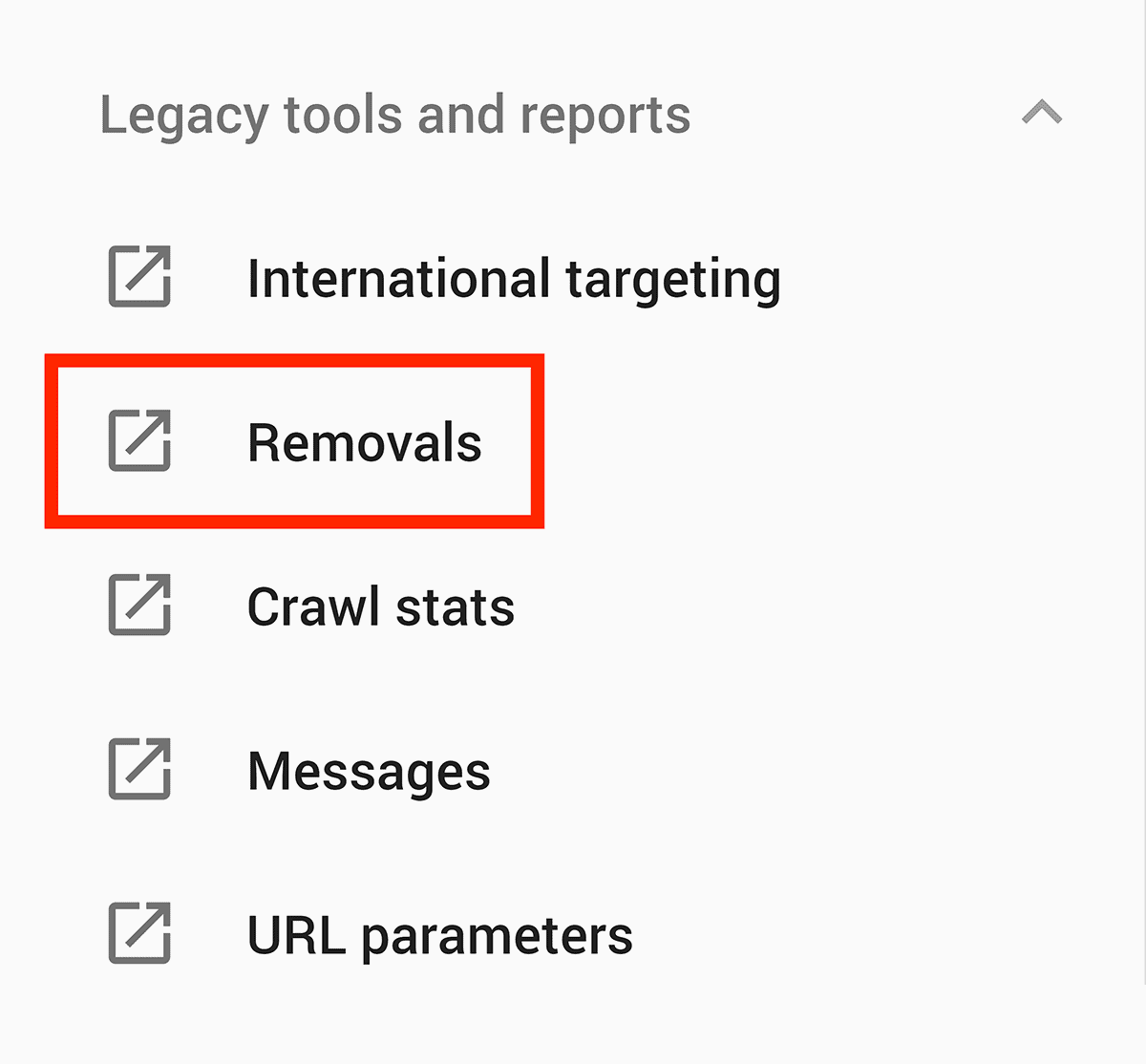

How To Use The URL Removal Tool In Search Console

The URL Removal Tool is a quick and easy way to remove pages from Google’s index.

Unfortunately, this tool hasn’t moved over to the new Google Search Console yet. So you’ll need to use the old GSC to use it.

Expand the “Legacy tools and reports” tab in the new GSC sidebar, then click “Removals”, where you’ll be taken to the old GSC.

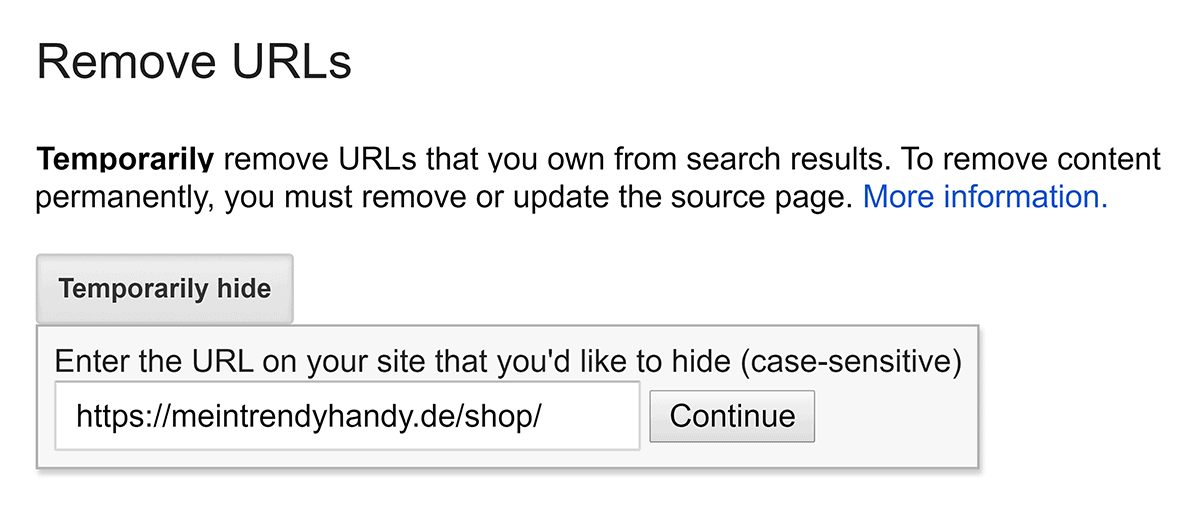

Finally, paste in the URL you want to remove:

Double- and triple-check that you entered the right URL, then click “Submit Request”.

Note: A removal is only active for 90 days. After that Googlebot will attempt to recache the page.
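To know when a removal will lapse, just add 90 days to the date you submitted it. A tiny Python sketch using the 90-day window mentioned above (the request date is only an example):

```python
from datetime import date, timedelta

REMOVAL_WINDOW = timedelta(days=90)  # the 90-day window described above


def removal_expires(requested_on: date) -> date:
    """Return the date a removal submitted on `requested_on` stops being active."""
    return requested_on + REMOVAL_WINDOW


print(removal_expires(date(2024, 1, 1)))  # 2024-03-31
```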

But considering the page is blocked through robots.txt…

…this page will be gone for good!

Check Indexed Pages For Possible Issues

Now let’s move on to the “Valid” tab.

This tells us how many pages are indexed in Google.

What should you look for here? Two things:

Unexpected drop (or increase) in indexed pages

Notice a sudden drop in the number of indexed pages?

That could be a sign that something’s wrong:

- Maybe a bunch of pages are blocking Googlebot.

- Or maybe you added a noindex tag by mistake.

Either way:

Unless you purposely deindexed a bunch of pages, you definitely want to check this out.

On the flip side:

What if you notice a sudden increase in indexed pages?

Again, that might be a sign that something is wrong.

(For example, maybe you unblocked a bunch of pages that are supposed to be blocked).
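If you export the daily count of valid pages, spotting a sudden swing in either direction is easy to automate. Here’s a minimal Python sketch that flags day-over-day changes bigger than a threshold. The 20% threshold and the sample counts are assumptions, so tune them to how much your site normally fluctuates.

```python
def flag_index_changes(daily_counts: list[int], threshold: float = 0.20) -> list[tuple[int, str]]:
    """Return (day_index, "drop" or "increase") for day-over-day changes above `threshold`."""
    flags = []
    for day in range(1, len(daily_counts)):
        previous, current = daily_counts[day - 1], daily_counts[day]
        if previous == 0:
            continue  # skip to avoid dividing by zero; a jump from 0 needs a manual look anyway
        change = (current - previous) / previous
        if change <= -threshold:
            flags.append((day, "drop"))
        elif change >= threshold:
            flags.append((day, "increase"))
    return flags


counts = [40, 41, 41, 25, 26, 60]  # hypothetical daily "Valid" counts
print(flag_index_changes(counts))  # [(3, 'drop'), (5, 'increase')]
```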

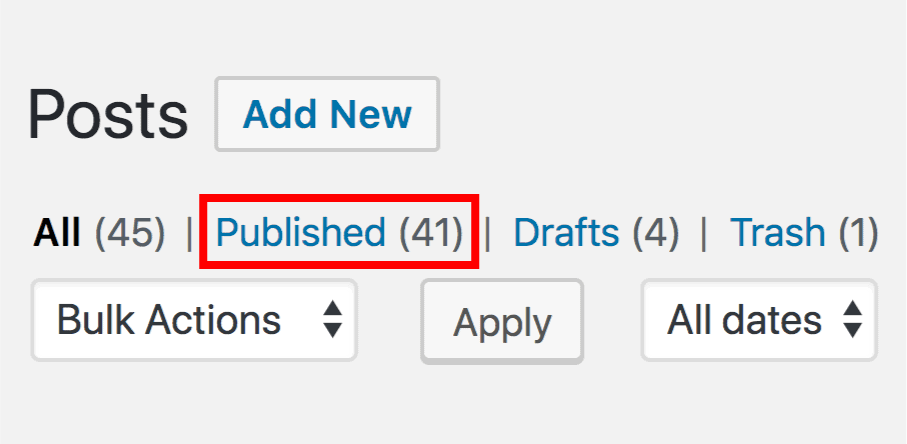

An unexpectedly high number of indexed pages

There are currently 41 posts at Backlinko.

So when I take a look at the “Valid” report in Index Coverage, I’d expect to see about that many pages indexed.

But if it’s WAY higher than 41? That’s a problem. And I’m going to have to fix it.

Oh, in case you’re wondering… here’s what I do see:

So no need to worry about me 😉

Make Sure Excluded Stuff Should Be Excluded

Now:

There are plenty of good reasons to block search engines from indexing a page.

Maybe it’s a login page.

Maybe the page contains duplicate content.

Or maybe the page is low quality.

Note: When I say “low quality”, I don’t mean the page is garbage. It could be that the page is useful for users… but not for search engines.

That said:

You definitely want to make sure Google doesn’t exclude pages that you WANT indexed.

In this case, we have a lot of excluded pages…

And if you scroll down, you get a list of reasons that each page is excluded from Google’s index.

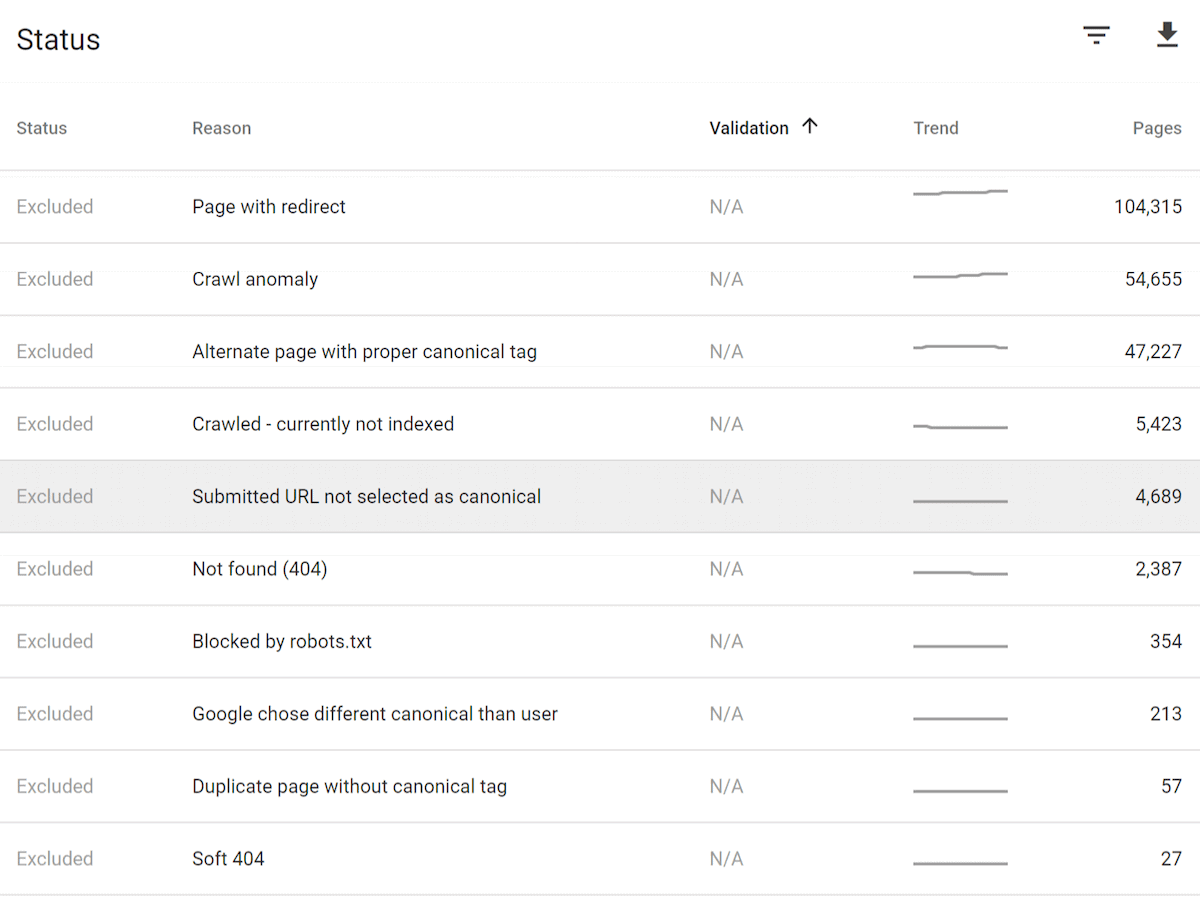

So let’s break this down…

“Page with redirect”

The page is redirecting to another URL.

This is totally fine. Unless there are backlinks (or internal links) pointing to that URL, Google will eventually stop trying to index it.

“Alternate page with proper canonical tag”

Google found an alternative version of this page somewhere else.

That’s what a canonical URL is supposed to do. So that’s A-OK.

“Crawl Anomaly”

Yikes! Could be a number of things. So we’ll need to investigate.

In this case, it looks like the pages listed are returning a 404.

“Crawled – currently not indexed”

Hmmm…

These are pages that Google has crawled, but (for some reason) are not indexed.

Google doesn’t give you the exact reason they won’t index the page.

But from my experience, this error means: the page isn’t good enough to warrant a spot in the search results.

So, what should you do to fix this?

My advice: work on improving the quality of any pages listed.

For example, if it’s a category page, add some content that describes that category. If the page has lots of duplicate content, make it unique. If the page doesn’t have much content on it, beef it up.

Basically, make the page worthy of Google’s index.

“Submitted URL not selected as Canonical”

This is Google telling you:

“This page has the same content as a bunch of other pages. But we think another URL is better”

So they’ve excluded this page from the index.

My advice: if you have duplicate content on a number of pages, add the noindex meta robots tag to all duplicate pages except the one you want indexed.

“Blocked by robots.txt”

These are pages that robots.txt is blocking Google from crawling.

It’s worth double-checking these pages to make sure what you’re blocking is meant to be blocked.

If it’s all good? Then robots.txt is doing its job and there’s nothing to worry about.

“Duplicate page without canonical tag”

The page is part of a set of duplicate pages, and doesn’t include a canonical URL.

In this case it’s pretty easy to see what’s up.

We’ve got a number of PDF documents. And these PDFs contain content from other pages on the site.

Honestly, this isn’t a big deal. But to be on the safe side, you should ask your web developer to block these PDFs using robots.txt. That way, Google ONLY indexes the original content.

“Discovered – currently not indexed”

Google knows about these pages, but hasn’t crawled (or indexed) them yet.

“Excluded by ‘noindex’ tag”

All good. The noindex tag is doing its job.

So that’s the Index Coverage report. I’m sure you’ll agree: it’s a VERY impressive tool.

Chapter 3: Get More Organic Traffic with the Performance Report

In this chapter we’re going to deep dive into my favorite part of the GSC: “The Performance Report”.

Why is it my favorite?

Because I’ve used this report to increase organic traffic to Backlinko again and again.

I’ve also seen lots of other people use the Performance Report to get similar results.

So without further ado, let’s get started…

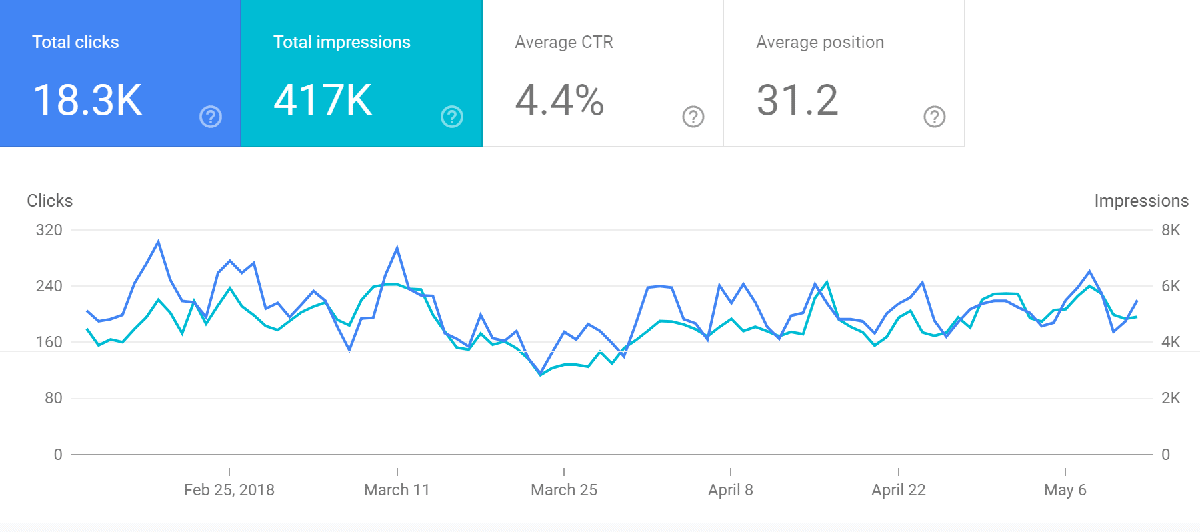

What Is The Performance Report?

The “Performance” report in Google Search Console shows you your site’s overall search performance in Google. This report not only shows you how many clicks you get, but also lets you know your CTR and average ranking position.

And this new Performance Report replaces the “Search Analytics” report in the old Search Console (and the old Google Webmaster Tools).

Yes, a lot of the data is the same as the old “Search Analytics” report. But you can now do cool stuff with the data you get (like filter to only show AMP results).

But my favorite addition to the new version is this:

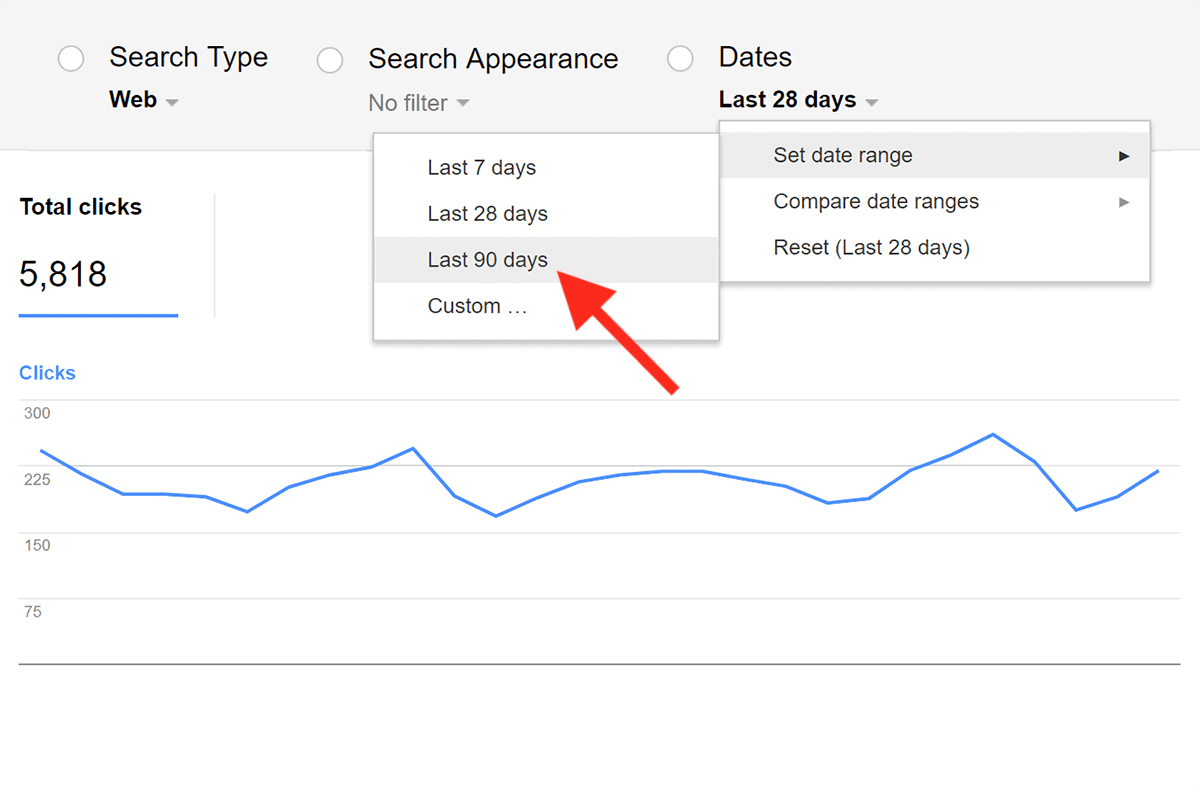

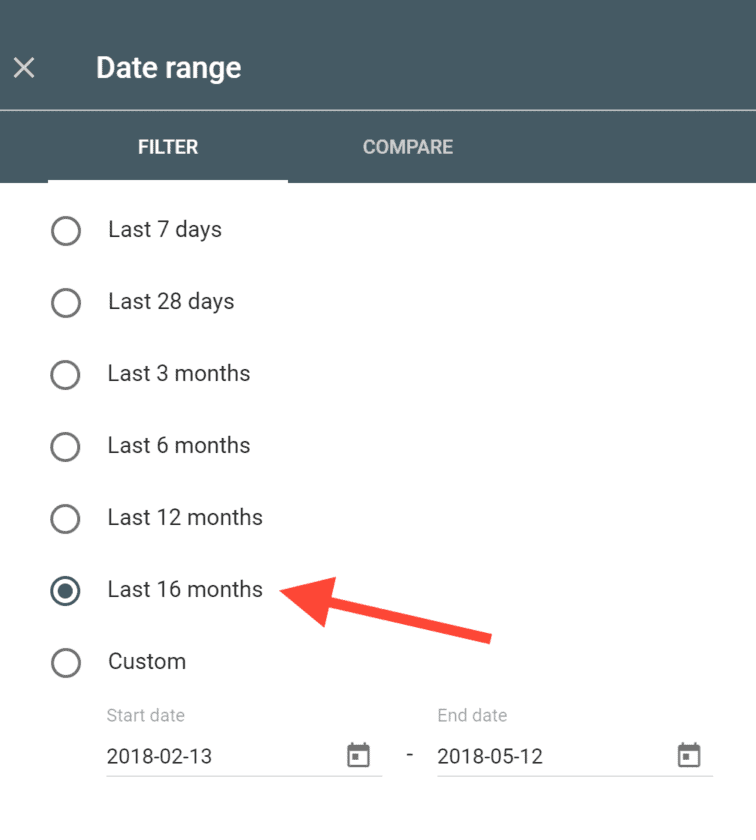

In the old Search Analytics report you could only see search data from the last 90 days.

(Which sucked)

Now?

We get 16 MONTHS of data:

For an SEO junkie like me, 16 months of data is like opening presents on Christmas morning.

(In fact, I used to pay for a tool to automatically pull and save my old Google Webmaster Tools data. Now, thanks to the beta version of the new GSC, it’s a free service)

How To Supercharge Your CTR With The Performance Report

There’s no question that CTR is a key Google ranking factor.

The question is:

How can you improve your CTR?

The GSC Performance Report.

Note: Like I did in the last chapter, I’m going to walk you through a real-life case study.

Last time, we looked at an ecommerce site. Now we’re going to see how to use the GSC to get more traffic to a blog (this one).

Specifically, you’re going to see how I used The Performance Report to increase this site’s CTR by 63.2%.

So let’s fire up the Performance report in the new Search Console and get started…

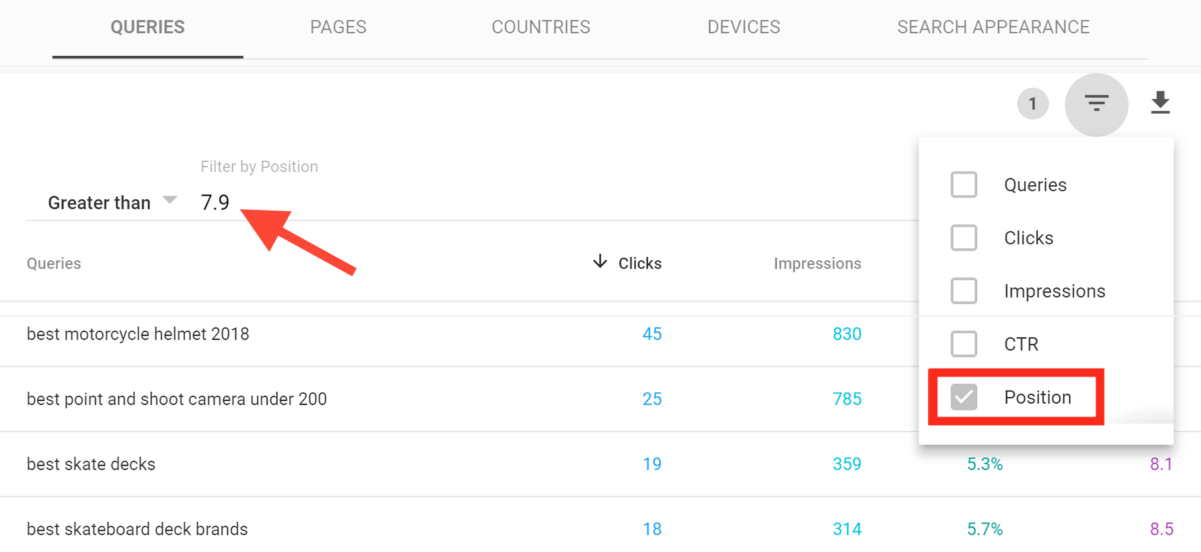

Find Pages With a Low CTR

First, highlight the “Average CTR” and “Average Position” tabs:

You want to focus on pages that are ranking #5 or lower… and have a bad CTR.

So let’s filter out positions 1-4.

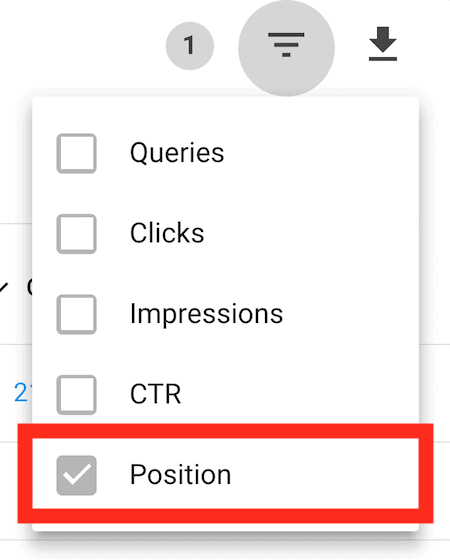

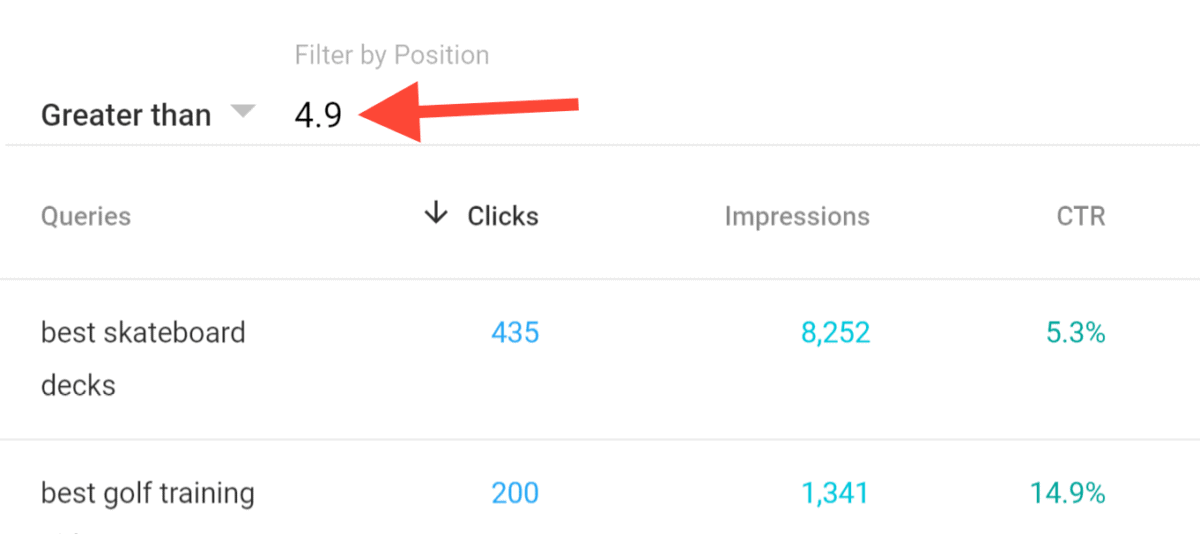

To do that, click on the filter button, and check the “Position” box.

You’ll now see a filter box above the data. So we can go ahead and set this to “Greater than” 4.9:

Now you have a list of queries that are ranking #5 or below.

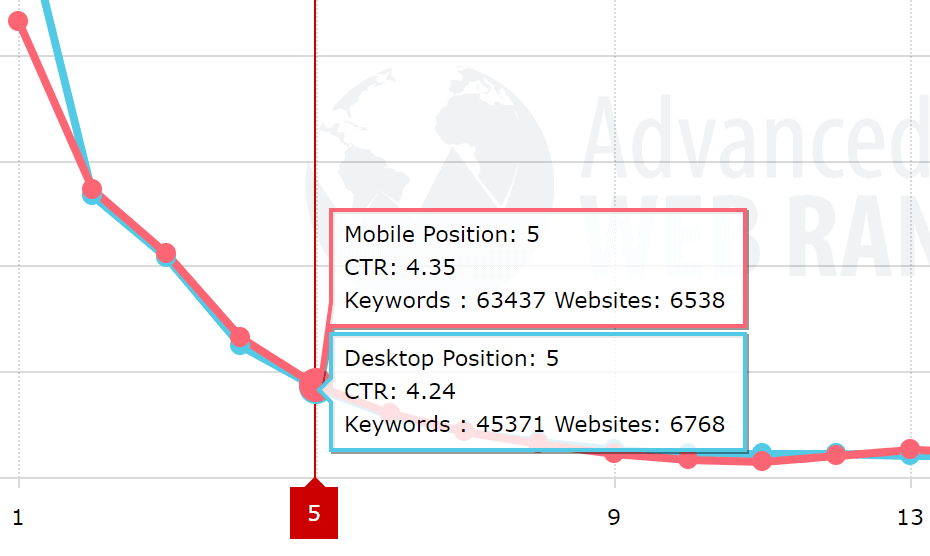

According to Advanced Web Ranking, position #5 in Google should get a CTR of around 4.35%:

You want to filter out everything that’s beating that expected CTR of 4.35%. That way you can focus on pages that are underperforming.

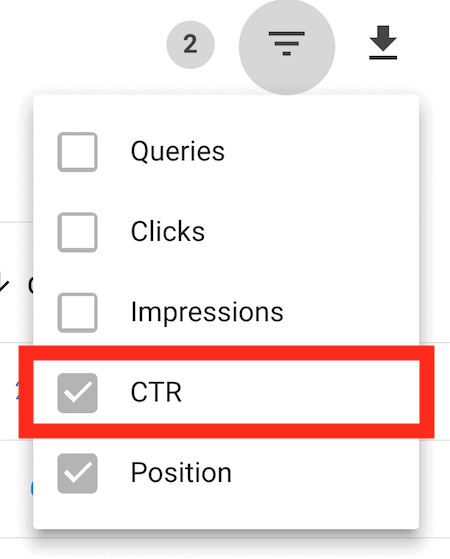

So click the filter button again and check the “CTR” box.

(Make sure you leave the “Position” box ticked)

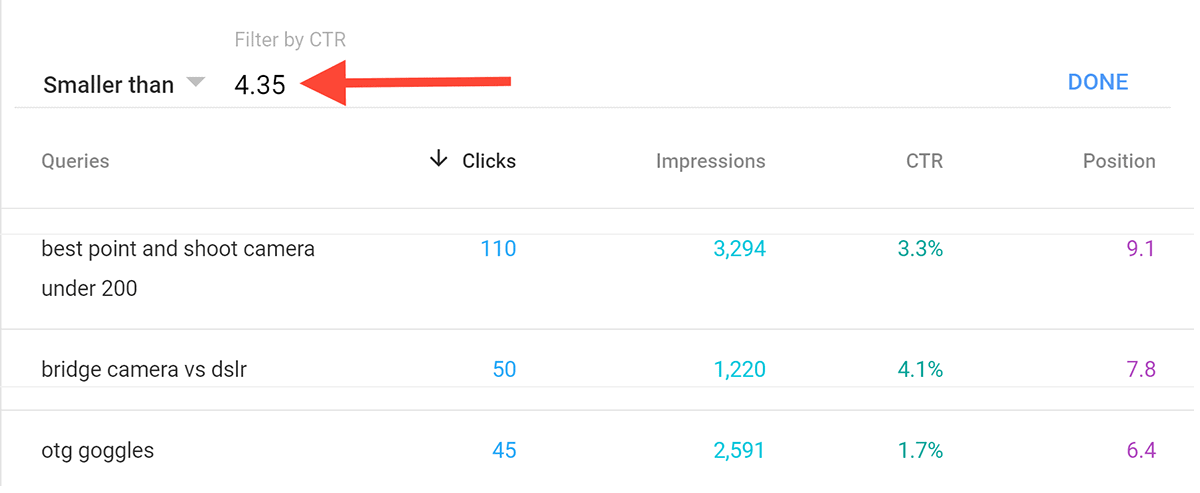

Then, set the CTR filter to “Smaller than” 4.35.

So what have we got?

A list of keywords that are ranking 5 or lower AND have a CTR less than 4.35%.

In other words:

Keywords you could get more traffic from.

We just need to bump up their CTR.
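Those two filters boil down to a simple rule: position greater than 4.9 AND CTR below 4.35%. If you’d rather work on an export of the Performance report, a Python sketch of the same filter might look like this. The field names and sample rows are made up, and CTR is computed as clicks divided by impressions.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int
    position: float  # average position from the report

    @property
    def ctr_percent(self) -> float:
        """CTR as a percentage (clicks / impressions * 100)."""
        return 100 * self.clicks / self.impressions if self.impressions else 0.0


def underperforming(rows: list[QueryRow], position_above: float = 4.9,
                    ctr_below: float = 4.35) -> list[QueryRow]:
    """Same as the report filters: Position greater than 4.9 AND CTR smaller than 4.35%."""
    return [r for r in rows if r.position > position_above and r.ctr_percent < ctr_below]


rows = [
    QueryRow("query a", clicks=90, impressions=1000, position=2.1),
    QueryRow("query b", clicks=20, impressions=1000, position=6.3),
    QueryRow("query c", clicks=80, impressions=1000, position=5.5),
]
print([r.query for r in underperforming(rows)])  # ['query b']
```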

So:

Let’s see if we can find a keyword with a lower-than-expected CTR.

When I scroll down the list… this keyword sticks out like a sore thumb.

1,504 impressions and only 43 clicks… ouch! I know that I can do better than 2.9%.
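For reference, CTR is just clicks divided by impressions, so here’s where that 2.9% comes from:

```latex
\text{CTR} = \frac{\text{clicks}}{\text{impressions}} \times 100\% = \frac{43}{1504} \times 100\% \approx 2.9\%
```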

Now that we’ve found a keyword with a bad CTR, it’s time to turn things around.

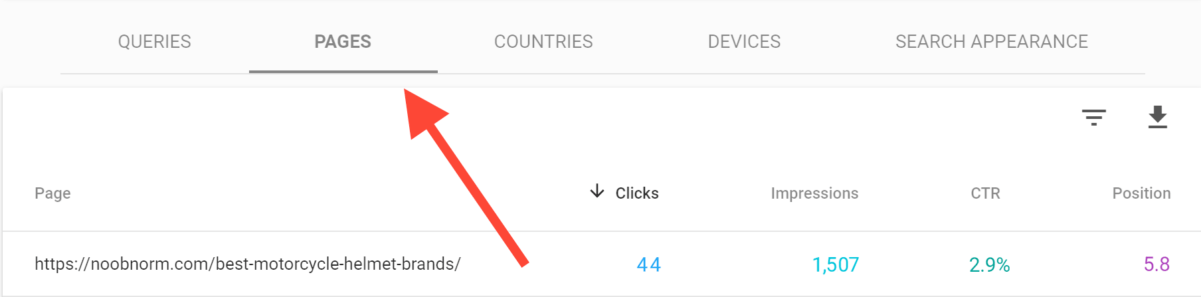

Find the page

Next, you want to see which page from your site ranks for the keyword you just found.

To do that, just click on the query with the bad CTR. Then, click “Pages”:

Easy.

Take a look at ALL the keywords this page ranks for

There’s no point improving our CTR for one keyword… only to mess it up for 10 other keywords.

So here’s something really cool:

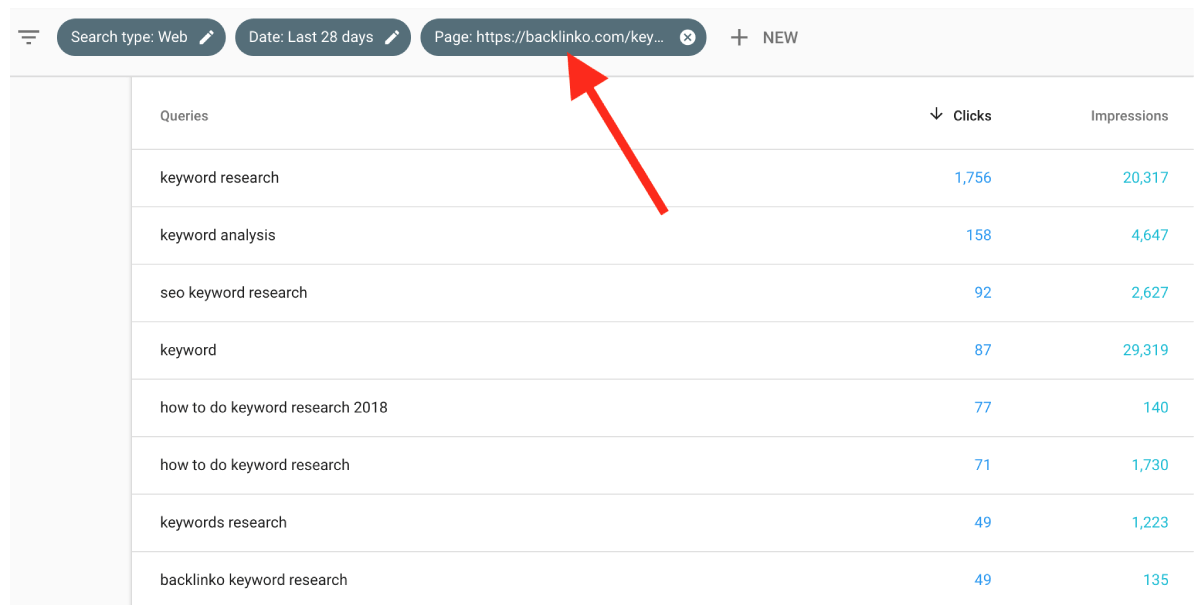

The Performance report can show you ALL keywords that your page ranks for.

And it’s SUPER easy to do.

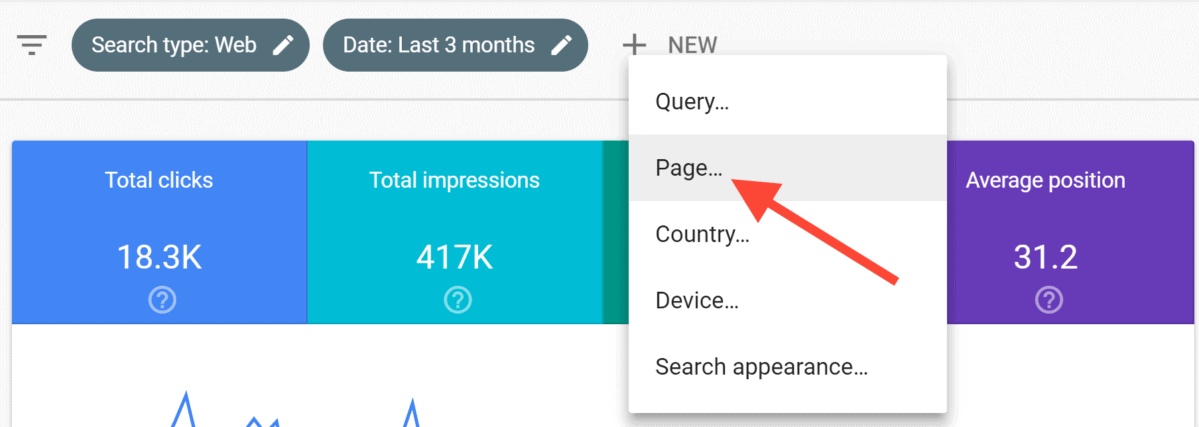

Just click on “+ New” in the top bar and hit “page…”.

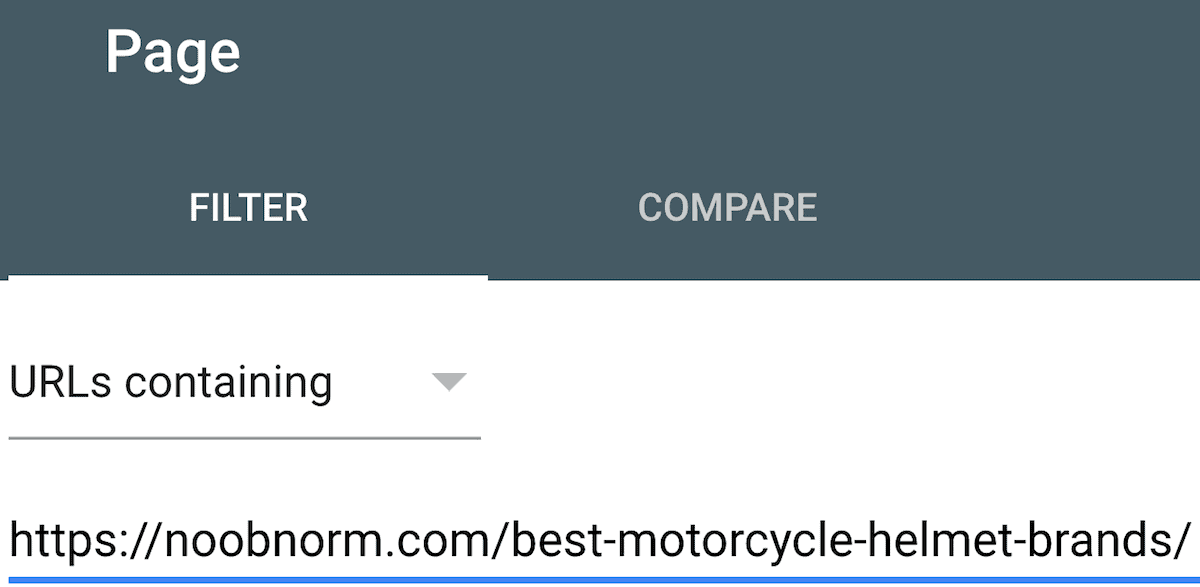

Then enter the URL you want to view queries for.

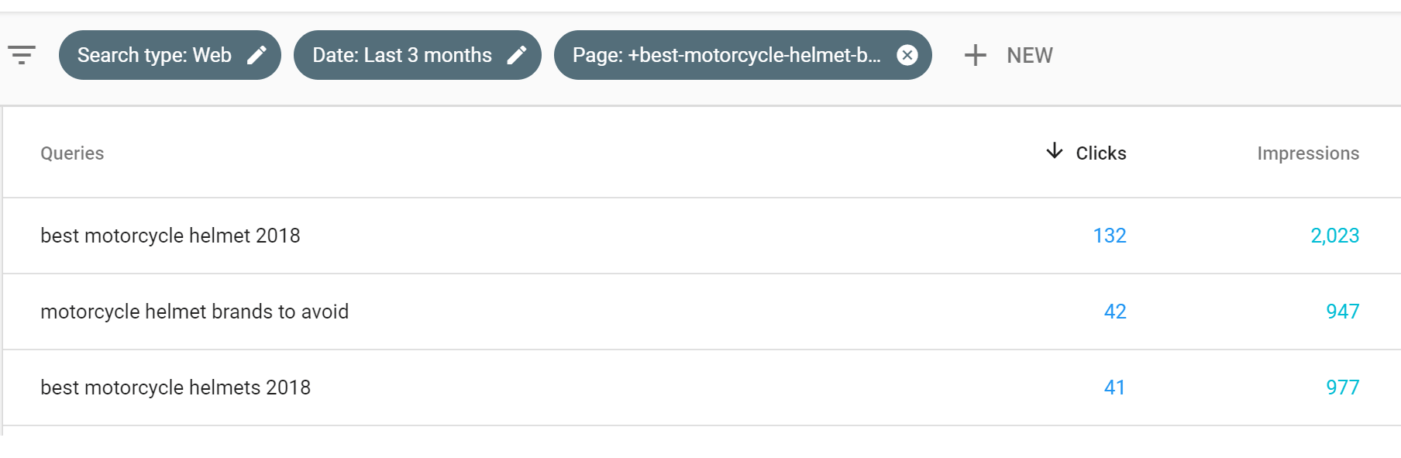

Bingo! You get a list of keywords that page ranks for:

You can see that the page has shown up over 42,000 times in Google… but only got around 1,500 clicks.

So this page’s CTR is pretty bad across the board.

(Not just for this particular keyword)

Optimize your title and description to get more clicks

I have a few go-to tactics that I use to bump up my CTR.

But my all time favorite is: Power Words.

What are power words?

Power words show that someone can get quick and easy results from your content.

And they’ve been proven again and again to attract clicks in the SERPs.

Here are a few of my favorite Power Words that you can include in your title and description:

- Today

- Right now

- Fast

- Works quickly

- Step-by-step

- Easy

- Best

- Quick

- Definitive

- Simple

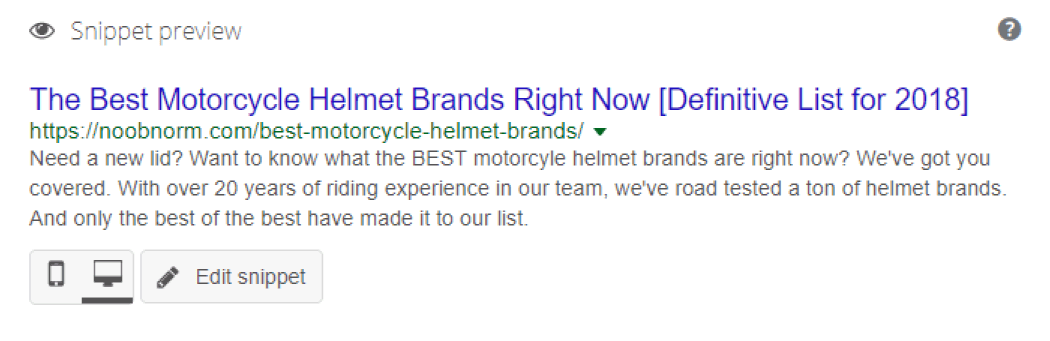

So I added a few of these Power Words to the page’s title and description tag:

Monitor the results

Finally, wait at least 10 days. Then log back in.

Why 10 days?

It can take a few days for Google to reindex your page.

Then, the new page has to be live for about a week for you to get meaningful data.

With that, I have great news:

With the new Search Console, comparing CTR over two date ranges is a piece of cake.

Just click on the date filter:

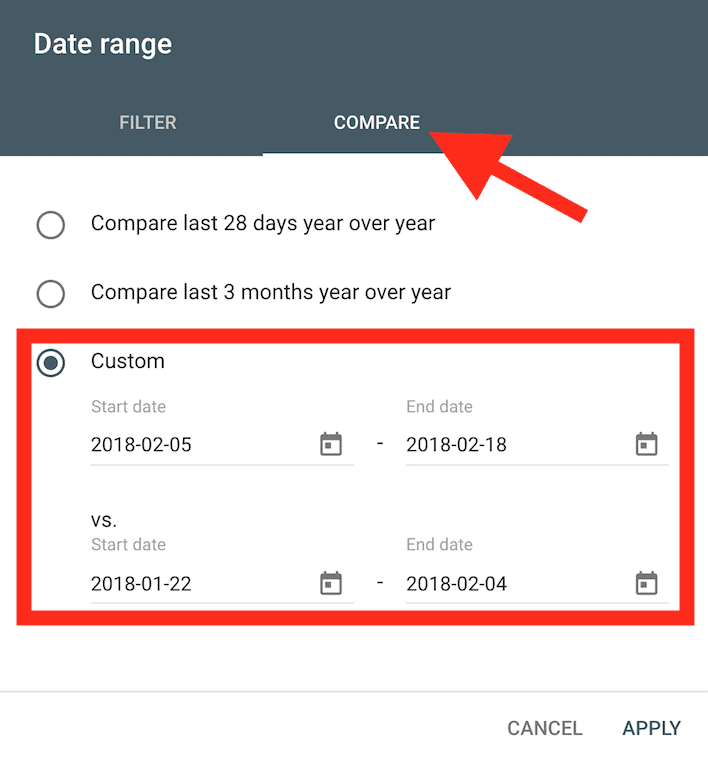

Select the date range. I’m going to compare the 2-week period before the title change to the 2 weeks after:

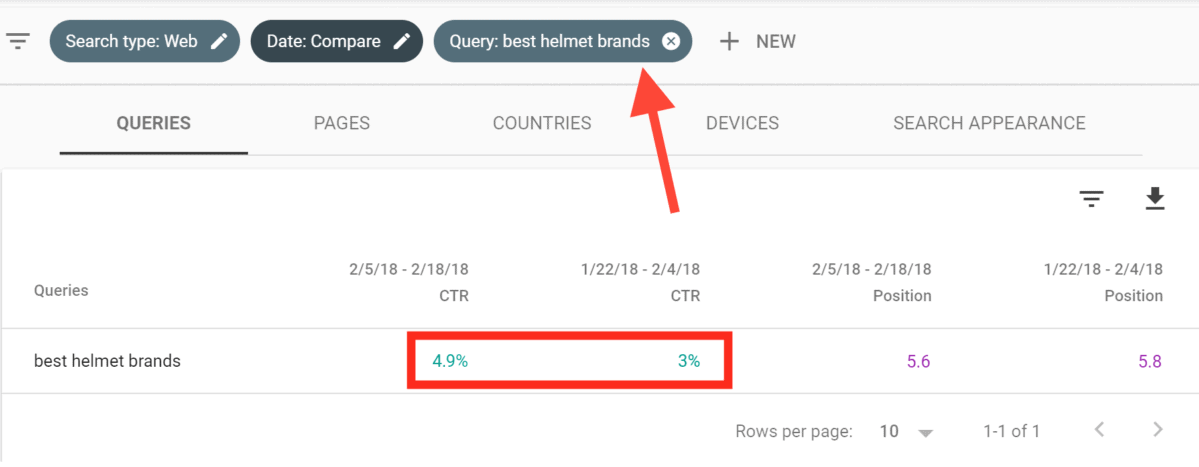

Finally, filter the data to show search queries that include the keyword you found in step #1 (in this case: “best helmet brands”).

Boom!

We’ve increased our CTR by 63.2%. And just as important: we’re now beating the average CTR for position 5.
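Note that a 63.2% increase is a relative change, not a jump of 63.2 percentage points. The formula is:

```latex
\Delta_{\text{CTR}} = \frac{\text{CTR}_{\text{after}} - \text{CTR}_{\text{before}}}{\text{CTR}_{\text{before}}} \times 100\%
```

For example (hypothetical numbers), a CTR that climbs from 2.0% to 3.0% is a 50% increase.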

Pro tip: You’ll find that different title formats work better in different niches. So you might have to experiment to find the perfect format for YOUR industry. The good news: Search Console gives you the data you need to do just that.

How To Find “Opportunity Keywords” With GSC’s Performance Report

If the last example didn’t convince you of just how awesome the new Performance Report is, then I guarantee this one will.

What Is An Opportunity Keyword?

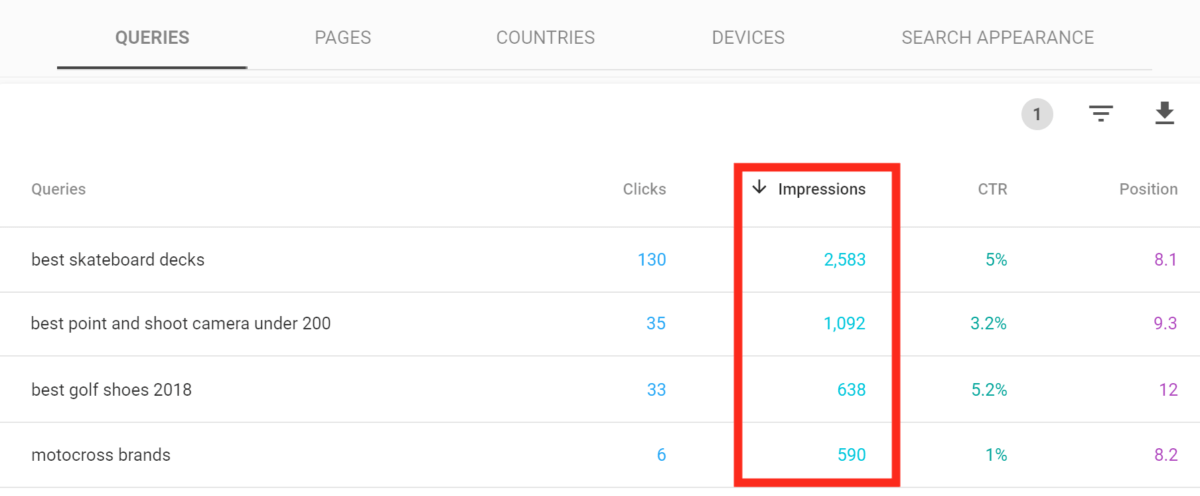

An opportunity keyword is a phrase that ranks between positions 8-20 AND gets a decent number of impressions.

Why is this such a big opportunity?

Google already considers your page to be a decent fit for the keyword (otherwise you wouldn’t be anywhere close to page 1). When you give your page some TLC, you can usually bump it up to the first page.

You’re not relying on iffy keyword volume data from third party SEO tools. The impression data you get from the GSC tells you EXACTLY how much traffic to expect.

Mining For Gold With Google Search Console’s Performance Report

Finding these gold nugget keywords in the Performance report is a simple, 3-step process.

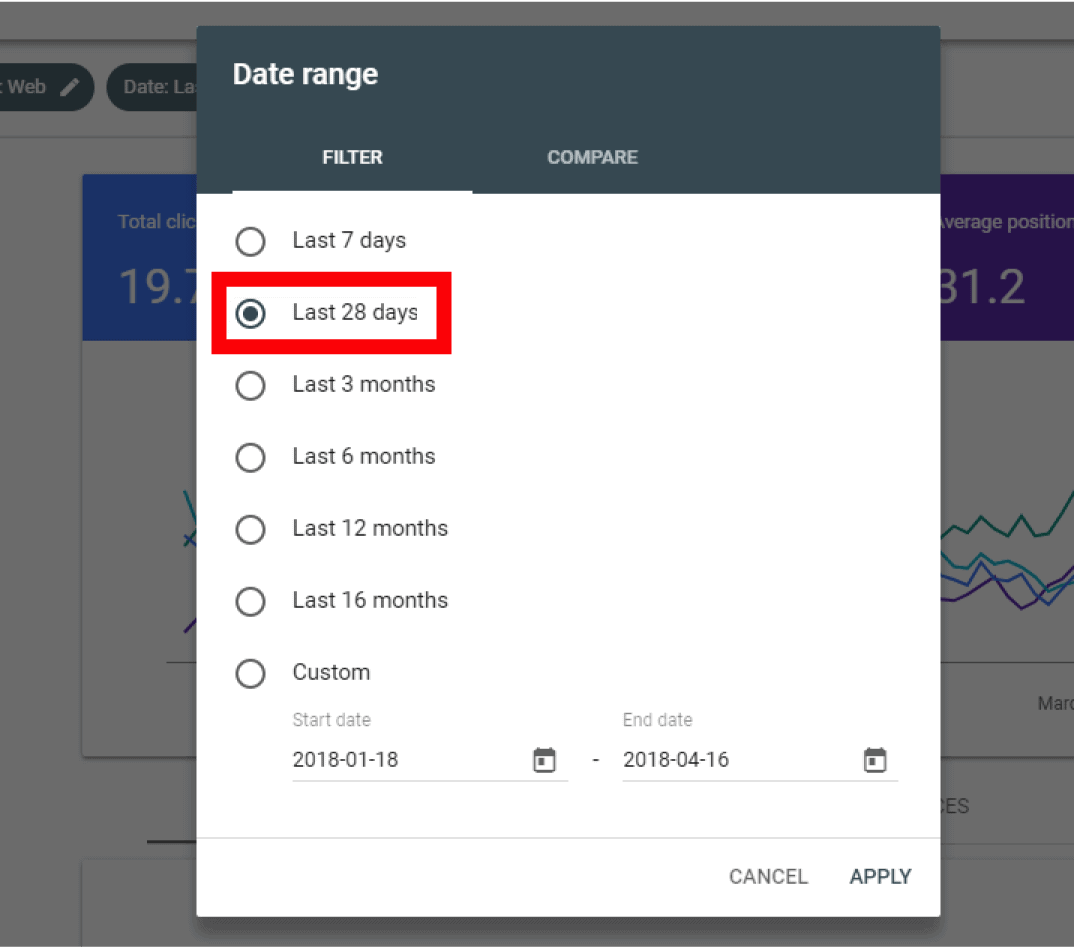

1. Set the date range to the last 28 days:

2. Filter the report to show keywords ranking “Greater than” 7.9

3. Finally, sort by “Impressions”. And you get a huge list of “Opportunity Keywords”:
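Here’s the same 3-step filter as a Python sketch, in case you want to run it on an exported list of queries. The field names and sample rows are hypothetical. The upper bound of position 20 comes from the definition of an opportunity keyword above; the report step itself only sets the lower bound.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    impressions: int
    position: float  # average position


def opportunity_keywords(rows: list[QueryRow], position_above: float = 7.9,
                         position_max: float = 20.0) -> list[QueryRow]:
    """Keep queries ranking above 7.9 and up to 20, sorted by impressions (highest first)."""
    picked = [r for r in rows if position_above < r.position <= position_max]
    return sorted(picked, key=lambda r: r.impressions, reverse=True)


rows = [
    QueryRow("query a", impressions=5000, position=3.2),
    QueryRow("query b", impressions=1200, position=9.4),
    QueryRow("query c", impressions=3400, position=14.0),
    QueryRow("query d", impressions=9000, position=35.0),
]
print([r.query for r in opportunity_keywords(rows)])  # ['query c', 'query b']
```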

Here’s what to do to push those pages up:

Cover The Topic In INSANE Detail

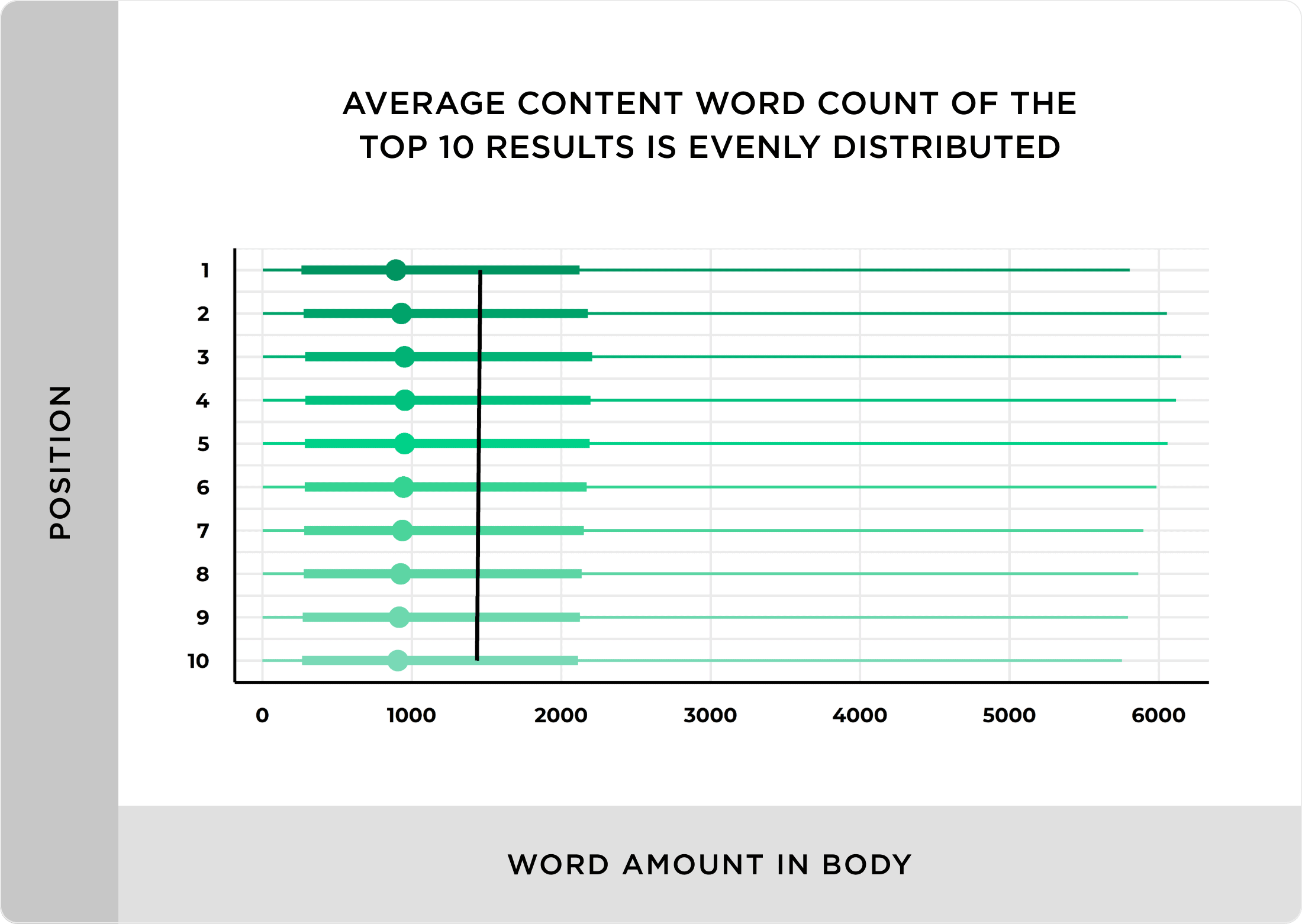

Google LOVES content that covers 100% of a topic.

That’s probably why the average Google top 10 result contains 1,447 words.

So make sure your content is a BEAST. It should cover everything there is to know about your topic.

(Kind of like this guide you’re reading. 🙂 )

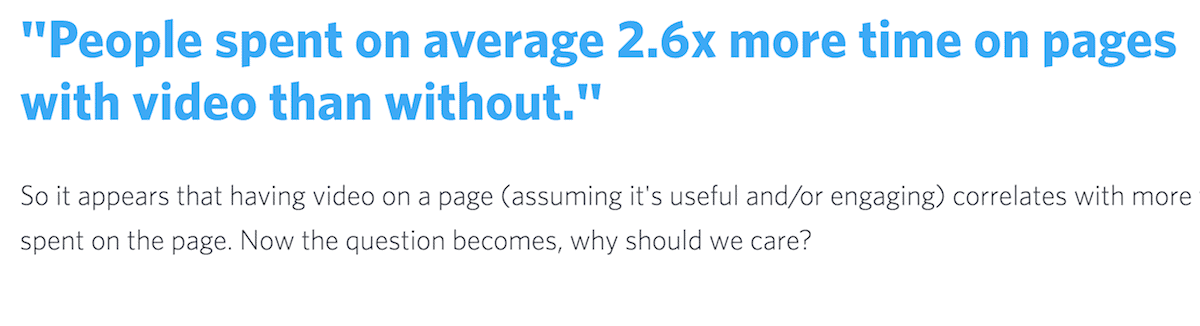

Improve Dwell Time With Video

Dwell time is the amount of time a Google searcher spends on your page.

And when you increase your Dwell Time, you can get higher rankings (thanks to RankBrain).

Now you already made your content super in-depth. So there’s a good chance your Dwell Time is already solid.

But to seal the deal…

Add some videos to your page. Wistia found that visitors spend 2.6x more time on pages with video.

That’s a HUGE difference.

And if you want to learn some other ways to boost Dwell Time, here’s a video that shows you how to do it:

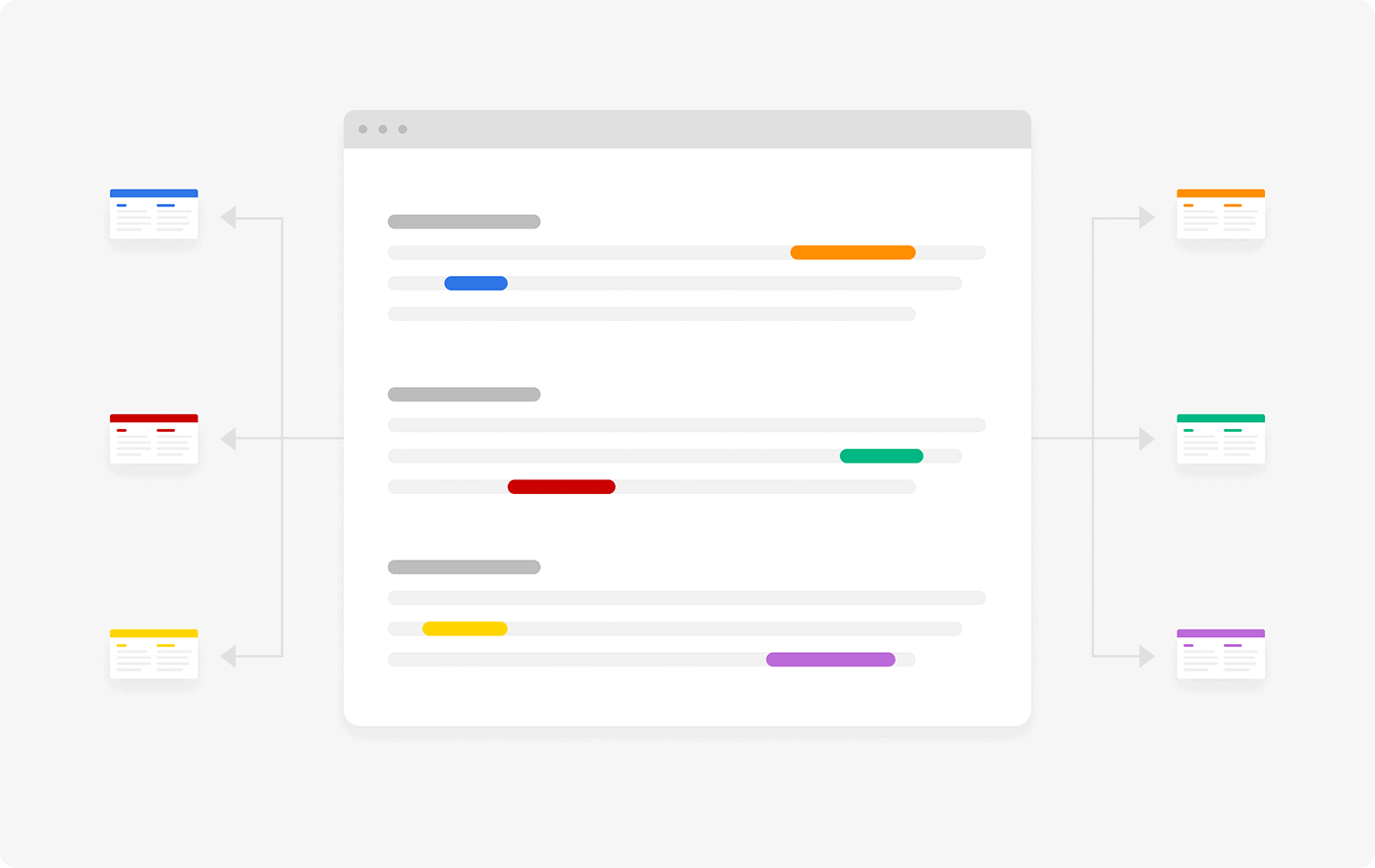

Add Internal Links For An EASY Win

Adding a few internal links to your page can give it a quick (and EASY) boost.

In chapter 4 I’m going to show you how to use Search Console to optimize internal linking.

But for now, just keep in mind that a handful of strategic internal links can quickly boost your rankings.

Grab Some New Backlinks To CRUSH The Competition

Yup, backlinks are still a GIGANTIC part of Google’s algorithm.

Which means:

Building high-quality backlinks to your page can boost its rankings… even if everything else stays exactly the same.

But this isn’t a link building guide. (I have a separate guide for that.)

So make sure to bookmark that guide so you can read it later.

And before we move on to the next chapter, I’ve got two quick bonus tips for you…

Bonus Tip #1: Optimize For Opportunity Keywords In GSC… and Rank For HUNDREDS Of Longtails

In my guide to Google’s RankBrain, I said:

“Long tail keywords are dead.”

And I’m not taking it back.

The days of optimizing 1000 pages around 1000 long tail keywords are long gone.

The good news? You can now get one of your pages to rank for hundreds or even thousands of long tail keywords.

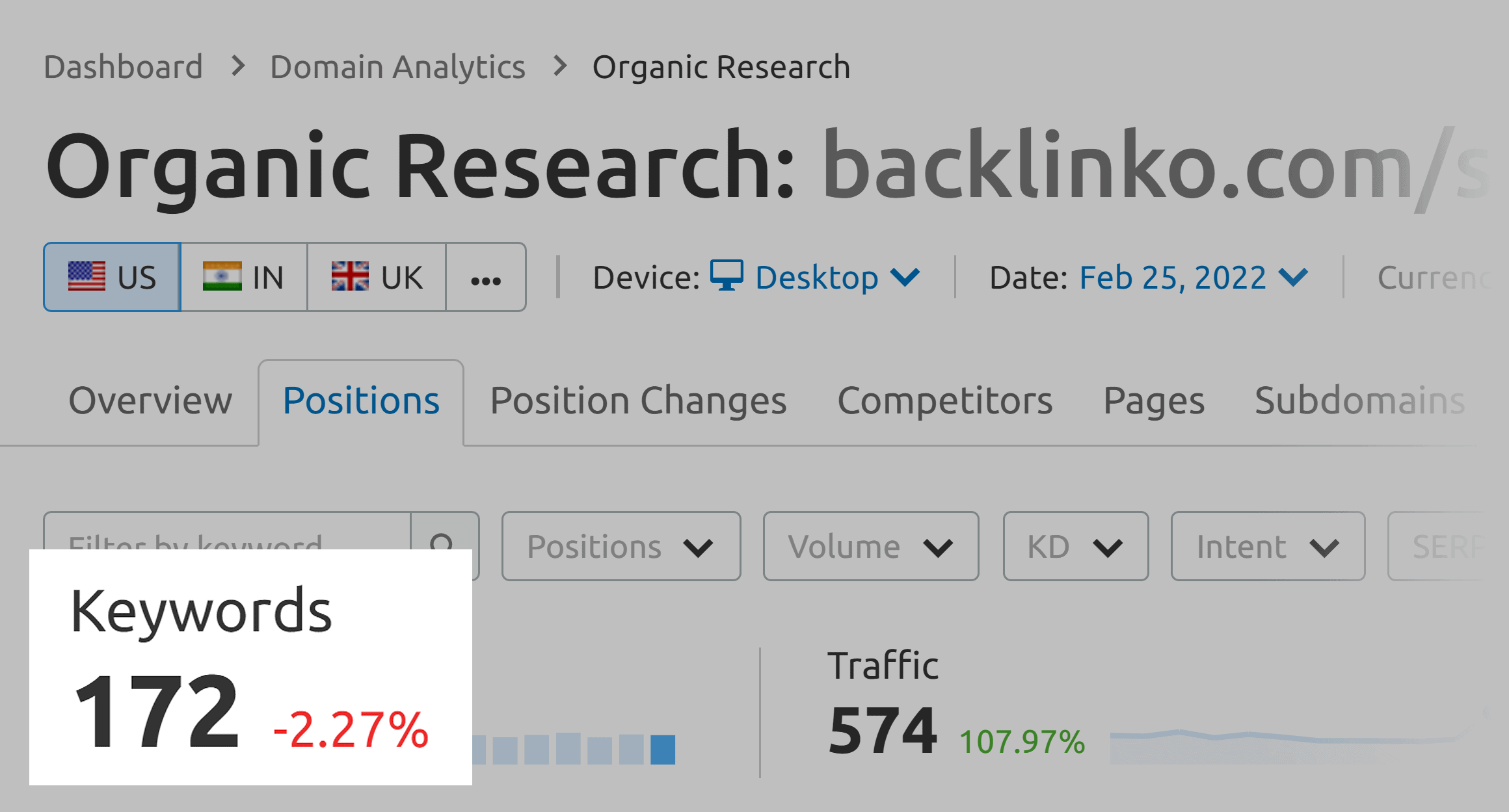

Want proof? According to Semrush, my post on SEO techniques ranks for 172 different keywords…

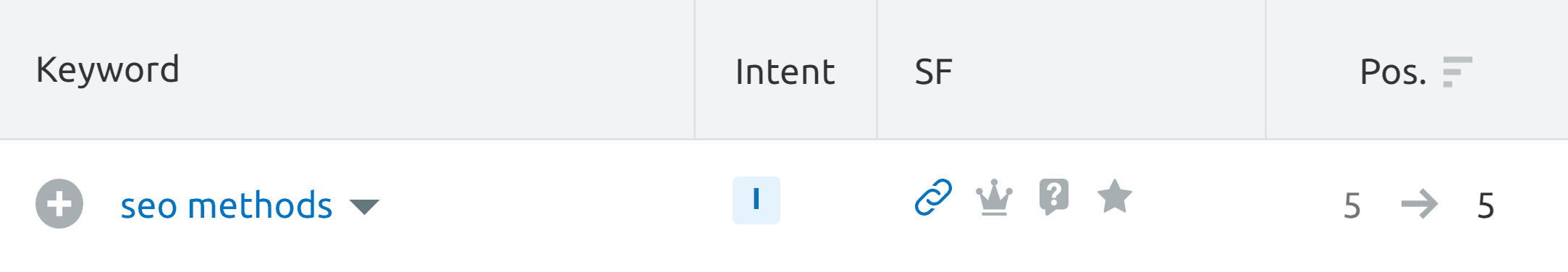

For example, I rank on page 1 for keywords like “SEO methods”, even though I didn’t optimize for that term.

Why? Because Google is smart enough to figure out that “SEO methods” and “SEO techniques” are basically the same.

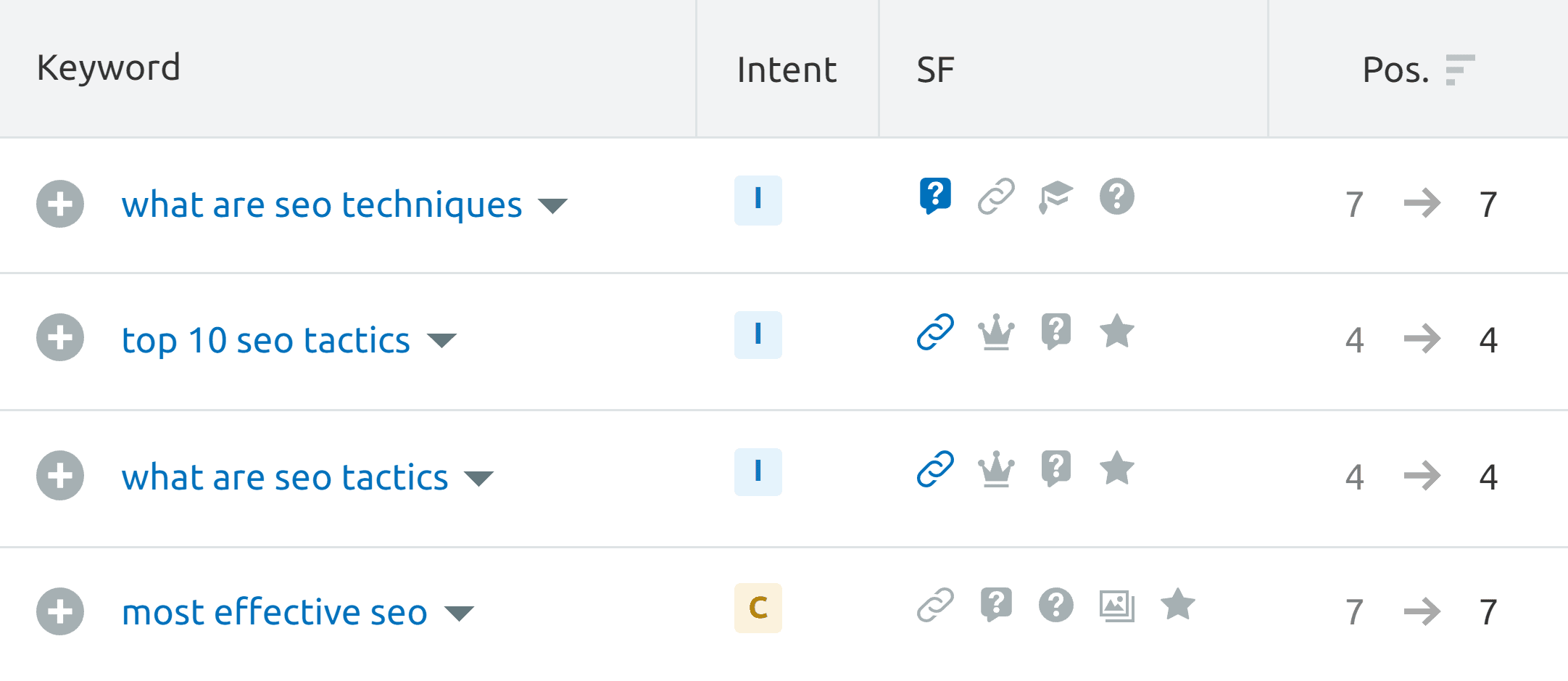

OK, how about long tails? Yep, I rank for a TON of them:

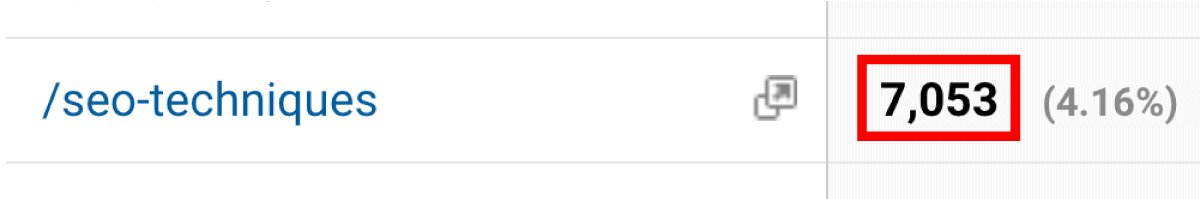

The result? This one page brings me over seven thousand visitors a month from Google…

So what’s the secret?

Well first, you want to make your content SUPER in-depth. We already covered that. But it bears repeating.

Because when you publish meaty content, you rank for hundreds of long tail keywords automatically.

Need a hint on what extra sections to include? Just check what keywords your page is already ranking for in Search Console.

And here’s another pro tip:

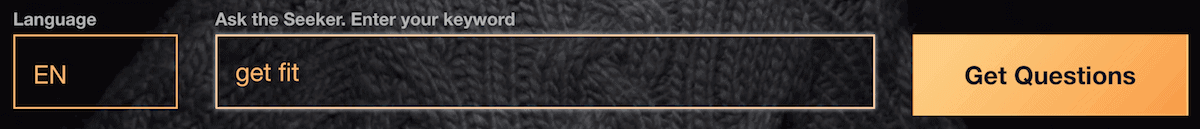

Find the most common questions people ask about your topic. Then, answer them in your content.

The easiest way to find questions: use Answer The Public.

Just type your main keyword into the box…

And you’ll get a massive list of questions…

The best part? These answers give you a shot to rank as a Featured Snippet.

After all: why rank #1 when you can rank #0?

Find High-Impression Keywords

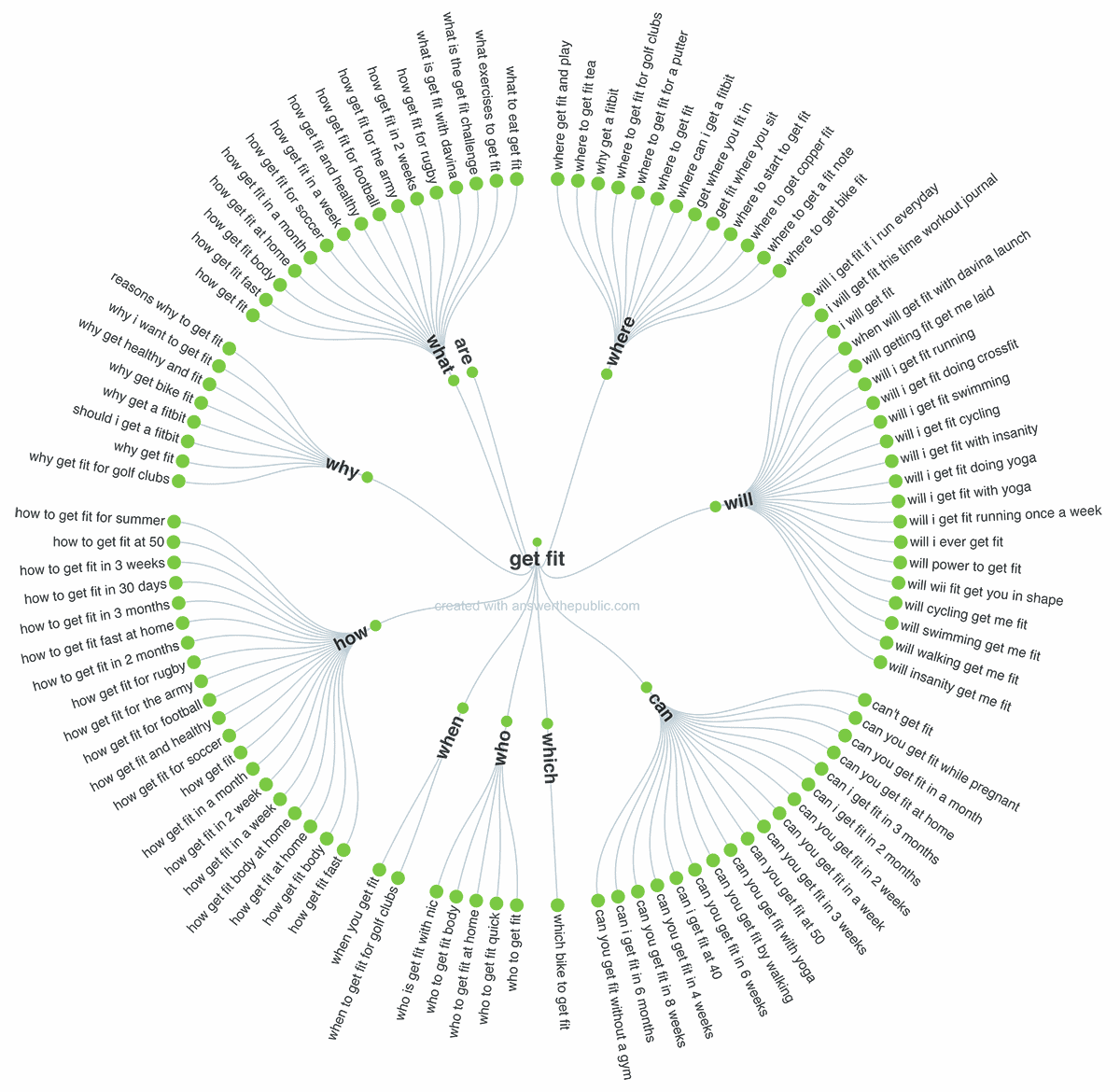

I already showed you how to optimize keywords that rank 8-20.

But…

I also like to look for keywords that aren’t ranking, yet still get some impressions. Here’s an example:

That keyword is sitting at position 50-ish… yet the page was still seen nearly 200 times.

Which tells me: if that many people are seeing my page on the 5th page of results, wait until I hit the first page.

It’s gonna be nuts!

Chapter 4: Cool GSC Features

In this chapter I’m going to show you some of the coolest features in the Google Search Console.

First, I’ll teach you how you can use the Search Console to fix your schema.

Then, I’ll show you one of the quickest (and EASIEST) wins in SEO.

Power Up Important Pages With Internal Links

Make no mistake:

Internal links are SUPER powerful.

Unfortunately, most people use internal linking all wrong.

That’s the bad news.

The good news?

The Search Console has an awesome feature designed to help you overcome this problem.

This report shows you the EXACT pages that need some internal link love.

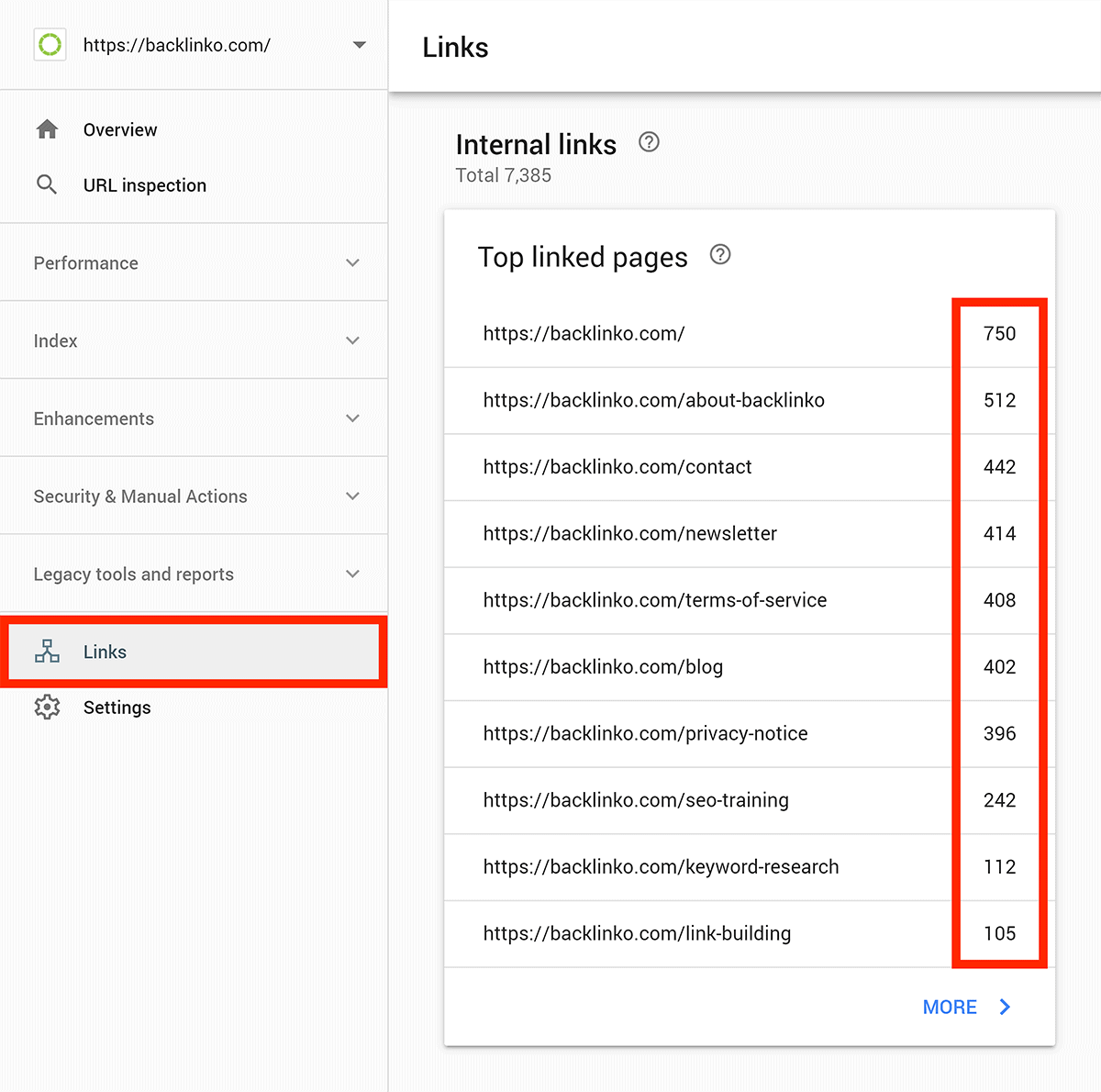

To access this report, hit “Links” in the GSC sidebar.

And you’ll get a report that shows you the number of internal links pointing to every page on your site.

This report is already a goldmine.

But it gets better…

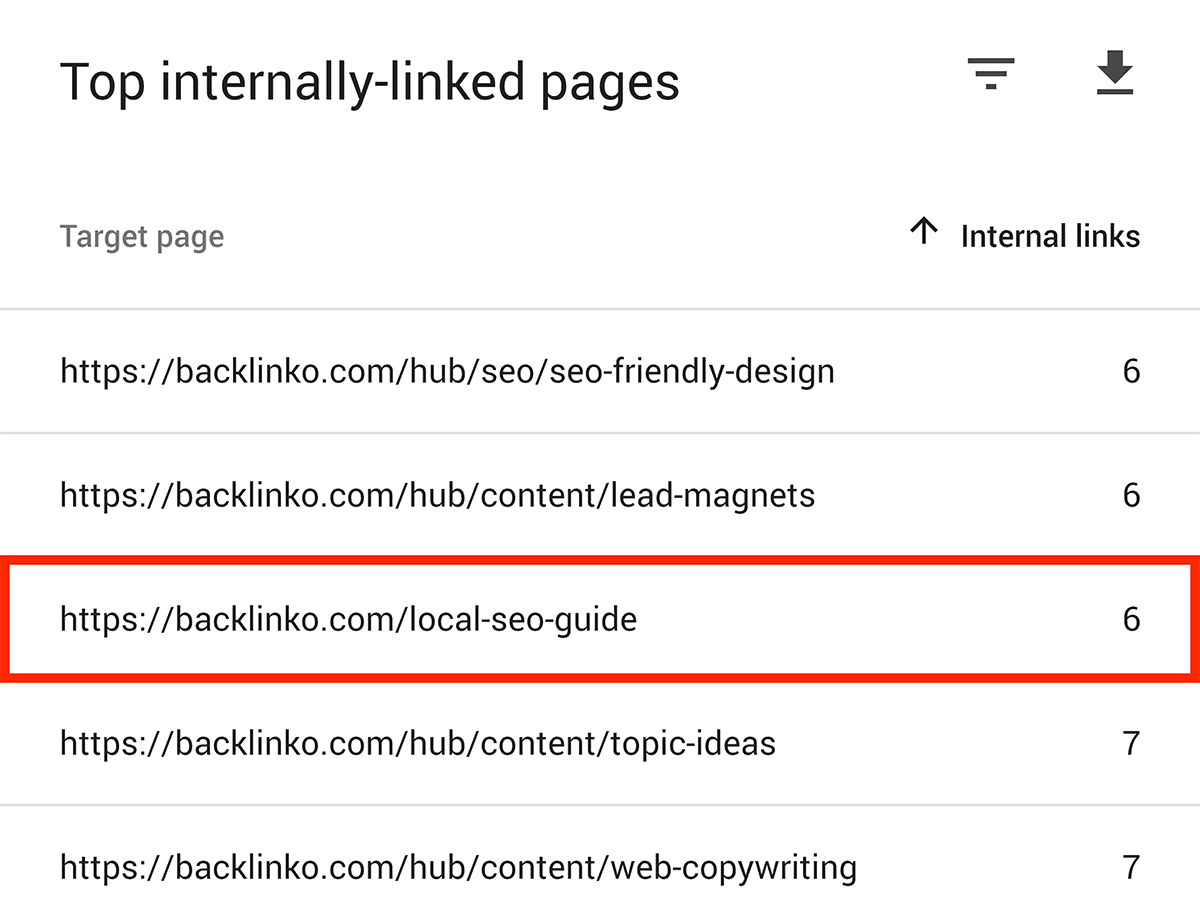

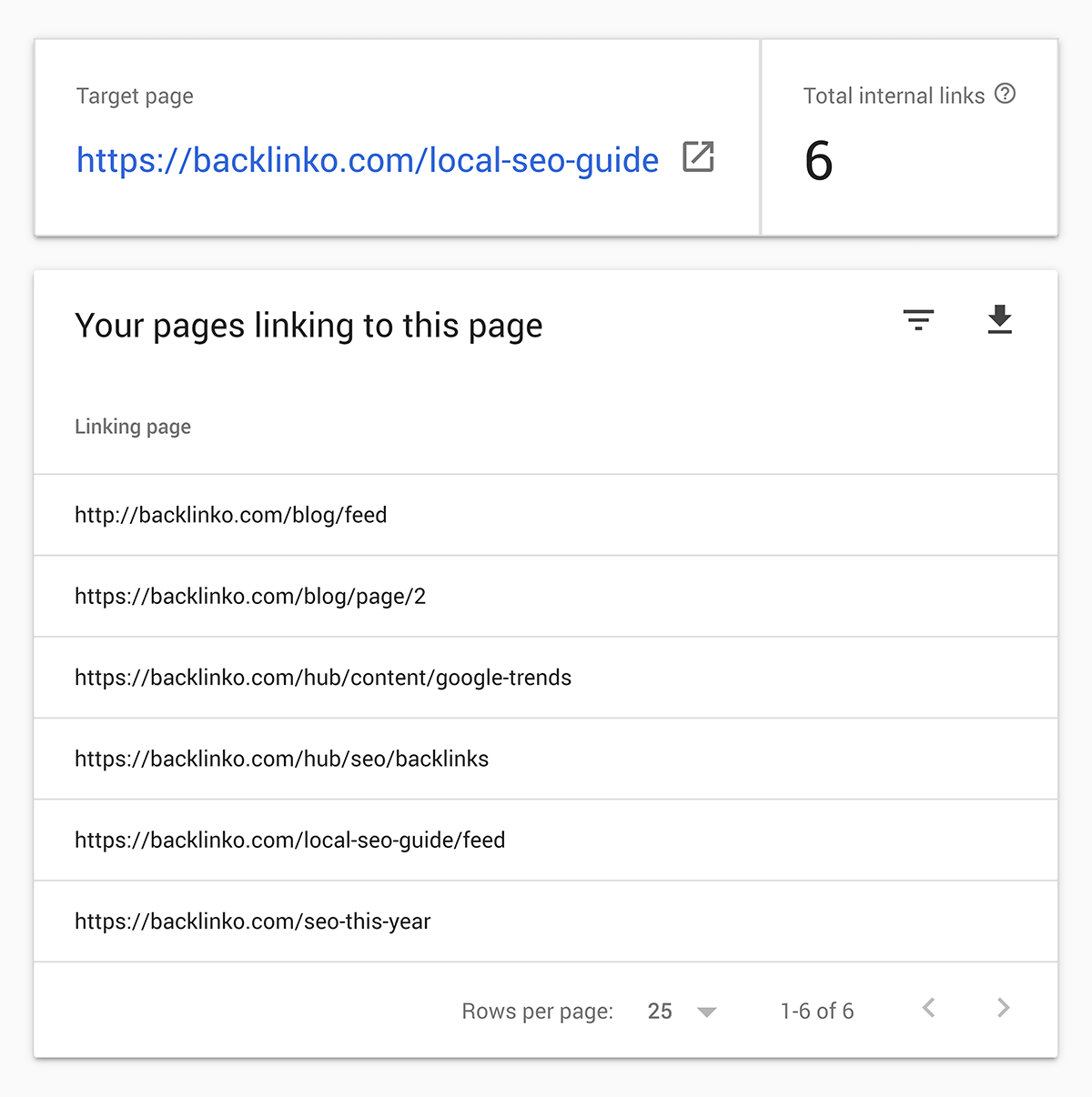

You can find the EXACT pages that internally link to a specific page. Just click on one of the URLs under the “Internal Links” section:

And you’ll get a list of all the internal links pointing to that page:

In this case, we only have 6 internal links pointing to our Local SEO Guide. That’s not good.

So:

Once you find a page that doesn’t have enough internal link juice, add some internal links that point to that page.

Time spent: under a minute.

Assessment: Win!
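Want to find under-linked pages across your whole site in one go? You can do the same counting on your own crawl data. This Python sketch assumes you already have a list of (linking page, linked page) internal links, for example from a site crawler. The paths and the min_links threshold are hypothetical, and each linking page is counted once per target.

```python
from collections import Counter


def under_linked_pages(links: list[tuple[str, str]], pages: list[str],
                       min_links: int = 10) -> list[tuple[int, str]]:
    """Return (internal link count, page) for pages below `min_links`, fewest links first.

    Each (source, target) pair is counted once, and self-links are ignored.
    """
    inbound = Counter(target for source, target in set(links) if source != target)
    return sorted((inbound[page], page) for page in pages if inbound[page] < min_links)


pages = ["/", "/blog", "/guide"]
links = [
    ("/", "/blog"),
    ("/", "/blog"),        # duplicate link from the same page, counted once
    ("/blog", "/guide"),
    ("/", "/guide"),
    ("/blog", "/"),
]
print(under_linked_pages(links, pages, min_links=2))  # [(1, '/'), (1, '/blog')]
```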

Pro Tip: Supercharge Key Posts With Internal Links From Powerhouse Pages

What’s a Powerhouse Page?

It’s a page on your site with lots of quality backlinks.

More backlinks = more link juice to pass on through internal links.

You can easily find Powerhouse Pages in the Google Search Console.

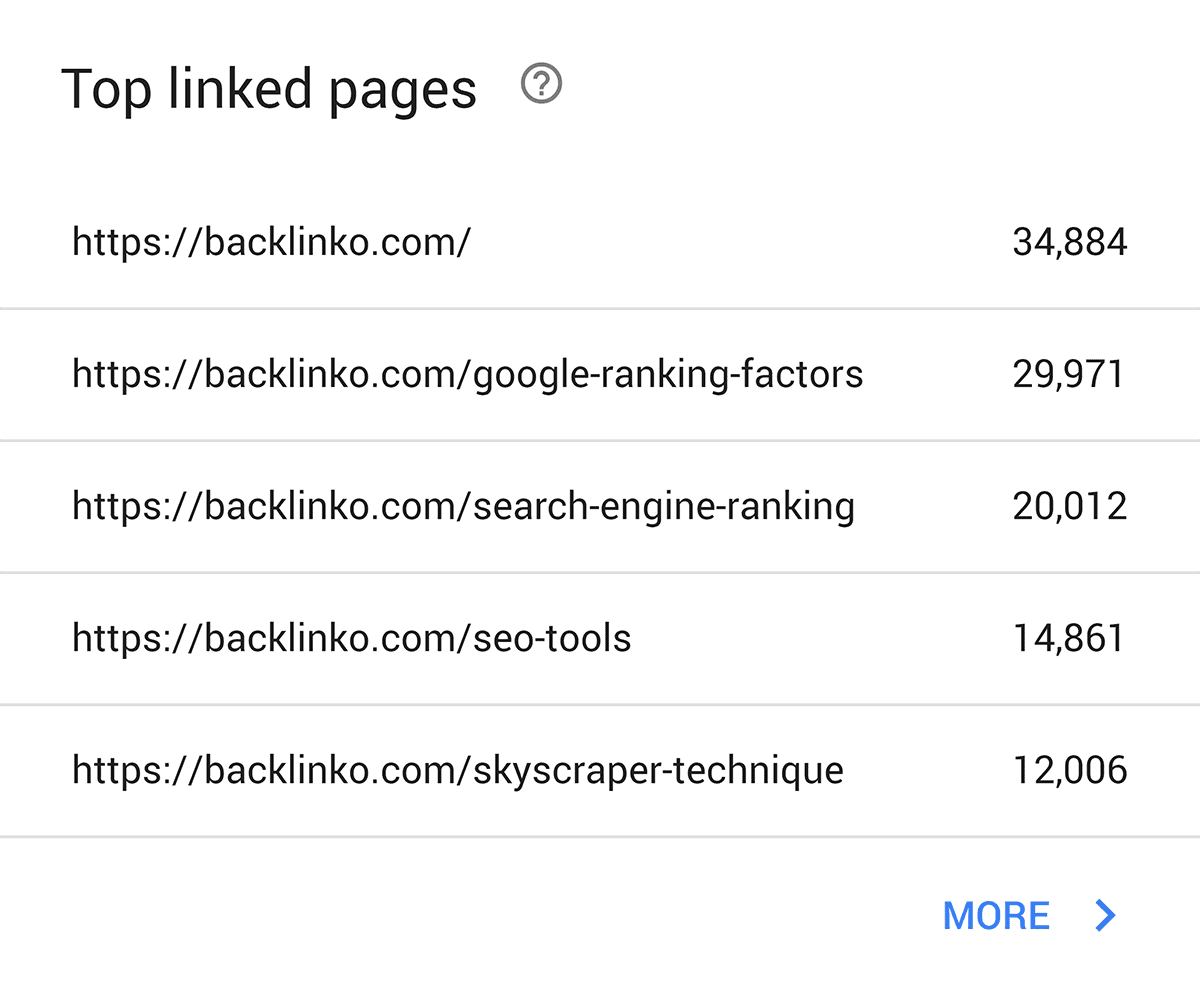

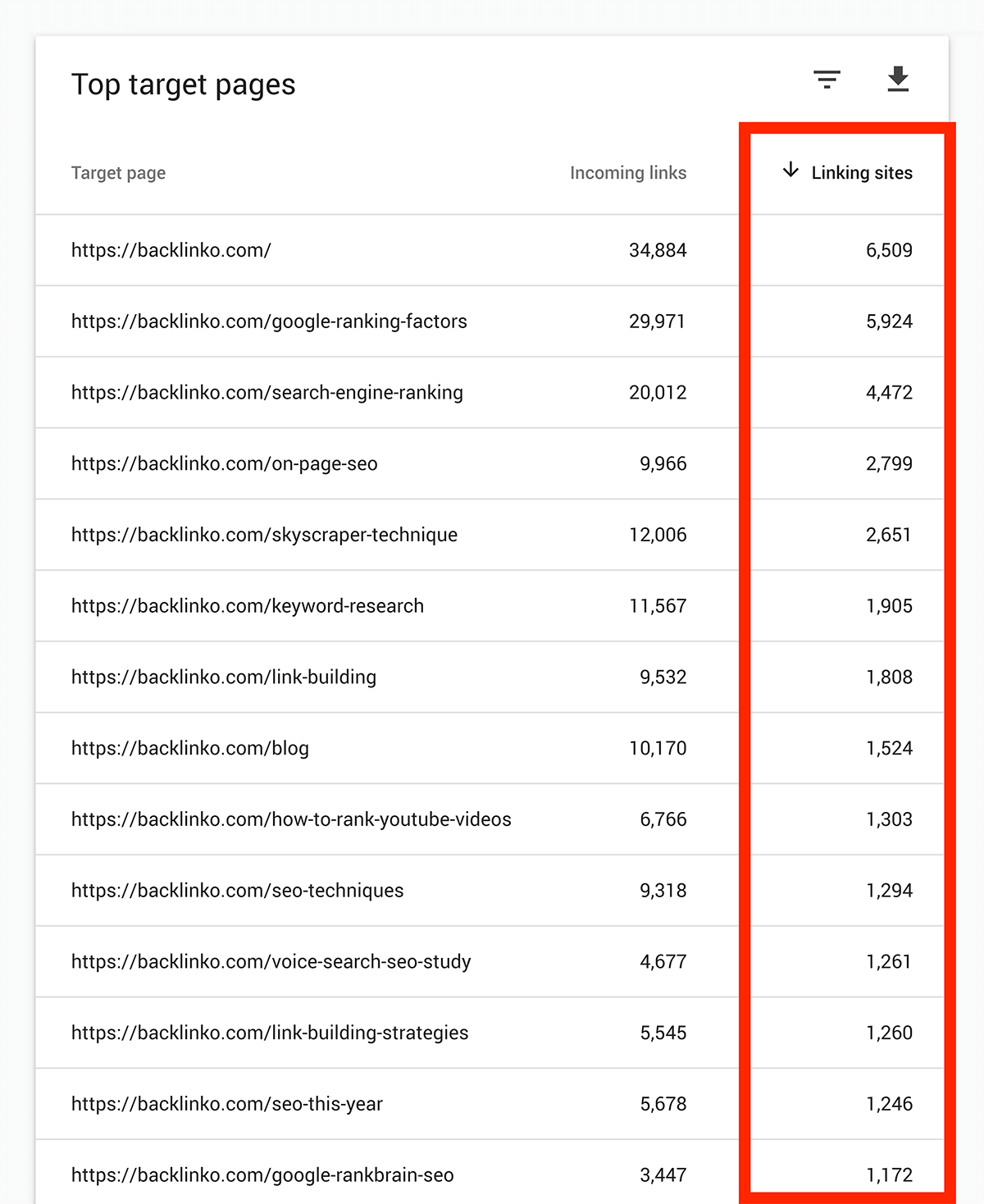

Just hit the “Links” button again. And you’ll see a section titled “Top linked pages”.

Click “More” for a full list.

By default, the report is ordered by the total number of backlinks. But I prefer to sort by number of linking sites:

These are your Powerhouse Pages.

And all you need to do is add some internal links FROM those pages TO the ones you want to boost.
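Sorting by linking sites just means counting distinct referring sites per page instead of raw links. Here’s a Python sketch of that idea, assuming you’ve exported your backlinks as (linking URL, target page) pairs. The sample data is made up, and this simple version treats each hostname as a separate site (so www and non-www versions count separately).

```python
from collections import defaultdict
from urllib.parse import urlparse


def rank_by_linking_sites(backlinks: list[tuple[str, str]]) -> list[tuple[int, str]]:
    """Rank target pages by how many distinct sites (hostnames) link to them."""
    sites_per_page: dict[str, set[str]] = defaultdict(set)
    for linking_url, target_page in backlinks:
        sites_per_page[target_page].add(urlparse(linking_url).hostname or "")
    # Most linking sites first; ties broken alphabetically by page.
    return sorted(((len(sites), page) for page, sites in sites_per_page.items()),
                  key=lambda item: (-item[0], item[1]))


backlinks = [
    ("https://site-a.example/post-1", "/guide"),
    ("https://site-a.example/post-2", "/guide"),  # same site, counted once
    ("https://site-b.example/resources", "/guide"),
    ("https://site-c.example/links", "/tools"),
]
print(rank_by_linking_sites(backlinks))  # [(2, '/guide'), (1, '/tools')]
```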

Easy, right?

Chapter 5: Advanced Tips and Strategies

Now it’s time for some advanced tips and strategies.

In this chapter you’ll learn how to use Google Search Console to optimize crawl budget, fix issues with mobile usability, and improve your mobile CTR.

Mastering Crawl Stats

If you have a small site (<1,000 pages), you probably don’t need to worry about crawl stats.

But if you have a huge site… that’s a different story.

In that case, it’s worth looking into your crawl budget.

What Is Crawl Budget?

Your Crawl Budget is the number of pages on your site that Google crawls every day.

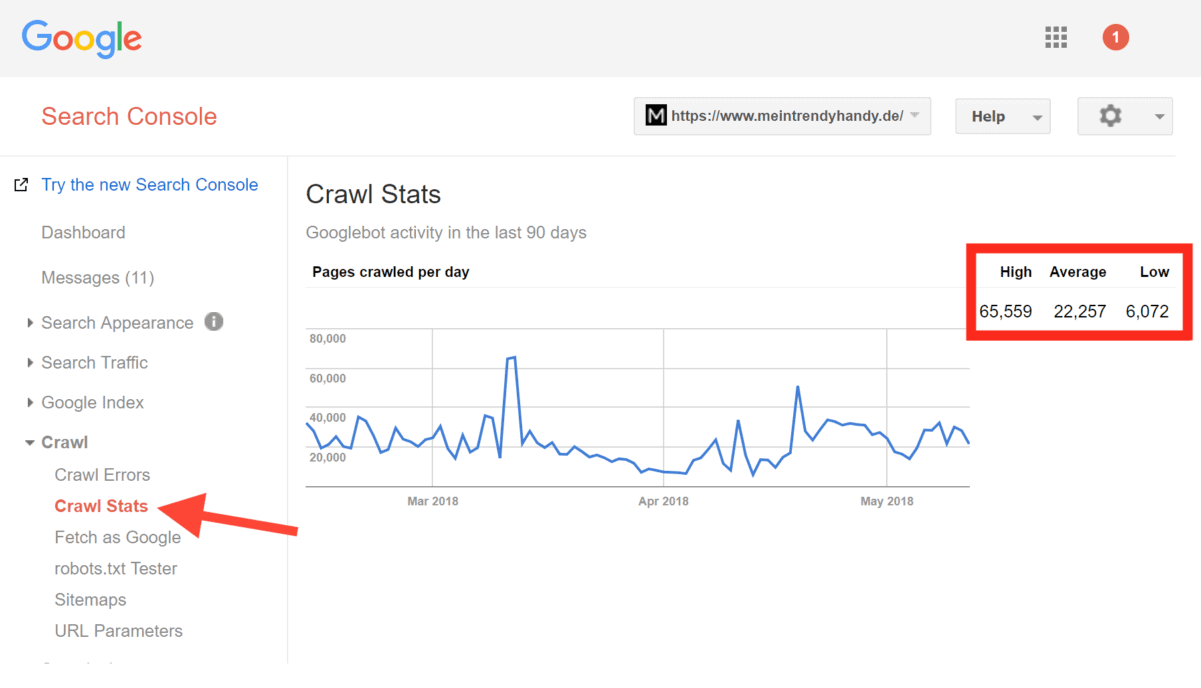

You can still see this number in the old “Crawl Stats” report.

In this case, Google crawls an average of 22,257 pages per day. So that’s this site’s Crawl Budget.

Why Is Crawl Budget Important For SEO?

Say you have:

200,000 pages on your website

and

A crawl budget of 2,000 pages per day

It could take Google 100 days to crawl your site.
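The math behind that estimate, assuming Google spends its whole budget on pages it hasn’t crawled yet (in practice it also recrawls pages, so it can take even longer):

```latex
\text{days to crawl the whole site} \approx \frac{\text{pages on the site}}{\text{pages crawled per day}} = \frac{200{,}000}{2{,}000} = 100
```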

So if you change something on one of your pages, it might take MONTHS before Google processes the change.

Or, if you add a new page to your site, Google’s going to take forever to index it.

So what can you do to get the most out of your Crawl Budget?

Three things…

First, stop wasting Crawl Budget on unnecessary pages

This is a biggie for ecommerce sites.

Most ecommerce sites let their users filter through products… and search for things.

This is great for sales.

But if you’re not careful, you can find yourself with THOUSANDS of extra pages that look like this:

yourstore.com/product-category/?size=small&orderby=price&color=green…

Unless you take action, Google will happily waste your crawl budget on these junk pages.

What’s the solution?

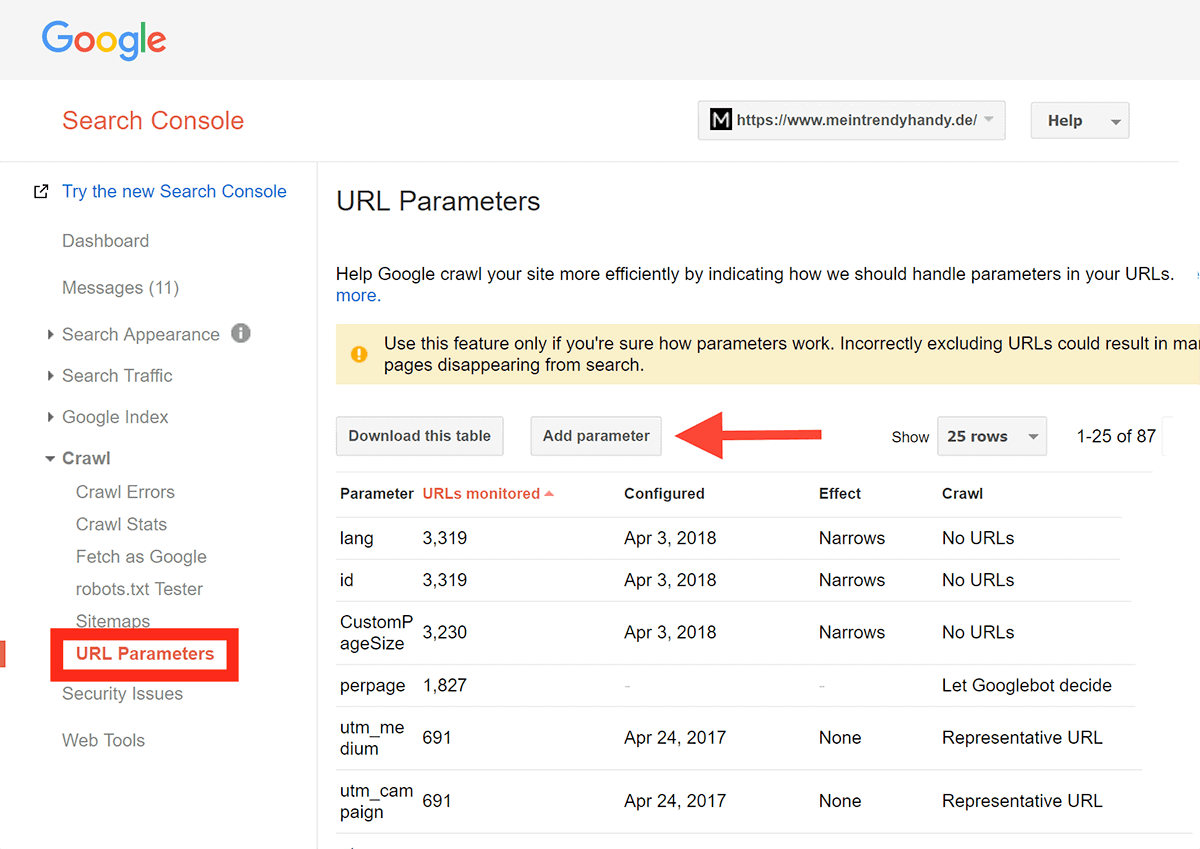

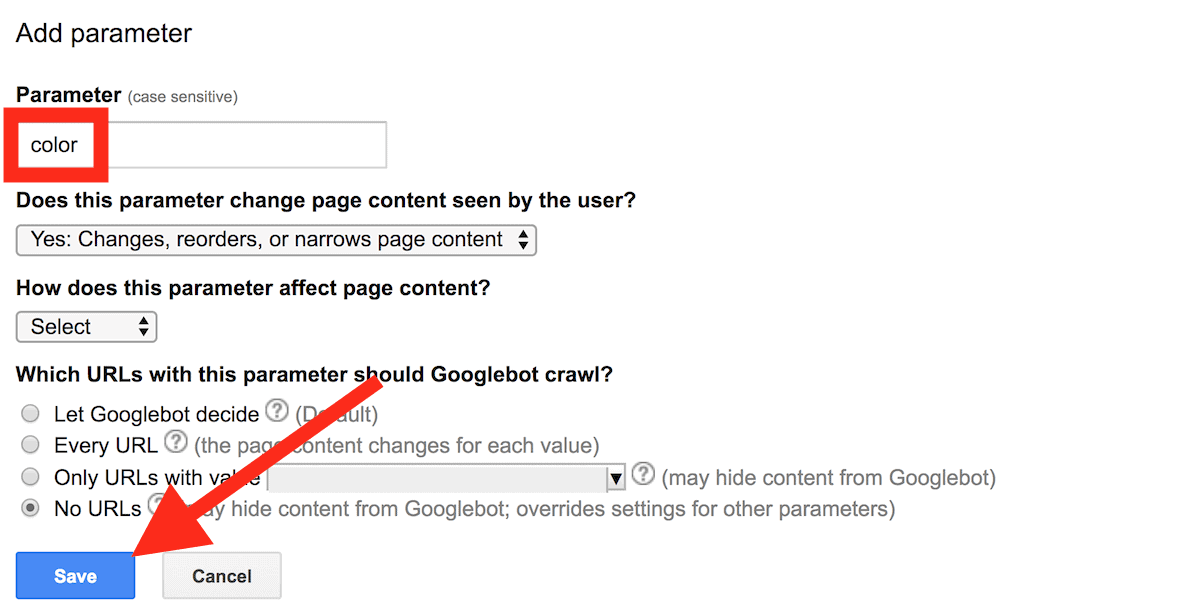

To set up URL parameters, click the “URL Parameters” link in the old GSC. Then hit “Add Parameter”.

Let’s say that you let users filter products by color. And each color has its own URL.

For example, the color URLs look like this:

yourstore.com/product-category/?color=red

You can easily tell Google not to crawl any URLs with that color parameter.

Repeat this for ALL parameters you don’t want Google to crawl.

And if you’re somewhat new to SEO, check in with an SEO specialist to make sure this is implemented correctly. When it comes to parameters, it’s easy to do more harm than good!

See how long it takes Google to download your page

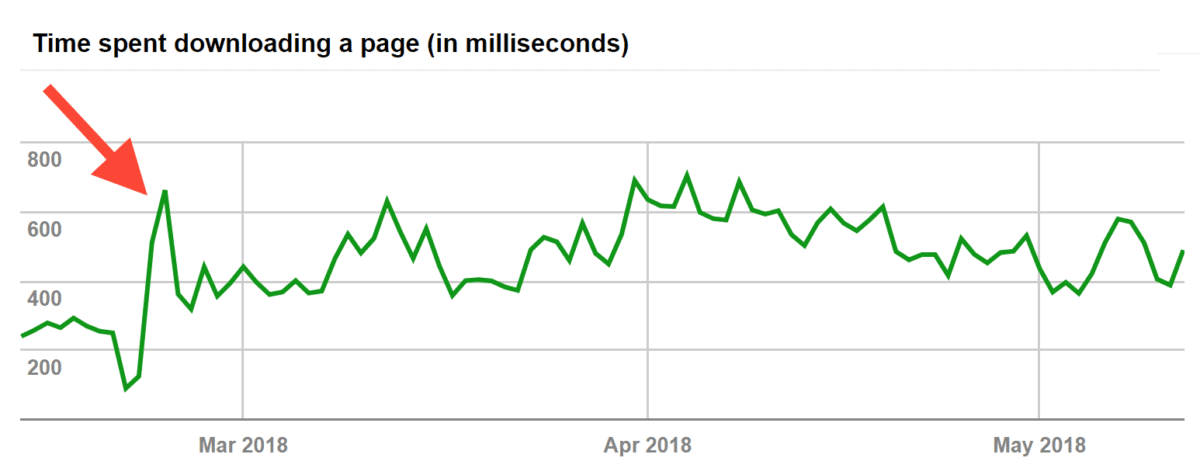

The Crawl Stats report in Search Console shows you the average time it takes Google to download your pages.

See that spike? It means that it suddenly took Google A LOT longer to download everything.
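If you track those daily download times yourself, you can flag spikes automatically. Here's a minimal Python sketch. The 2× median threshold, the `find_spikes` name, and the sample numbers are all arbitrary assumptions for illustration, not anything Google uses.

```python
from statistics import median


def find_spikes(download_ms: list[float], factor: float = 2.0) -> list[int]:
    """Return the indexes of days whose average download time is at
    least `factor` times the median for the whole period."""
    baseline = median(download_ms)
    return [day for day, ms in enumerate(download_ms) if ms >= factor * baseline]


# Hypothetical daily average download times, in milliseconds.
daily = [310, 295, 320, 305, 1450, 1390, 330, 300]
print(find_spikes(daily))  # [4, 5]
```

Using the median as the baseline keeps the spike days themselves from dragging the "normal" value up the way an average would.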

And this can KILL your Crawl Budget.

In fact, we have this quote straight from the horse’s mouth…

In a Google Webmaster Central blog post, Googler Gary Illyes explained:

“Making a site faster improves the users’ experience while also increasing crawl rate. For Googlebot a speedy site is a sign of healthy servers, so it can get more content over the same number of connections. On the flip side, a significant number of 5xx errors or connection timeouts signal the opposite, and crawling slows down.”

Bottom line? Make sure your site loads SUPER fast. You already know that this can help your rankings.

As it turns out, a fast-loading site squeezes more out of your crawl budget too.

Get more backlinks to your site

As if backlinks couldn’t be any more awesome, it turns out that they also help with your crawl budget.

In an interview with Eric Enge of Stone Temple Consulting, Matt Cutts said:

“The best way to think about it is that the number of pages that we crawl is roughly proportional to your PageRank. So if you have a lot of incoming links on your root page, we’ll definitely crawl that. Then your root page may link to other pages, and those will get PageRank and we’ll crawl those as well. As you get deeper and deeper in your site, however, PageRank tends to decline.”

The takeaway:

More backlinks = bigger crawl budget.
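To see why deeper pages tend to get less PageRank, here's the textbook PageRank power iteration (0.85 damping factor) run on a made-up seven-page site: a homepage, two category pages, and four product pages that link back home. This is the classic published formula, not Google's current ranking or crawl-scheduling system, and the site structure is hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Classic PageRank via power iteration. Every link target must
    also appear as a key in `links`."""
    nodes = list(links)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every page gets the "random jump" share first.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node, outlinks in links.items():
            if outlinks:
                # Split this page's rank evenly across its outgoing links.
                share = damping * rank[node] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Page with no outlinks: spread its rank across all pages.
                for target in nodes:
                    new_rank[target] += damping * rank[node] / n
        rank = new_rank
    return rank


site = {
    "home": ["cat1", "cat2"],
    "cat1": ["p1", "p2"],
    "cat2": ["p3", "p4"],
    "p1": ["home"], "p2": ["home"], "p3": ["home"], "p4": ["home"],
}

ranks = pagerank(site)
for page in ("home", "cat1", "p1"):
    print(page, round(ranks[page], 3))
```

The homepage ends up with the most PageRank, the category pages with less, and the product pages with the least, which matches Cutts' point that PageRank tends to decline as you go deeper into a site.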

Get The Most Out of “URL Inspection”

I already covered the URL Inspection tool in Chapter 3.

But there it was one step in a bigger process. So let’s take a look at URL Inspection as a standalone tool.

Specifically, I’m going to show you 3 cool things you can do with the URL Inspection tool.

Get new content indexed (in minutes)

URL Inspection is the FASTEST way to get new pages indexed.

Just published a new page?

Paste the URL into the inspection bar at the top of the GSC and press Enter.

Then hit “Request Indexing”…

…and Google will often index your page within a few minutes.

Use “URL Inspection” to reindex updated content

If you’re a regular Backlinko reader, you know that I LOVE updating old content.

I do it to keep my content fresh. But I also do it because it increases organic traffic (FAST).

For example, in this case study, I reveal how relaunching an old post got me 260.7% more organic traffic in just 14 days.

And you better believe I always use the URL Inspection tool to get my new content indexed ASAP.

Otherwise, I have to wait around for Google to recrawl the page on its own.

As Sweet Brown famously said: “Ain’t nobody got time for that!”

Identify Problems With Rendering

So what else can the “URL Inspection” tool do?

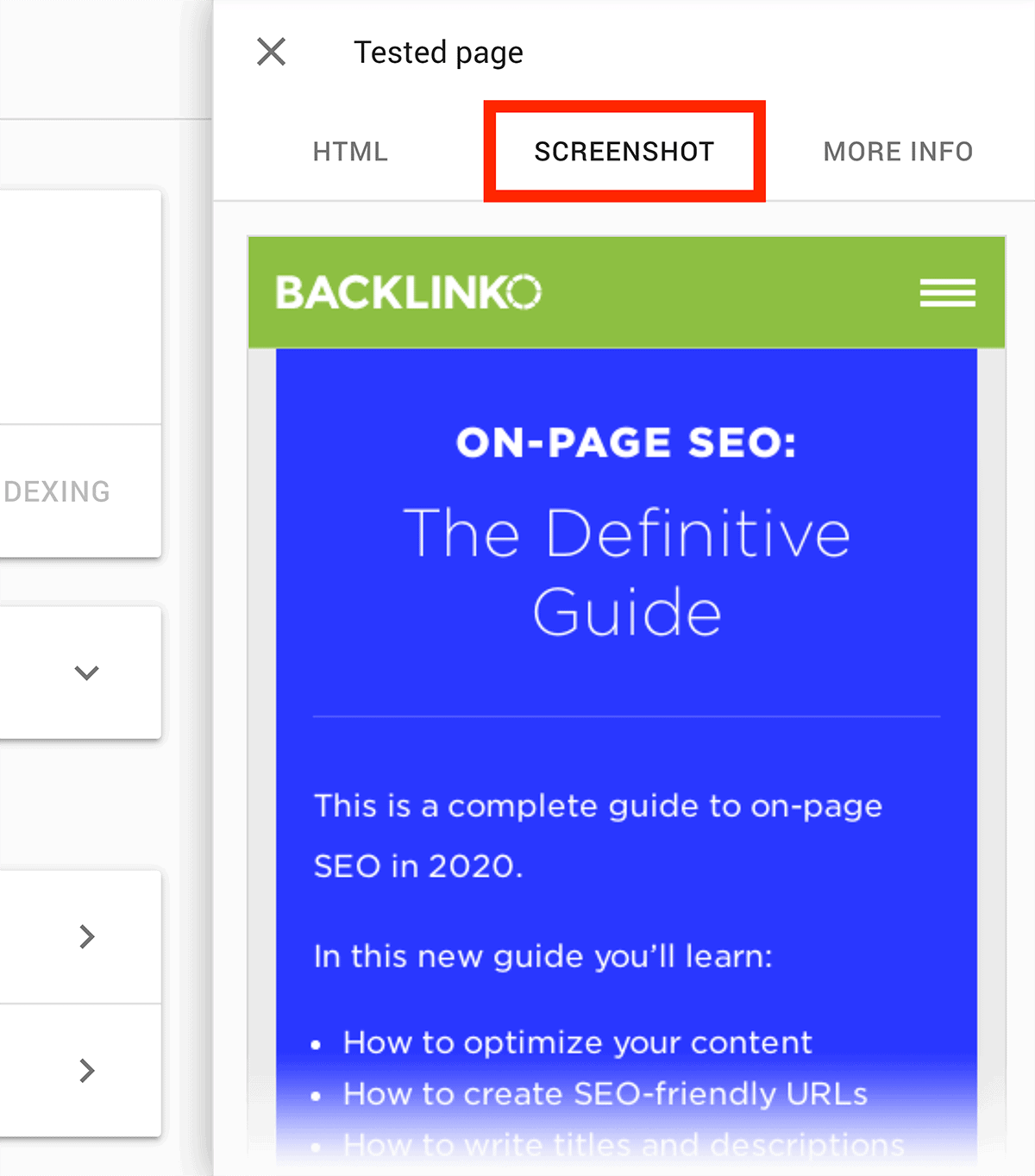

“Test Live URL” shows you how Google and users see your page.

You just need to hit the “View Tested Page” button.

Then hit “Screenshot”. And you’ll see exactly how Google sees your page.

Make Sure Your Site Is Optimized For Mobile (Unless You Like Losing Traffic)

As you might have heard, more people are searching with their mobile devices than with desktops.

(And this gap is increasing every day)

And Google’s “Mobile-First” index means that Google primarily uses the mobile version of your site for indexing and ranking.

Bottom line? Your site’s content and UX have to be 100% optimized for mobile.

But how do you know if Google considers your site mobile optimized?

Well, the Google Search Console has an excellent report called “Mobile Usability”. This report tells you if mobile users have trouble using your site.

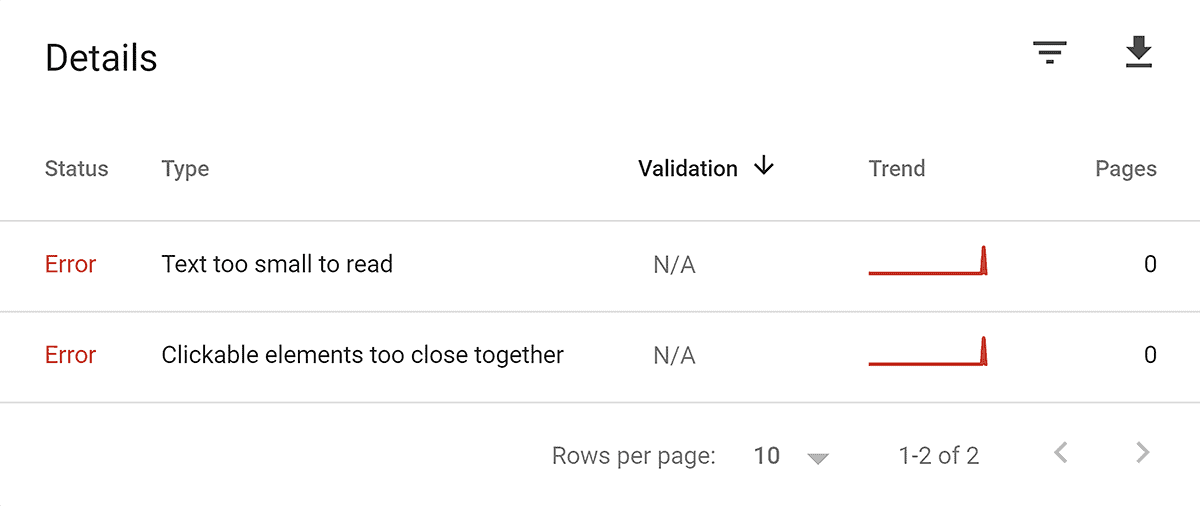

Here’s an example:

As you can see, the report is telling us about two mobile usability issues: “Text too small to read” and “Clickable elements too close together”.

All you need to do is click on one of the issues. And the GSC will show you:

1. Pages with this issue

2. How to fix the problem

Then, it’s just a matter of taking care of that issue.

And if you need more help optimizing your site for mobile users, make sure to read my guide to mobile optimization.

Compare Your CTR On Desktop and Mobile

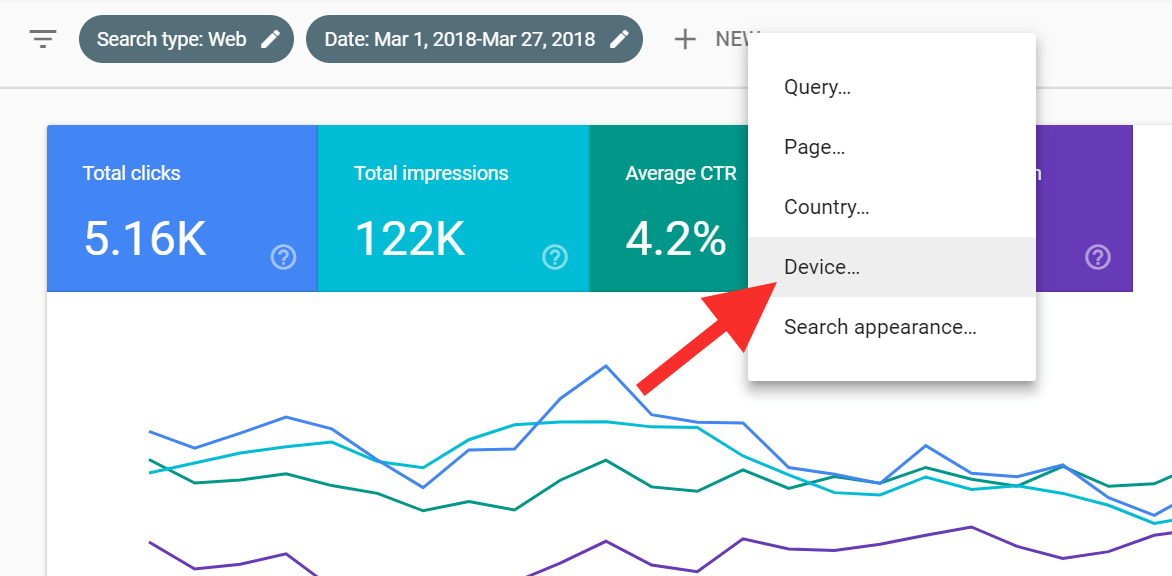

The new Performance report lets you easily compare mobile and desktop CTR.

Here’s how:

Fire up the Performance report. Then, hit “New+” to add a new filter, and select “Device”…

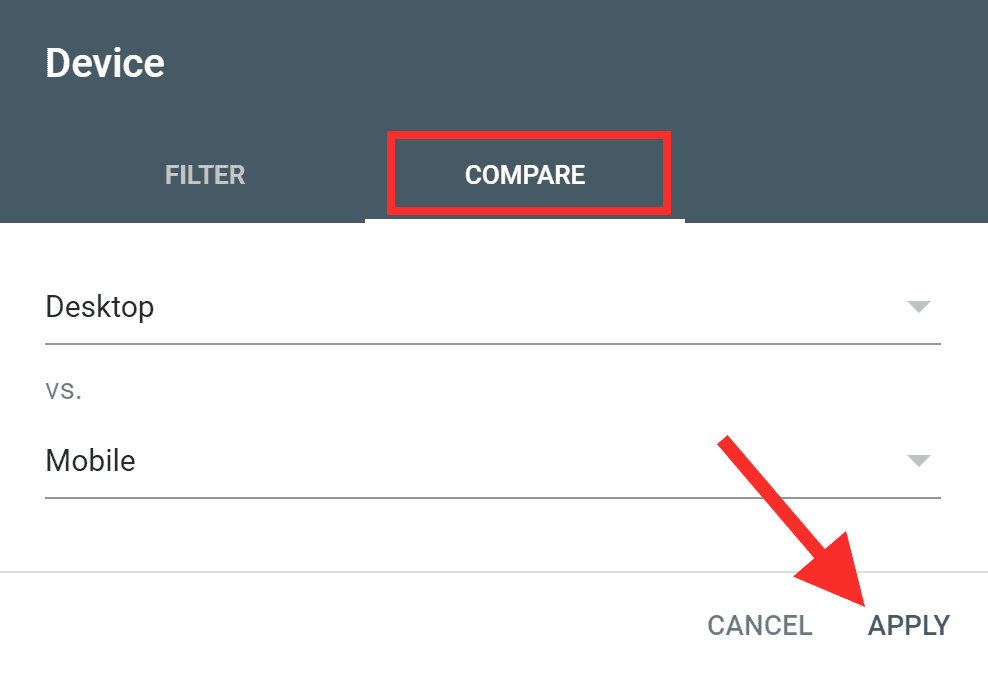

Click the “Compare” tab in the popup, select “Desktop vs Mobile”, and hit “Apply”…

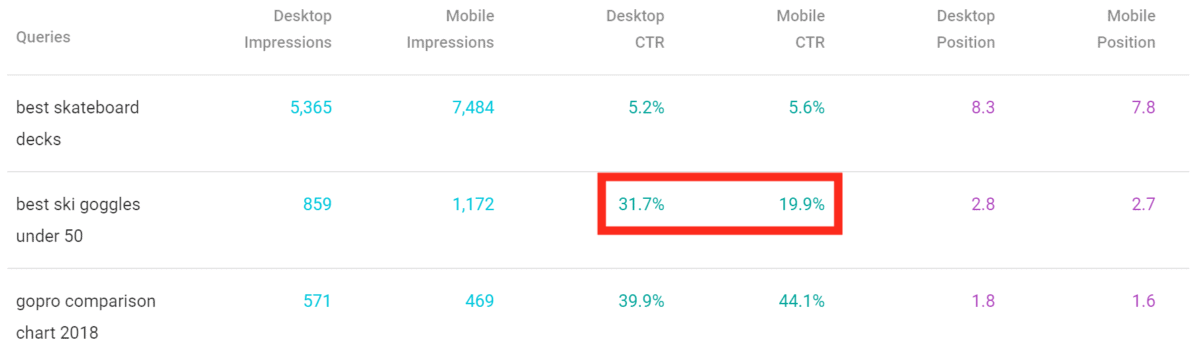

You’ll get a list of queries, with separate stats for mobile and desktop.

Of course, it’s normal for mobile and desktop CTRs to be a little bit different.

But if you see a BIG difference, it could be that your title and description tags don’t appeal to mobile searchers.

And that’s something you’d want to fix.
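If you export that comparison (for example, as a CSV), a few lines of Python can surface the queries with the biggest gaps. This is a minimal sketch: the `DeviceStats` and `flag_ctr_gaps` names, the 50% relative-gap threshold, and the sample queries and numbers are all made up, so tune the threshold to your own data.

```python
from dataclasses import dataclass


@dataclass
class DeviceStats:
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        # CTR = clicks / impressions (0 when there were no impressions).
        return self.clicks / self.impressions if self.impressions else 0.0


def flag_ctr_gaps(rows, min_gap: float = 0.5):
    """Return (query, desktop CTR, mobile CTR) for queries whose mobile CTR
    differs from desktop CTR by more than `min_gap` (relative, 0.5 = 50%)."""
    flagged = []
    for query, desktop, mobile in rows:
        if desktop.ctr == 0:
            continue  # no desktop baseline to compare against
        relative_gap = abs(mobile.ctr - desktop.ctr) / desktop.ctr
        if relative_gap > min_gap:
            flagged.append((query, round(desktop.ctr, 3), round(mobile.ctr, 3)))
    return flagged


rows = [
    ("seo tools", DeviceStats(120, 2_000), DeviceStats(110, 2_100)),
    ("link building", DeviceStats(300, 4_000), DeviceStats(90, 3_800)),
]
print(flag_ctr_gaps(rows))
```

A relative gap (rather than raw percentage points) keeps low-CTR queries from being ignored just because their absolute numbers are small.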